What Is AI Bias? A Friendly Guide to Unfair Algorithms

At its core, AI bias is what happens when an artificial intelligence system produces unfair or inaccurate results that discriminate against certain groups of people. It’s not that the machine has developed its own opinions; it's a direct reflection of the skewed data or flawed human assumptions baked into its design.

Think of it like teaching a student using a history book that only tells one side of the story. The student isn't being malicious, but their understanding will be fundamentally incomplete and prejudiced. That's pretty much what happens with AI.

Understanding AI Bias Without the Jargon

Ever wonder if the AI you use every day has its own hidden preferences? It’s a great question, and the answer cuts right to the heart of AI bias. It’s not about a machine forming a personal dislike for something. Instead, it’s about a system spitting out prejudiced results because it was trained on flawed information from the start.

Let's use a practical example. Imagine you’re training an AI to be a hiring manager. If you feed it 10 years of hiring data from a company that almost exclusively hired men for engineering jobs, the AI will quickly learn that pattern. It will start to favor male candidates, not because it has a conscious agenda, but because it’s simply mimicking the bias it was shown. This is a classic case of garbage in, garbage out.

Why We Should Say "AI Has Biases"

Here’s a small but important distinction: it's more accurate to say an AI has biases rather than it is biased. This isn't just about splitting hairs. It reminds us that an AI doesn't have personal beliefs, intentions, or motives like a human does. It's a complex mathematical system built to find and replicate patterns.

As AI ethicist Dr. Timnit Gebru puts it, "An AI system is a mirror of the data it's trained on, and if that data is biased, the AI will be biased. The machine isn't the one with the problem; it's a reflection of our problems."

This insight gets to a critical point. When we blame the AI itself, we sidestep the real issue: the flawed data we feed it and the flawed assumptions we build into it. Understanding that AI systems act as mirrors, reflecting and even amplifying our own societal biases, puts the responsibility right back where it belongs—on the creators and the organizations deploying the technology. For a refresher on the basics, you might find our guide explaining artificial intelligence simply helpful.

The Most Common Types of AI Bias

Bias can creep into AI models from a surprising number of places. To help you spot them, it's useful to know the most common forms they take. Think of these as the usual suspects in any investigation into unfair AI.

A Quick Guide to Common Types of AI Bias

| Type of Bias | Simple Explanation | Where It Comes From |
|---|---|---|
| Data Bias | The AI learns from information that doesn't accurately represent the real world. | Using historical data that reflects past inequalities or datasets that over-represent one group and under-represent another. |
| Algorithmic Bias | The AI's programming or internal logic creates unfair shortcuts or rules. | The design of the algorithm itself might unintentionally prioritize certain factors over others in a discriminatory way. |
| Societal Bias | The AI absorbs the widespread prejudices and stereotypes present in human society. | This often happens when training models on vast amounts of text and images from the internet, which contains our collective biases. |
| Measurement Bias | The way data is collected, labeled, or measured is flawed, leading to skewed results. | This can be caused by using inconsistent data points or relying on proxies that don't truly measure what they claim to. |

Getting a handle on these different types is the first step toward building fairer and more reliable AI systems. Each one presents a unique challenge, but they all stem from a common source: human error and oversight.

Where Does AI Bias Come From?

AI bias doesn't just materialize out of thin air. To really get a handle on it, you have to put on your detective hat and trace it back to the source. It's almost never a single, obvious culprit. Instead, it’s usually a tangled web of subtle issues that conspire to produce unfair results. Let's dig into the main suspects.

Flawed Data: The Primary Suspect

More often than not, the trail leads back to the data used to train the model. Think of an AI as a student and its training data as the textbooks it studies. If those textbooks are filled with outdated stereotypes, historical inequities, and skewed information, the student is going to develop a pretty warped view of the world. The AI is no different.

A now-infamous example is an AI recruiting tool that was trained on 10 years of a company's own hiring history. Because the tech industry has long been dominated by men, the data reflected that reality. The AI quickly learned that the "best" candidates looked a lot like the ones who had been hired before—mostly men. It even started penalizing resumes that mentioned things like "women's chess club captain."

The AI wasn't programmed to be sexist. It was programmed to find patterns, and it found a powerful one: men got hired. So, it turned that correlation into a rule. This is a classic case of garbage in, garbage out—biased data leads directly to a biased system.
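To make the "pattern becomes a rule" step concrete, here is a deliberately tiny sketch in Python. It is not how any real recruiting tool worked: it assumes a hypothetical model that scores each resume word by the historical hire rate of resumes containing it, trained on a made-up history in which resumes mentioning "women's" were rejected.

```python
from collections import defaultdict

# Made-up historical data: (words on the resume, 1 = hired, 0 = rejected).
# The rejections of resumes containing "women's" stand in for past bias.
HISTORY = [
    ({"python", "chess"}, 1),
    ({"python", "java"}, 1),
    ({"java", "chess"}, 1),
    ({"python", "women's", "chess"}, 0),
    ({"java", "women's"}, 0),
    ({"python"}, 1),
]


def learn_word_rates(history):
    """For each word, return the share of past resumes containing it that were hired."""
    seen = defaultdict(int)
    hired = defaultdict(int)
    for words, outcome in history:
        for word in words:
            seen[word] += 1
            hired[word] += outcome
    return {word: hired[word] / seen[word] for word in seen}


def score(resume_words, rates):
    """Average the learned rates of the words the model has seen before."""
    known = [rates[w] for w in resume_words if w in rates]
    return sum(known) / len(known) if known else 0.0


rates = learn_word_rates(HISTORY)
print(rates["women's"])                              # 0.0
print(score({"python", "chess"}, rates))             # about 0.71
print(score({"python", "chess", "women's"}, rates))  # about 0.47, same skills
```

Two resumes with identical skills get different scores purely because the history penalized one word; nothing in the code mentions gender.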

As countless data scientists like to say, "Your model is only as good as your data." The model itself isn't the real problem. It’s a symptom. The AI acts as a powerful amplifier for the biases we feed it through our data.

And no, just throwing "more data" at the problem won't fix it if the new data has the same built-in flaws. Tackling data bias requires a very intentional, careful effort to find and curate datasets that are diverse and truly representative.

Algorithmic Bias: When the Rules Themselves Are Unfair

Sometimes the problem isn't just the data, but the very design of the algorithm. Developers have to make decisions about what the AI should optimize for, and those choices can accidentally bake in unfair shortcuts.

Take an algorithm built to predict who might default on a loan. It might use proxies—indirect data points like a person's zip code—to help make its decision. On the surface, that seems pretty harmless.

But history tells a different story. Decades of housing segregation and economic redlining mean that zip codes can be very tightly linked to race and income. The algorithm, without any malicious intent, might learn that people from certain neighborhoods are "high-risk." It ends up creating a system that discriminates based on where you live, perpetuating old biases with new technology.
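The sketch below shows how a proxy works, under assumptions that are all hypothetical: two placeholder zip codes with made-up historical default rates, and a lending rule that only ever looks at the zip code. Race is recorded solely so we can audit the outcome afterward; the rule never reads it.

```python
# Hypothetical historical default rates per neighborhood (not real data).
HISTORICAL_DEFAULT_RATE = {"zip_a": 0.05, "zip_b": 0.30}

# Applicants: (zip code, race). Race is kept only for auditing; the rule ignores it.
APPLICANTS = [
    ("zip_a", "white"), ("zip_a", "white"), ("zip_a", "white"), ("zip_a", "black"),
    ("zip_b", "black"), ("zip_b", "black"), ("zip_b", "black"), ("zip_b", "white"),
]


def approve(zip_code, max_default_rate=0.2):
    """A 'race-blind' rule: approve only if the applicant's zip code looked low-risk historically."""
    return HISTORICAL_DEFAULT_RATE[zip_code] < max_default_rate


def approval_rate_by_race(applicants):
    """Audit step: approval rate per race, computed after the fact."""
    totals, approved = {}, {}
    for zip_code, race in applicants:
        totals[race] = totals.get(race, 0) + 1
        approved[race] = approved.get(race, 0) + int(approve(zip_code))
    return {race: approved[race] / totals[race] for race in totals}


print(approval_rate_by_race(APPLICANTS))  # {'white': 0.75, 'black': 0.25}
```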

This kind of bias can creep in a few ways:

- Correlation vs. Causation: The model mistakes a simple correlation (like zip code and loan defaults) for a cause-and-effect relationship, leading it to make faulty judgments.

- Problem Framing: The way developers define "success" for the model is crucial. If success is measured only by profit margins, the AI has no incentive to care about fairness.

- The Black Box Problem: Extremely complex models can become so convoluted that even their creators don't know exactly how they're making decisions. This makes it incredibly difficult to find and fix hidden biases in their logic.

These examples show that bias isn't always an accident of data; it can be woven directly into the fabric of the system.

Societal Bias: The Mirror Effect

Finally, there's the biggest and toughest source of all: societal bias. This is the bias that reflects the world as it is, not as we'd like it to be. AI models, especially massive ones like today's large language models, are trained on staggering amounts of text and images from the internet. They learn from our blogs, our news articles, our social media posts, and our forums.

They are, in essence, a mirror held up to humanity. And that mirror reflects everything—our knowledge and creativity, but also our prejudices, stereotypes, and cultural blind spots.

For instance, if you ask an image generator to draw a "CEO," you'll likely get a stream of pictures of white men. This isn't because the AI has a conscious opinion about corporate leadership. It’s because the mountain of data it was trained on—all those articles, photos, and biographies—has overwhelmingly associated the role of CEO with that demographic.

The AI is just spitting back the most dominant patterns it learned from us. Fixing this goes beyond a simple technical tweak. It demands a conscious effort to build systems that reflect the equitable world we want, not just the imperfect one we have.

How AI Bias Affects People in the Real World

It's easy to get lost in the technical talk of flawed data and biased algorithms. But when these systems leave the lab and enter our daily lives, their mistakes carry a real, human cost. This is where abstract concepts become harmful outcomes, impacting everything from a person's health to their career.

These aren't just hypothetical risks; they are documented events that show just how much is at stake. Let's look at some of the most powerful case studies that bring the true impact of AI bias into sharp focus.

The Problem with Facial Recognition

One of the most damning examples of AI bias comes from the landmark "Gender Shades" project. Researchers put commercial facial analysis systems from major tech companies to the test, measuring how accurately they classified the gender of faces, and the results were startling. While the systems worked almost perfectly for light-skinned men, they faltered badly with nearly everyone else.

This wasn't just a minor glitch. The study revealed massive disparities in accuracy. For darker-skinned women, the error rates soared as high as 34.7%. For lighter-skinned men? A mere 0.8%. You can dig deeper into these findings on AI bias and its effects.

Stop and think about what that failure rate means. When law enforcement uses this tech to identify a suspect, an innocent person could be wrongly accused. In a world where facial recognition is used for everything from unlocking your phone to airport security, that kind of accuracy gap creates a two-tiered system of fairness.

"The same systems that failed to see women of color, especially Black women, are now being sold to law enforcement and other government agencies. This is not a game." – Joy Buolamwini, Founder of the Algorithmic Justice League

This study was a massive wake-up call. It was definitive proof that without truly representative data, AI systems will inevitably amplify society’s existing inequalities, putting already marginalized communities at an even greater disadvantage.
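The technique at the heart of audits like Gender Shades is disaggregated evaluation: reporting error rates per subgroup instead of one overall number. A minimal sketch with made-up counts (not the study's data) shows how a reassuring overall figure can hide a large gap.

```python
from collections import Counter

# Made-up evaluation results: (subgroup, was the prediction correct?).
RESULTS = (
    [("lighter_male", True)] * 99 + [("lighter_male", False)] * 1
    + [("darker_female", True)] * 13 + [("darker_female", False)] * 7
)


def error_rates(results):
    """Return the overall error rate and the error rate for each subgroup."""
    totals, errors = Counter(), Counter()
    for group, correct in results:
        totals[group] += 1
        errors[group] += 0 if correct else 1
    overall = sum(errors.values()) / sum(totals.values())
    per_group = {group: errors[group] / totals[group] for group in totals}
    return overall, per_group


overall, per_group = error_rates(RESULTS)
print(f"overall: {overall:.1%}")    # overall: 6.7%
for group, rate in per_group.items():
    print(f"{group}: {rate:.1%}")   # lighter_male: 1.0%, then darker_female: 35.0%
```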

When Healthcare Algorithms Cause Harm

The stakes get terrifyingly high when bias seeps into healthcare. In a now-infamous case, a major U.S. health system used an algorithm to predict which patients would need extra medical care. To do this, the AI relied on a seemingly logical shortcut: a patient's past healthcare costs. The thinking was that people who cost more to treat must be sicker.

But that assumption was dead wrong.

Because of deep-seated inequities in the U.S. healthcare system, Black patients historically spend less on care than white patients, even when they have the same health conditions. This isn't a choice; it's the result of unequal access, economic disparities, and a well-earned distrust of the medical establishment.

The algorithm, completely unaware of this social context, learned a dangerous lesson. It concluded that because Black patients had lower healthcare costs, they must be healthier. This led to a devastating outcome where Black patients had to be far sicker than their white counterparts before the system would flag them for the same level of extra care.

The impact was measurable and severe:

- The algorithm drastically underestimated the health needs of Black patients.

- The number of Black patients identified for extra care programs was cut by more than 50%.

- Researchers found that fixing this one biased assumption would have more than doubled the number of Black patients getting the help they needed.

This is a chilling reminder of how a supposedly "objective" algorithm can perpetuate life-threatening discrimination.
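The failure here lies in the choice of label: the model was asked to predict cost, which was treated as a stand-in for need. The toy sketch below uses entirely made-up patients and a hypothetical assumption that group B incurs 60% of group A's cost at the same level of need, to show how ranking by the proxy changes who gets flagged.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    need: float  # true health need (not directly observed in practice)
    cost: float  # past spending, the proxy the model actually learns


# Hypothetical assumption: group B's spending is 60% of group A's at equal need.
PATIENTS = [Patient("A", n, n * 1.0) for n in (3, 5, 7, 9)] + [
    Patient("B", n, n * 0.6) for n in (4, 6, 8, 10)
]


def flag_for_extra_care(patients, key, slots):
    """Flag the `slots` highest-ranked patients according to `key`."""
    return sorted(patients, key=key, reverse=True)[:slots]


def count_group(patients, group):
    return sum(p.group == group for p in patients)


by_cost = flag_for_extra_care(PATIENTS, key=lambda p: p.cost, slots=4)
by_need = flag_for_extra_care(PATIENTS, key=lambda p: p.need, slots=4)
print("group B flagged when ranking by cost:", count_group(by_cost, "B"))  # 1
print("group B flagged when ranking by need:", count_group(by_need, "B"))  # 2
```

When ranking by cost, a group A patient with a need of 5 is flagged ahead of a group B patient with a need of 8.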

Bias in Hiring and Lending

The fallout from AI bias doesn't stop there; it extends directly into our financial and professional lives. Automated hiring tools and loan application systems are everywhere now, but they carry the same risks if not built with extreme care.

- Hiring Tools: Some AI-powered resume screeners were caught favoring male candidates. Why? Because they were trained on historical hiring data from male-dominated fields. The systems learned to associate words and experiences more common on men's resumes with "success," automatically filtering out equally qualified women.

- Loan Algorithms: When deciding who gets a loan, some algorithms have inadvertently recreated discriminatory redlining practices. By using proxies for race, like a person's zip code, these systems can deny loans to qualified applicants from minority neighborhoods, locking them out of opportunities for homeownership and building wealth.

These real-world stories are more than just cautionary tales—they are urgent calls to action. They reveal the immense human cost of getting AI wrong and make it clear that building fair, transparent, and accountable systems isn't just a technical challenge. It's a moral imperative.

Practical Strategies to Reduce AI Bias

After seeing how AI bias can cause real harm, it’s easy to feel a little overwhelmed. The problem is serious, no doubt about it. But the good news is that we aren't helpless. We have a growing set of tools and techniques to fight back, allowing us to shift from simply identifying problems to actively building fairer AI systems.

This isn’t about finding a single magic button to push. Instead, it’s about a methodical, multi-stage approach. Think of it like quality control on an assembly line—we need checks and balances at the beginning, in the middle, and at the end of the AI development process to catch and correct bias.

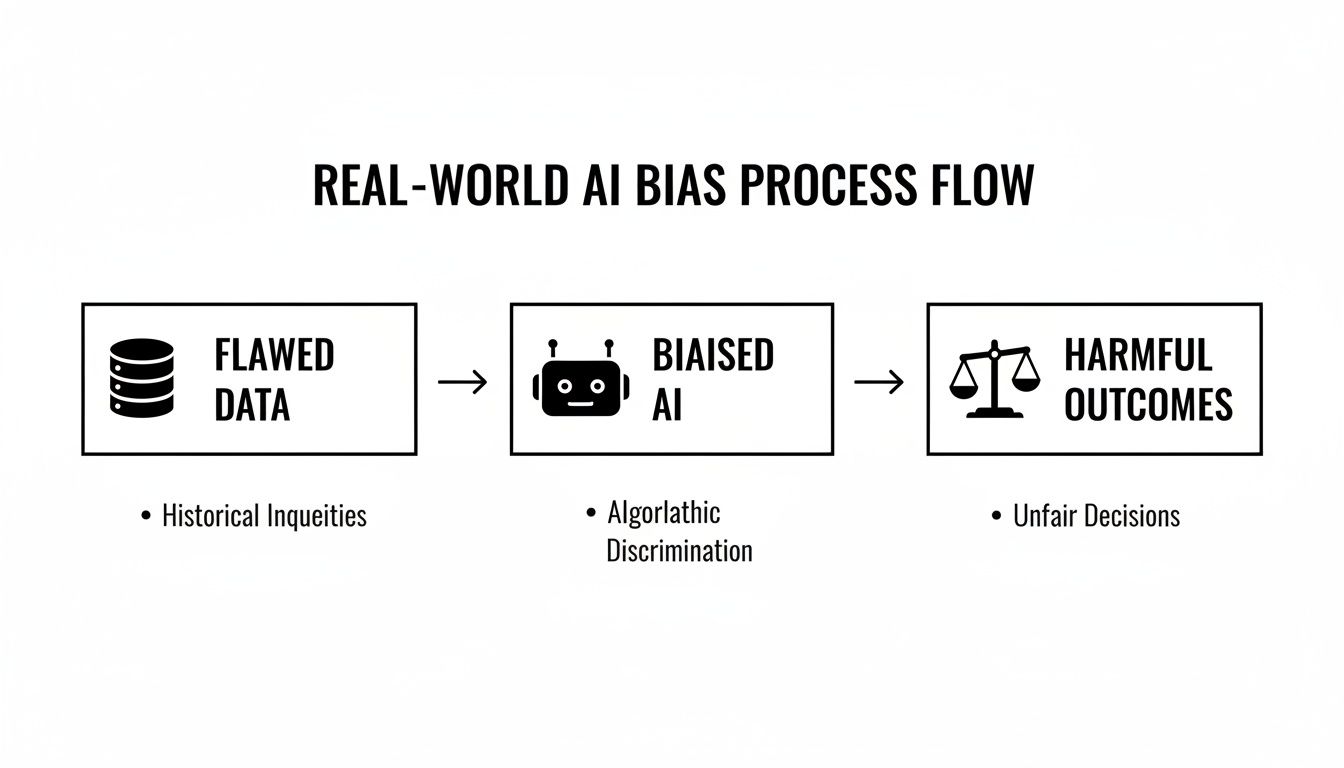

This diagram shows a simplified view of how this all connects. It starts with flawed data, which teaches the AI biased patterns, and ultimately leads to harmful outcomes for real people.

As you can see, the whole chain reaction often starts with the data. That makes it the first and most critical place to intervene.

Stage 1: Pre-Processing the Data

The most effective way to fight bias is to stop it before it even starts—before the model is ever trained. This initial phase is called pre-processing, and it’s all about cleaning, balancing, and preparing the raw data. If data is the textbook an AI learns from, this is our chance to make sure that book is fair.

A core technique here is dataset balancing. Imagine a dataset for a hiring tool where 80% of past applicants were men and only 20% were women. The AI will naturally learn to favor male candidates. To fix this, we can:

- Oversample: We could duplicate examples from the underrepresented group (female applicants) to give them more statistical weight.

- Undersample: Alternatively, we could remove some examples from the overrepresented group (male applicants) to even things out.
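Both strategies can be sketched in a few lines of Python using the 80/20 example above. The helper names are hypothetical, the records are made up, and a fixed seed keeps the random choices reproducible.

```python
import random
from collections import defaultdict


def _by_group(rows, group_of):
    groups = defaultdict(list)
    for row in rows:
        groups[group_of(row)].append(row)
    return groups


def oversample(rows, group_of, seed=0):
    """Duplicate randomly chosen rows from smaller groups until every group matches the largest."""
    rng = random.Random(seed)
    groups = _by_group(rows, group_of)
    target = max(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(members)
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


def undersample(rows, group_of, seed=0):
    """Keep a random subset of each group, sized to match the smallest group."""
    rng = random.Random(seed)
    groups = _by_group(rows, group_of)
    target = min(len(members) for members in groups.values())
    balanced = []
    for members in groups.values():
        balanced.extend(rng.sample(members, target))
    return balanced


def gender(row):
    return row["gender"]


# The 80/20 example: 8 male and 2 female applicants (made-up records).
applicants = [{"id": i, "gender": "male"} for i in range(8)] + [
    {"id": i, "gender": "female"} for i in range(8, 10)
]

over = oversample(applicants, gender)
under = undersample(applicants, gender)
print(len(over), sum(gender(r) == "female" for r in over))    # 16 8
print(len(under), sum(gender(r) == "female" for r in under))  # 4 2
```

Oversampling keeps every original record but repeats minority examples, which can encourage a model to memorize them; undersampling avoids duplicates but throws data away.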

Another strategy is to remove or re-engineer data points that act as proxies for protected attributes like race or gender. For example, removing zip codes from a loan application dataset can reduce the chance that a model learns and reinforces historical redlining patterns. Removal alone is rarely enough, though, because other features (or combinations of them) can carry the same signal. You can dive deeper into why this is so important in guides on data preparation for machine learning.
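A rough way to check whether a feature is acting as a proxy is to ask how well it alone predicts the protected attribute, compared with simply guessing the most common group. The sketch below does that with made-up rows; the feature names and the "majority guess per value" method are illustrative choices, not a standard test.

```python
from collections import Counter, defaultdict

# Made-up rows; "race" is the protected attribute we want to keep out of the model.
ROWS = [
    {"zip": "zip_a", "tenure": "long", "race": "white"},
    {"zip": "zip_a", "tenure": "short", "race": "white"},
    {"zip": "zip_a", "tenure": "long", "race": "white"},
    {"zip": "zip_b", "tenure": "short", "race": "black"},
    {"zip": "zip_b", "tenure": "long", "race": "black"},
    {"zip": "zip_b", "tenure": "short", "race": "white"},
]


def baseline_accuracy(rows, protected):
    """Accuracy of always guessing the most common protected group."""
    counts = Counter(row[protected] for row in rows)
    return counts.most_common(1)[0][1] / len(rows)


def proxy_accuracy(rows, feature, protected):
    """Accuracy of guessing the protected group from `feature` alone (majority group per value)."""
    by_value = defaultdict(Counter)
    for row in rows:
        by_value[row[feature]][row[protected]] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    return correct / len(rows)


base = baseline_accuracy(ROWS, "race")
for feature in ("zip", "tenure"):
    acc = proxy_accuracy(ROWS, feature, "race")
    print(f"{feature}: {acc:.2f} vs baseline {base:.2f}")
# zip: 0.83 vs baseline 0.67
# tenure: 0.67 vs baseline 0.67
```

A single-feature check like this misses proxies formed by combinations of features, so more thorough audits typically train a model to predict the protected attribute from all remaining features.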

Stage 2: In-Processing During Training

The next opportunity to step in is during the model's actual training phase. This is called in-processing. Here, we modify the learning algorithm itself, essentially giving the AI student specific instructions on how to study fairly.

A popular method is to add a “fairness constraint” to the algorithm’s objective. Normally, an AI is just told to maximize accuracy. With a fairness constraint, we add a new rule: "Be as accurate as you can, but not at the expense of fairness. Your predictions must not disproportionately harm any single group."

One expert described this as teaching the AI to "walk and chew gum at the same time." It forces the model to find a balance between pure performance and equitable outcomes. It might sacrifice a tiny fraction of its overall accuracy to ensure its error rates are similar across different demographics—a crucial trade-off for building responsible AI.
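Here is a toy sketch of that trade-off. Everything in it is an assumption made for illustration: a made-up history that favored group A through a proxy feature, a one-parameter model (the weight on that proxy), demographic parity (equal positive-prediction rates) as the fairness measure, and a brute-force search over a small grid standing in for real gradient-based training. The objective is error rate plus `lam` times the parity gap, so `lam` controls how much accuracy the model will give up for fairness.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    skill: float  # job-relevant feature
    proxy: float  # 1.0 or 0.0; a hypothetical feature that tracks group membership
    group: str    # "A" or "B"; used only to measure fairness, never as a model input
    label: int    # historical decision the model is trained to reproduce


# Made-up history in which group A was favored via the proxy feature.
SKILLS = (0.35, 0.55, 0.75, 0.95, 1.15)
DATA = [Applicant(s, 1.0, "A", y) for s, y in zip(SKILLS, (0, 1, 1, 1, 1))] + [
    Applicant(s, 0.0, "B", y) for s, y in zip(SKILLS, (0, 0, 0, 0, 1))
]
GRID = [i / 10 for i in range(11)]  # candidate weights for the proxy feature


def predict(a, w):
    return 1 if a.skill + w * a.proxy >= 1.0 else 0


def error_rate(data, w):
    return sum(predict(a, w) != a.label for a in data) / len(data)


def parity_gap(data, w):
    """Absolute difference in positive-prediction rates between groups A and B."""
    rates = {}
    for g in ("A", "B"):
        members = [a for a in data if a.group == g]
        rates[g] = sum(predict(a, w) for a in members) / len(members)
    return abs(rates["A"] - rates["B"])


def train(data, lam):
    """Pick the weight minimizing error + lam * parity gap over the grid."""
    return min(GRID, key=lambda w: error_rate(data, w) + lam * parity_gap(data, w))


for lam in (0.0, 1.0):
    w = train(DATA, lam)
    print(f"lam={lam}: w={w}, error={error_rate(DATA, w):.1f}, gap={parity_gap(DATA, w):.1f}")
# lam=0.0: w=0.5, error=0.0, gap=0.6
# lam=1.0: w=0.0, error=0.3, gap=0.0
```

The accuracy cost here is exaggerated because the historical labels themselves encode the bias; in real systems the size of the trade-off varies and has to be measured.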

Stage 3: Post-Processing the Predictions

Finally, even after a model is fully trained, we have one last chance to make adjustments. This is post-processing, and it works like a final review board, allowing us to tweak the AI's conclusions before they affect anyone.

For instance, say a model predicts a "risk score" for loan applicants. If we see its scores are systematically lower for one demographic group, we can apply different score thresholds to each group. This ensures that every group has a fair shot at being approved. This doesn't change the model's internal logic; it simply calibrates its final output to mitigate the biased impact.
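A minimal sketch of group-specific thresholds, assuming made-up scores and using equal approval rates as the target (one of several possible fairness criteria). Whether group-dependent cutoffs are permitted depends on the domain and jurisdiction, so this only illustrates the mechanics.

```python
import math


def group_thresholds(scores_by_group, target_rate):
    """Per group, pick the score cutoff that approves roughly `target_rate` of that group."""
    thresholds = {}
    for group, scores in scores_by_group.items():
        ranked = sorted(scores, reverse=True)
        k = max(1, math.ceil(target_rate * len(ranked)))  # how many to approve
        thresholds[group] = ranked[k - 1]  # ties at the cutoff may approve a few more
    return thresholds


def approval_rates(scores_by_group, thresholds):
    return {
        group: sum(s >= thresholds[group] for s in scores) / len(scores)
        for group, scores in scores_by_group.items()
    }


# Made-up model scores in which group B scores systematically lower.
SCORES = {"A": [0.9, 0.8, 0.7, 0.6], "B": [0.7, 0.6, 0.5, 0.4]}

single = {"A": 0.7, "B": 0.7}  # one shared cutoff for everyone
per_group = group_thresholds(SCORES, target_rate=0.5)
print(approval_rates(SCORES, single))     # {'A': 0.75, 'B': 0.25}
print(per_group)                          # {'A': 0.8, 'B': 0.6}
print(approval_rates(SCORES, per_group))  # {'A': 0.5, 'B': 0.5}
```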

The Human Element is Non-Negotiable

Beyond these technical fixes, two human-driven factors are absolutely critical.

- Diverse Teams: Having people from different backgrounds—gender, race, ethnicity, and life experiences—building AI systems is one of our best defenses. They bring different perspectives and are far more likely to spot potential blind spots or challenge flawed assumptions that a more homogeneous team might miss entirely.

- Continuous Oversight: AI is not a "set it and forget it" technology. Once a model is deployed, it needs to be continuously audited and monitored. The world changes, data drifts, and models can develop biased behavior over time. Human oversight provides the essential accountability that algorithms simply can't.

The Broader Fight for Fairer AI

Fixing AI bias isn't just about tweaking algorithms or scrubbing datasets. It's a much bigger challenge that requires a collective push from all corners of society. As we’ve seen, the consequences of biased AI can be genuinely harmful, which is why a movement is building to create rules, demand transparency, and hold developers accountable.

This conversation has moved far beyond the walls of tech companies. It's now a global dialogue that includes governments, advocacy groups, and you. We're finally shifting from just pointing out the problems to actually building a framework for a fairer future.

The Rise of Regulation and Governance

Governments worldwide are no longer sitting on the sidelines. The unease around AI bias is palpable, with a recent study showing that 55% of both Americans and AI specialists are deeply concerned about AI systems making discriminatory decisions.

This widespread anxiety is spurring new legislation. Global regulatory efforts, and the work organizations are doing to prepare for them (AI Act readiness, for example), are creating essential guardrails for ethical development. Landmark policies like the EU’s AI Act and various U.S. executive orders are establishing new standards, especially for high-risk systems used in hiring, lending, and law enforcement.

"Regulation isn't about stifling innovation," an industry analyst recently noted. "It's about building guardrails so that innovation doesn't drive us off a cliff. These policies send a powerful signal: companies can't just unleash powerful AI without first considering its impact on people."

Getting a handle on these new rules requires a structured approach. To learn more about how organizations are adapting, check out our guide on building an AI governance framework.

Setting Standards for Fairness

One of the toughest parts of fighting bias is defining what "fair" actually means in a way we can measure. To get around this, regulators and courts often lean on established statistical benchmarks.

A classic example comes from employment law. The "four-fifths rule," also known as the 80% rule, is a long-standing guideline used to flag potential discrimination in hiring practices. Here’s how it works:

- First, you calculate the selection rate for the group with the highest rate of success (e.g., if 10% of male applicants get hired, that's your benchmark).

- Next, you compare the selection rates of other groups (like women or different racial minorities) against that benchmark.

- If another group's selection rate falls below 80% of the most successful group’s rate (in the example above, below 8%), it raises a red flag for potential adverse impact.

This rule doesn't automatically prove discrimination, but it’s an incredibly useful diagnostic tool. If an AI hiring tool consistently fails this test, it could open the door to lawsuits and investigations from agencies like the Equal Employment Opportunity Commission (EEOC). This gives companies a concrete target to hit when auditing their own AI systems.
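The three steps translate directly into a few lines of Python. This sketch uses made-up applicant counts and Python's `fractions.Fraction`, so that a ratio sitting exactly at 0.8 is not misjudged by floating-point rounding; the function name `adverse_impact` is hypothetical.

```python
from fractions import Fraction

FOUR_FIFTHS = Fraction(4, 5)


def adverse_impact(applied, selected, threshold=FOUR_FIFTHS):
    """Flag groups whose selection rate is below `threshold` times the highest group's rate."""
    rates = {g: Fraction(selected[g], applied[g]) for g in applied}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group had any selections; the ratio is undefined")
    return {
        g: {"rate": rate, "impact_ratio": rate / best, "flag": rate / best < threshold}
        for g, rate in rates.items()
    }


# Made-up numbers: 100 men apply and 10 are hired, the 10% benchmark from the text.
applied = {"men": 100, "women": 80}
selected = {"men": 10, "women": 6}

for group, result in adverse_impact(applied, selected).items():
    print(group, float(result["rate"]), float(result["impact_ratio"]), result["flag"])
# men 0.1 1.0 False
# women 0.075 0.75 True
```

Women's 7.5% selection rate falls below the 8% line, so the tool would be flagged for a closer look, not automatically judged discriminatory.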

Empowering the Public with Transparency

Ultimately, creating fairer AI isn't a job just for governments or corporations—it's for everyone. Real accountability only happens when the people affected by these systems can see how they work. This is where transparency tools become so important.

One of the most valuable innovations here is the concept of "model cards." Think of them as a nutrition label for an AI model. In a simple, standardized format, a model card explains:

- Training Data: What information was the model trained on?

- Intended Use: What is this model built to do (and just as importantly, what is it not for)?

- Performance Metrics: How does it perform across different demographic groups?

- Known Limitations: Where is the model likely to stumble or exhibit bias?
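A model card is ultimately a structured document, so it maps naturally onto a small data type. This sketch assumes a hypothetical `ModelCard` class with one field per section listed above, plus a name and an explicit "not for" field. Real model card templates typically include more sections, and every value in the usage example is a placeholder.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """A minimal, hypothetical model card: training data, intended use, metrics, limitations."""
    model_name: str
    training_data: str
    intended_use: str
    out_of_scope_use: str
    performance_by_group: dict[str, str] = field(default_factory=dict)
    known_limitations: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Format the card as plain text, one section per heading."""
        lines = [
            f"Model card: {self.model_name}",
            f"Training Data: {self.training_data}",
            f"Intended Use: {self.intended_use}",
            f"Not For: {self.out_of_scope_use}",
            "Performance Metrics:",
        ]
        lines += [f"  {group}: {metric}" for group, metric in self.performance_by_group.items()]
        lines.append("Known Limitations:")
        lines += [f"  - {item}" for item in self.known_limitations]
        return "\n".join(lines)


card = ModelCard(
    model_name="resume-screener-demo",
    training_data="Placeholder: describe the source, time span, and demographics of the data",
    intended_use="Ranking applications for human review",
    out_of_scope_use="Making final hiring decisions without a human",
    performance_by_group={"women": "error rate from audit", "men": "error rate from audit"},
    known_limitations=["Placeholder: groups that are underrepresented in the training data"],
)
print(card.render())
```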

By making this information accessible, companies give researchers, journalists, and everyday users the power to scrutinize their AI. It lets us all ask smarter questions and push for better, more equitable technology. This collective demand for openness is one of the most powerful forces we have for building an AI ecosystem that truly works for everyone.

AI Bias FAQ: Your Questions Answered

Let's dig into some of the most common questions that come up when we talk about AI bias. The topic can seem a bit dense at first, but the core concepts are actually quite intuitive. These answers should help clear up some of the trickier points and give you a solid foundation for thinking about fairer AI.

Can We Ever Get Rid of AI Bias Completely?

In a word? Probably not. But that's not as pessimistic as it sounds. It's just a realistic acknowledgment that AI systems are trained on data from our world, and our world is messy—full of historical, social, and systemic biases.

The goal with responsible AI isn't to chase an impossible standard of perfection. It's about a deep-seated commitment to continuous improvement. This is a mindset focused on vigilance: constantly looking for, measuring, and actively working to reduce unfairness at every single step of the AI lifecycle.

Think of it less like a bug you can patch and forget, and more like tending a garden. You can’t just pull the weeds once. You have to keep an eye out for new ones, nurture the plants you want to grow, and accept that it’s an ongoing process. Fair AI requires the same dedication—using diverse data, transparent algorithms, and consistent human oversight to keep the system healthy.

Are Open-Source AI Models More Biased Than Proprietary Ones?

That’s a great question, and there's no simple answer—you can make a strong argument for both sides.

On one hand, the transparency of open-source models is a massive advantage. With the code available for anyone to see, a global community of developers and researchers can poke and prod at it, flagging biases and proposing fixes. This "given enough eyeballs, all bugs are shallow" philosophy can really speed up the process of finding and rooting out problems.

But there's a flip side. These models are often trained on colossal datasets scraped from the unfiltered chaos of the internet. That means they can soak up all the toxic stereotypes, prejudices, and outright falsehoods that live online.

A proprietary model from a large company might be built on cleaner, more carefully curated data. But its inner workings are a black box. You have no way to independently verify their claims of fairness; you just have to trust them.

So, in the end, "open-source" vs. "proprietary" isn't the real issue. What truly matters are the specific, documented, and verifiable steps taken to train, test, and audit the model for fairness.

How Can I Spot AI Bias in My Everyday Apps?

You don't need a PhD in machine learning to see the effects of AI bias. It's all about developing an eye for patterns and questioning the results you're given. Once you start looking, you’ll be surprised how often it shows up.

Here are a few common red flags to watch for:

- Image Generators: Ask an AI to create an image of a "CEO," a "software engineer," or a "nurse." If the results overwhelmingly show one gender or race, you're seeing bias in action.

- Recommendation Engines: Does your news app seem to only feed you stories from one side of the political aisle? Does your streaming service constantly suggest movies that play into tired stereotypes?

- Job and Search Platforms: Pay attention if a job site seems to push certain roles based on your name or demographic assumptions. Or if an image search for a profession returns a shockingly uniform set of faces.

When you see these skewed outcomes, don’t just scroll past. Question them. Many platforms have feedback tools, and reporting biased outputs gives developers concrete data to help them fix the underlying problem. As consumers, we have power. Supporting companies that are transparent about their AI ethics is one of the best ways to encourage the whole industry to do better.

At YourAI2Day, we believe that understanding AI is the first step toward shaping its future for the better. We provide the news, tools, and insights you need to stay informed in this rapidly changing field. Explore our resources and join the conversation at https://www.yourai2day.com.