Transformer Architecture Explained: Your Ultimate Guide for Beginners

Ever wondered what's really going on inside an AI like ChatGPT? It’s not magic, but a brilliant piece of engineering called the Transformer architecture. Think of it as the super-smart engine that powers modern AI, allowing it to understand and write text that feels genuinely human. It’s a total game-changer that threw out the old, slow rulebook of how machines process language.

Let's dive in and see how this incredible technology actually works, in simple terms.

A Breakthrough in Understanding Language

How can an AI read a dense paragraph and immediately get the point? The answer lies in the Transformer. Before Transformers came along, language models worked a bit like someone reading a book through a keyhole: they took in only one word at a time. This step-by-step approach meant they often lost the plot, forgetting the beginning of a sentence by the time they reached the end.

This was a huge roadblock. For instance, in a sentence like, "The cat, which had been sleeping on the warm windowsill all afternoon, finally woke up," an older model would struggle to connect "cat" with "woke up" because of all the words in between. The Transformer blew that limitation wide open.

The Power of Seeing Everything at Once

Instead of processing word by word, Transformers look at the entire sentence all at once. This ability to see the whole picture was the big "aha!" moment for modern AI. It allows the model to instantly weigh how important every word is to every other word, no matter where they are in the sentence.

This fresh approach was unveiled by Google researchers in June 2017 in a paper that changed everything: "Attention Is All You Need." Their design dropped the sequential processing of older models entirely and relied instead on a mechanism called self-attention. That mattered because training could now be massively parallelized across hardware, which dramatically sped up AI development.

Expert Opinion: "The shift to parallel processing wasn't just a minor tweak; it was a fundamental change in how we thought about modeling language," says AI researcher Dr. Eva Rostova. "It unlocked our ability to scale models to sizes that were once pure science fiction, leading directly to the powerful AI assistants we use every day."

What This Means for AI

To give you a better sense of this shift, here's a quick comparison of the old way versus the new.

Transformer vs Traditional Models: A Quick Comparison

| Feature | Transformer Architecture | RNN / LSTM Architecture |
|---|---|---|
| Data Processing | Parallel (looks at the whole sequence at once) | Sequential (one element at a time) |
| Core Mechanism | Self-attention mechanism | Recurrent connections and gating |
| Context Handling | Excellent at long-range dependencies | Struggles with long-range context (vanishing gradients) |
| Training Speed | Highly parallelizable, much faster to train | Slower due to sequential nature |
| Primary Use Cases | State-of-the-art NLP, vision, audio | Older machine translation, sentiment analysis |

This table really highlights why Transformers took over. They're just built better for the scale and complexity of modern data.

By understanding the relationships between all words simultaneously, Transformers build a much richer, more nuanced model of language. This capability is at the heart of Natural Language Processing, the field dedicated to teaching computers to understand and work with human language.

Let's look at some practical benefits:

- Contextual Awareness: A Transformer can tell "bank" in "river bank" apart from "bank" in "bank account" by looking at the other words around it.

- Long-Range Dependencies: It correctly connects a pronoun back to a noun that appeared much earlier in a long document, solving a classic AI headache.

- Efficiency in Training: Processing data in parallel lets training spread across many processors at once. This can dramatically cut the time it takes to train massive, powerful models.

Ultimately, getting to grips with the Transformer architecture is the first step to truly understanding how today's most advanced AI systems think, write, and create. It’s the foundation that the entire modern AI boom is built on.

Why We Had to Move Beyond RNNs

To really get why transformers changed everything, you have to understand the world they were born into. Before 2017, if you were working with language data, you were almost certainly using Recurrent Neural Networks (RNNs). The logic seemed sound: RNNs read a sentence one word at a time, from left to right, just like we do.

This step-by-step process was intuitive, but it was also a huge bottleneck. An RNN would process the first word, create a hidden state (think of it as a summary), and then feed that summary forward as it processed the second word. It kept this up, hoping to carry the full context of the sentence along the chain.
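To make that chain concrete, here is a minimal sketch of a one-unit RNN in Python. The weights `W_X` and `W_H` and the input numbers are made-up values for illustration and do not come from any real model. The point is that each hidden state depends on the previous one, so the loop cannot be run in parallel.

```python
import math

# Hypothetical toy weights for a one-unit RNN (real RNNs use weight matrices).
W_X = 0.5   # weight applied to the current input
W_H = 0.8   # weight applied to the previous hidden state

def rnn_forward(inputs):
    """Run a scalar RNN over a sequence, returning every hidden state."""
    h = 0.0          # the running "summary" starts empty
    states = []
    for x in inputs:
        # Each new summary mixes the current word with the old summary,
        # so step t cannot start until step t-1 has finished.
        h = math.tanh(W_X * x + W_H * h)
        states.append(h)
    return states

# Pretend each word has already been turned into a single number.
word_values = [1.0, -0.5, 0.25, 0.75]
print(rnn_forward(word_values))
```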

The Memory Bottleneck

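The problem is that this process works like a game of telephone. Imagine trying to recall the exact first word of a long paragraph you just read. By the time you get to the end, that initial context has been watered down or lost entirely. RNNs suffered from a similar issue, rooted in a classic deep learning challenge known as the vanishing gradient problem. During training, the error signal that teaches the model is passed backward through every step of the chain and shrinks a little each time. By the time it reaches the early words, it is too weak to teach the model much about them.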

This made it incredibly difficult for the model to connect a pronoun at the end of a long sentence to the noun it was referring to way back at the beginning. The model’s "memory" was just too short to handle these long-range dependencies, which are absolutely essential for understanding complex language.
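A little arithmetic shows why the signal fades over long distances. The sketch below assumes, purely for illustration, that the error signal is scaled by the same factor (0.5) at every step it travels back. Real networks vary, but whenever the factor stays below 1, the signal shrinks geometrically.

```python
def gradient_after(steps, factor=0.5):
    """Size of an error signal after being passed back through `steps` time steps,
    assuming (for illustration) it is scaled by the same `factor` at each step."""
    signal = 1.0
    for _ in range(steps):
        signal *= factor
    return signal

for distance in (1, 5, 10, 20):
    print(f"{distance:>2} words back: {gradient_after(distance):.7f}")
```

Twenty words back, the signal is below one millionth of its starting strength (0.5^20 is about 0.00000095).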

Expert Opinion: "The sequential nature of RNNs was both their defining feature and their biggest weakness," notes AI architect Kenji Tanaka. "While they were good at capturing short-term context, their inability to parallelize training and their struggle with long-term memory meant the entire field of AI was hitting a performance wall. We needed a way to break free from that linear constraint."

LSTMs: A Clever Workaround, Not a Solution

Clever researchers came up with fixes, most famously the Long Short-Term Memory (LSTM) network. LSTMs are a beefed-up version of RNNs, equipped with internal "gates" that help them decide what information is important enough to keep and what can be forgotten. This greatly eased the vanishing gradient problem and let models work with much longer sentences.
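Here is a minimal single-unit LSTM step in Python that follows the standard forget/input/output-gate formulation. All parameters are hypothetical toy numbers: each gate gets one input weight, one hidden weight, and one bias instead of learned matrices. Notice the separate cell state `c`, which is updated mostly by addition. Notice also that the loop is still strictly sequential.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy parameters: (input weight, hidden weight, bias) per gate.
PARAMS = {
    "forget":    (0.6, 0.4, 1.0),  # how much old memory to keep
    "input":     (0.5, 0.3, 0.0),  # how much new information to write
    "output":    (0.7, 0.2, 0.0),  # how much of the memory to reveal
    "candidate": (0.9, 0.5, 0.0),  # the new content that could be written
}

def gate(name, x, h, squash):
    w_x, w_h, b = PARAMS[name]
    return squash(w_x * x + w_h * h + b)

def lstm_forward(inputs):
    h, c = 0.0, 0.0  # hidden state and cell ("long-term memory") state
    for x in inputs:
        f = gate("forget", x, h, sigmoid)
        i = gate("input", x, h, sigmoid)
        o = gate("output", x, h, sigmoid)
        g = gate("candidate", x, h, math.tanh)
        c = f * c + i * g        # keep part of the old memory, add new content
        h = o * math.tanh(c)     # expose a filtered view of the memory
    return h, c

print(lstm_forward([1.0, -0.5, 0.25, 0.75]))
```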

LSTMs were a huge step forward, building on decades of research. The groundwork was laid in the early 90s, and the formal introduction of LSTMs came in 1997, specifically to solve the memory issues of older RNNs. If you're curious, you can explore the complete AI timeline from the 1990s to today to see the full evolution.

But even with these upgrades, LSTMs were still stuck in that one-word-at-a-time traffic jam. They had to process text sequentially, which made training on massive datasets painfully slow and expensive. The AI community needed something fundamentally different—an architecture that could look at the entire sentence at once. This need created the perfect opening for the transformer to walk onto the scene.

Exploring the Core Components of a Transformer

Now that we know why the AI world was hungry for something new, let’s pop the hood and look at the engine that makes a transformer go. The whole architecture is like a sophisticated assembly line, where each station has a very specific job. When all these parts work in harmony, they create the stunning language understanding we see in today's most advanced AI.

We’re going to break down the key machinery piece by piece, starting with the undisputed star of the show.

Self-Attention: The Art of Contextual Awareness

The absolute heart of the transformer is the self-attention mechanism. This is the magic that lets the model weigh the importance of different words in a sentence when it's trying to understand one specific word. It’s what gives the transformer its uncanny ability to grasp context.

Think of it like this: if you read the sentence, "The dog couldn't catch the cat because it was too fast," your brain instantly knows "it" refers to the "cat." How? You didn't just read the words; you instinctively made connections.

Self-attention does the same thing. For every single word, it looks at all the other words in the sentence and asks, "How relevant are you to understanding this specific word?" This lets it figure out that in "The bee stung the man because it was angry," the word "it" points back to the "bee," not the "man."

Multi-Head Attention: Seeing from Different Perspectives

A single self-attention mechanism is powerful, but what if you could run several of them at once, each looking for different kinds of connections? That's the genius behind Multi-Head Attention. Instead of one attention process, a transformer runs many of them in parallel.

Each "head" acts like a specialist on a team, analyzing the sentence from a unique angle:

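- Practical Example: One head might focus purely on the grammatical structure, such as how nouns, verbs, and adjectives link together. Another could be an expert in identifying subjects and objects, figuring out who did what to whom. A third head might specialize in spotting semantic relationships, like how "king," "queen," and "royal" are all connected. These roles are illustrative, though. Nobody assigns them by hand: each head learns its own focus during training, and real heads are rarely this neatly specialized.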

By combining the insights from all these different attention heads, the model gets a much richer, more layered understanding of the text. It's not just seeing one set of connections; it's seeing multiple sets of relationships at the same time, building a far more complete picture of the sentence's meaning.
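Here is a small pure-Python sketch of the mechanics. It assumes the layout from the original paper. Each head gets its own Query/Key/Value projections and runs scaled dot-product attention, and then the heads' outputs are concatenated. The random weights, the sizes (`D_MODEL = 4`, `NUM_HEADS = 2`), and the helper names are illustrative assumptions. A real model learns its weights and also applies a final output projection, which is omitted here.

```python
import math
import random

random.seed(0)
D_MODEL, NUM_HEADS = 4, 2
D_HEAD = D_MODEL // NUM_HEADS  # each head works in a smaller subspace

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    d_k = len(k[0])
    scores = [[sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
              for q_row in q]
    return matmul([softmax(row) for row in scores], v)

def random_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

# Hypothetical projection weights: each head has its own Q, K, V matrices.
heads = [{name: random_matrix(D_MODEL, D_HEAD) for name in ("q", "k", "v")}
         for _ in range(NUM_HEADS)]

def multi_head_attention(x):
    per_head = []
    for w in heads:
        q, k, v = matmul(x, w["q"]), matmul(x, w["k"]), matmul(x, w["v"])
        per_head.append(attention(q, k, v))
    # Concatenate each word's per-head outputs back into one D_MODEL-wide vector.
    return [[value for head_out in per_head for value in head_out[i]]
            for i in range(len(x))]

sentence = random_matrix(3, D_MODEL)  # three "words", each a D_MODEL-dim vector
result = multi_head_attention(sentence)
print(len(result), len(result[0]))
```

Splitting `D_MODEL` across heads keeps the total cost close to that of a single full-size head, while letting each head compare words in its own learned subspace.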

Expert Opinion: "Multi-Head Attention is a brilliant way to ensure the model doesn't get tunnel vision," explains Dr. Rostova. "A single attention mechanism might latch onto one obvious relationship, but having multiple heads forces the model to explore diverse connections, capturing everything from syntactic links to subtle semantic nuances."

Positional Encodings: Adding a Sense of Order

There's a big problem with looking at all words at once: you lose the original word order. If you just toss words into a bag, "The dog chased the cat" and "The cat chased the dog" look identical, even though they mean completely different things.

This is where Positional Encodings come in. Before any words enter the attention mechanism, the model adds a position vector to each word that signals its exact position in the sentence. This vector is the same size as the word's own embedding. It's like stamping each word with a unique sequence number. This way, the model always knows that "The" is in position 1, "dog" is in position 2, and so on, preserving the crucial sequence information.
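The original transformer paper built these position vectors from fixed sine and cosine waves. Even dimensions get sin(pos / 10000^(2i/d)), and odd dimensions get the matching cosine. The sketch below follows that formula. The tiny 4-dimensional size and the toy embedding values are assumptions for illustration (the paper used 512 dimensions). Positions are counted from 0 here, and many later models learn their position vectors instead.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal position vector, following "Attention Is All You Need"."""
    vec = []
    for dim in range(d_model):
        pair = dim // 2  # dimensions 2i and 2i+1 share a frequency
        angle = position / (10000 ** (2 * pair / d_model))
        vec.append(math.sin(angle) if dim % 2 == 0 else math.cos(angle))
    return vec

def add_positions(embeddings):
    """Add each word's position vector to its embedding, element by element."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(emb, positional_encoding(pos, d_model))]
        for pos, emb in enumerate(embeddings)
    ]

# Toy 4-dimensional embedding: the same word "dog" appearing at two positions.
dog = [0.1, 0.2, 0.3, 0.4]
print(add_positions([dog, dog]))  # same word, different final vectors
```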

The Feed-Forward Network: The Thinking Hub

After the attention mechanisms have done their job and figured out all the contextual relationships, the newly enriched information for each word is passed to a Feed-Forward Network (FFN). You can think of this as the processing or "thinking" hub for each word token.

The FFN is a small, standard two-layer neural network. It expands each word's vector to a larger size (four times larger in the original paper), passes it through an activation function such as ReLU, and then projects it back down to its original size. This nonlinear step helps the model digest the relationships identified by the attention layers and turn them into a more refined, useful representation.

Critically, the same FFN, with exactly the same weights, is applied to each word independently. Because no word has to wait for another, all positions can be processed in parallel, which helps make training these huge models feasible.
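A minimal sketch of that position-wise feed-forward step is shown below. It assumes the original paper's shape: expand, apply ReLU, project back down. The sizes (4 → 8 → 4, echoing the paper's 4× expansion from 512 to 2048) and the random weights are illustrative assumptions. Note that one set of weights is reused for every word.

```python
import random

random.seed(1)
D_MODEL, D_FF = 4, 8  # toy sizes; the original paper used 512 and 2048

def random_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

# One set of hypothetical weights, shared by every word position.
W1, B1 = random_matrix(D_MODEL, D_FF), [0.0] * D_FF
W2, B2 = random_matrix(D_FF, D_MODEL), [0.0] * D_MODEL

def linear(x, w, b):
    """Compute x @ w + b for a single vector x."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]

def relu(v):
    return [max(0.0, a) for a in v]

def feed_forward(x):
    """FFN(x) = ReLU(x W1 + b1) W2 + b2, applied to one word vector."""
    return linear(relu(linear(x, W1, B1)), W2, B2)

# Each word is processed independently, so this loop could run in parallel.
words = [[0.5, -0.1, 0.3, 0.9], [0.2, 0.4, -0.6, 0.1]]
outputs = [feed_forward(w) for w in words]
print(outputs)
```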

These core components (self-attention, multi-head attention, positional encodings, and feed-forward networks) are the fundamental building blocks of a transformer. Positional encodings are added once, at the input. Each layer then pairs multi-head attention with a feed-forward network, and residual connections and layer normalization around each sub-layer keep training stable. In practice, large models stack dozens of these layers on top of each other; GPT-3, for example, uses 96.

How the Self-Attention Mechanism Really Works

If the transformer is the engine driving modern AI, then the self-attention mechanism is its turbocharger—the piece that gives it a massive power boost. This is where the model stops just processing words in a sequence and starts to understand the complex web of relationships that gives language its meaning. It’s the secret ingredient that lets transformers grasp context on a whole new level.

Let's break it down with a simple analogy. Imagine you're in a library writing a research paper on "ancient Rome." That topic is your Query. To find information, you scan the titles on every bookshelf—those are the Keys. When a Key (a book title) lines up with your Query (your topic), you grab that book and use its contents, which are the Value.

Self-attention operates on a very similar principle, but its world is a single sentence. For every word, the model generates three separate vectors: a Query, a Key, and a Value.

Creating the Core Ingredients: Q, K, and V

For each word token that enters the attention layer, the model multiplies its embedding by three distinct, trainable weight matrices to create these vectors.

- Query (Q): This is the current word's perspective. Think of it as the word asking, "Who else in this sentence is important to me?"

- Key (K): This vector acts as each word's identity or label. It's like a signpost that says, "Here's what I'm about." The Query vector for one word will be compared against the Key vectors of all the other words.

- Value (V): This vector holds the real substance of the word. Once a Query finds a Key it's interested in, it's the associated Value vector that actually gets used in the next step.

So, for any given word, its Query vector scans all the other words' Key vectors to see who it should pay attention to. The strength of those connections determines how much of each word's Value gets mixed into the final output.
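Here is a sketch of that projection step for three toy words. The 2-dimensional embeddings and the weight matrices `W_Q`, `W_K`, and `W_V` are made-up numbers for illustration. In a trained model, these matrices are learned and the vectors have hundreds of dimensions.

```python
def project(vector, matrix):
    """Multiply a row vector by a matrix (vector @ matrix)."""
    return [sum(v * row[j] for v, row in zip(vector, matrix)) for j in range(len(matrix[0]))]

# Hypothetical 2-d embeddings for three words.
embeddings = {"robot": [1.0, 0.0], "ball": [0.0, 1.0], "it": [0.5, 0.5]}

# Hypothetical trainable weight matrices (2 x 2 here).
W_Q = [[1.0, 0.0], [0.0, 2.0]]
W_K = [[0.5, 0.5], [0.0, 1.0]]
W_V = [[1.0, 1.0], [1.0, -1.0]]

# Every word gets its own Query, Key, and Value from the same three matrices.
qkv = {
    word: {"query": project(e, W_Q), "key": project(e, W_K), "value": project(e, W_V)}
    for word, e in embeddings.items()
}
print(qkv["it"])
```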

Expert Opinion: "The creation of Query, Key, and Value vectors is a beautifully simple yet powerful idea," says Kenji Tanaka. "It transforms the abstract problem of 'understanding context' into a concrete mathematical process of vector comparisons. This allows the model to learn these relationships dynamically during training, figuring out on its own what parts of a sentence are important."

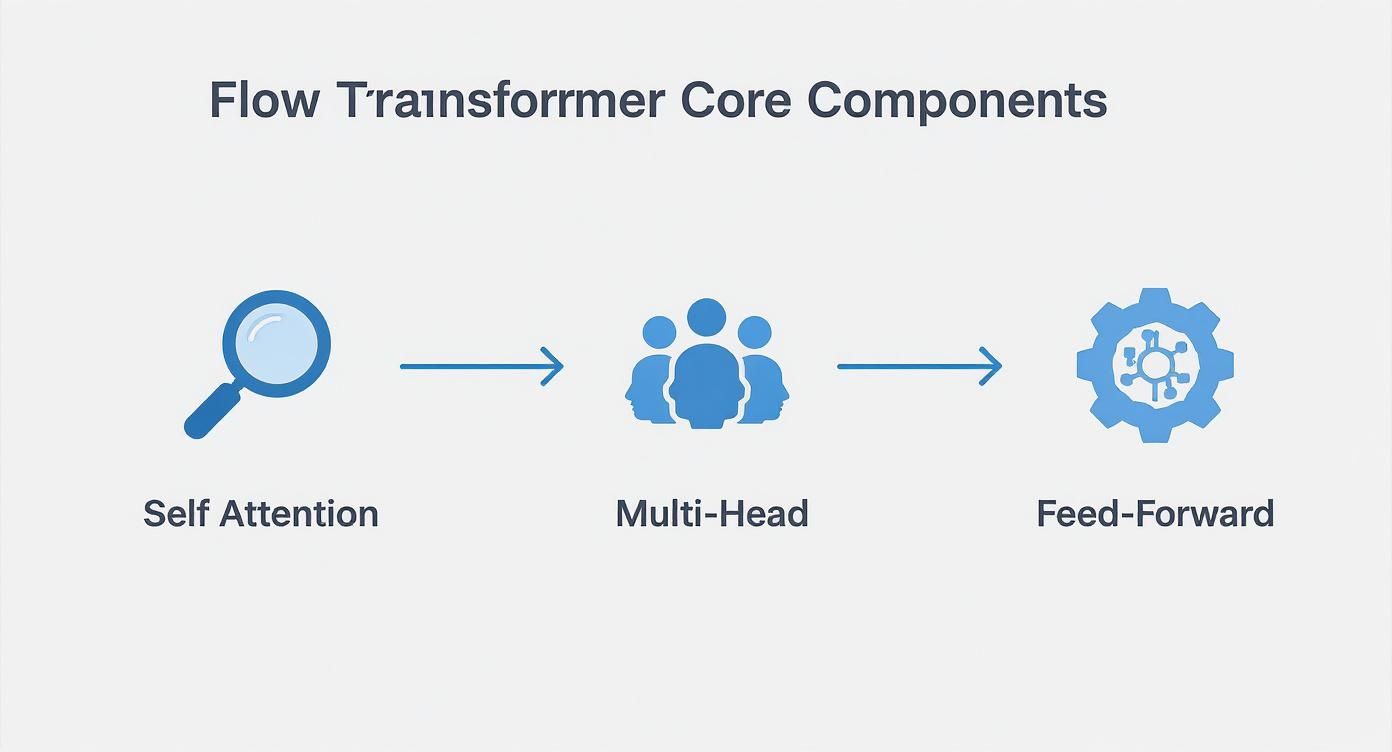

The diagram below shows how information flows through the core components of a transformer layer, starting with this very self-attention mechanism.

This visual gives you a sense of how self-attention feeds into multi-head attention and then a feed-forward network, all of which form the basic building block of a transformer.

Calculating the Attention Scores

With our Q, K, and V vectors in hand, the real work starts. The process unfolds in just a few steps, turning abstract word relationships into numbers the model can actually work with.

Let’s use a classic example: "The robot couldn't lift the ball because it was too heavy." We need the model to figure out what "it" refers to. The Query vector for "it" will now be measured against the Key vector of every other word.

- Score Calculation: The model takes the dot product of the Query vector for "it" with the Key vector of every other word in the sentence (including itself). A higher dot product means higher relevance. The score for ("it", "ball") will almost certainly be much higher than for ("it", "robot").

- Scaling: These raw scores are then divided by a scaling factor: the square root of the dimension of the key vectors (√64 = 8 in the original paper). Without this step, long vectors produce very large dot products. Those large scores push the softmax toward all-or-nothing outputs with tiny gradients, which makes training unstable.

- Softmax Function: The scaled scores are run through a softmax function. This is a crucial step that transforms the scores into a set of probabilities that all add up to 1. Words with high relevance get a high probability (e.g., "ball" might get 0.85), while irrelevant words get a score close to zero (e.g., "The" might get 0.01).
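The three steps can be traced with toy numbers. The Query and Key vectors below are invented so that "ball" scores highest; in a real model they come from learned projections. The code computes softmax(q · k / √d_k) over all the Keys.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    d_k = len(query)
    raw = [sum(q * k for q, k in zip(query, key)) for key in keys]  # 1. dot products
    scaled = [s / math.sqrt(d_k) for s in raw]                      # 2. divide by sqrt(d_k)
    return softmax(scaled)                                          # 3. softmax

words = ["The", "robot", "ball", "it"]
# Hypothetical 4-d Key vectors for each word, and a Query vector for "it".
keys = [
    [0.1, 0.0, 0.1, 0.0],  # The
    [1.0, 0.5, 0.0, 0.0],  # robot
    [0.0, 0.5, 3.0, 2.0],  # ball
    [0.5, 0.5, 0.5, 0.5],  # it
]
query_it = [0.0, 0.5, 2.0, 1.5]

for word, weight in zip(words, attention_weights(query_it, keys)):
    print(f"{word:>6}: {weight:.2f}")
```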

Building a New Representation

The final step is to build a new, context-aware representation for our word "it." This is done by multiplying the Value vector of each word in the sentence by its softmax score, and then simply adding all those weighted vectors together.

So what just happened? The new vector representing "it" is now made up mostly of the Value from "ball" (weighted 0.85 in our example), with only tiny contributions from the other words. The model has now mathematically encoded the understanding that "it" refers to the "ball."

This entire process happens simultaneously for every single word in the sentence, allowing each one to build a rich, contextual understanding by "attending" to its neighbors. In practice, the whole sentence is handled at once with a few matrix multiplications. Because the Query, Key, and Value weights are learned, training gradually teaches the model which relationships matter.
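Putting the steps together, the computation for every word at once is Attention(Q, K, V) = softmax(QKᵀ/√d_k)V, the formula from the original paper. Below is a pure-Python sketch with made-up Q, K, and V matrices, one row per word. A real implementation would use a tensor library so the matrix products run in parallel on a GPU.

```python
import math

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, returning one output row per word."""
    d_k = len(K[0])
    output = []
    for q in Q:  # each word's row is independent of the others
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of all Value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output

# Hypothetical Q, K, V for a 3-word sentence (d_k = 2).
Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

for row in scaled_dot_product_attention(Q, K, V):
    print([round(x, 2) for x in row])
```

Because the weights in each row sum to 1, every output is a blend of the Value vectors: words whose Keys match the Query contribute most.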

Meet the Famous Transformer Family: GPT, BERT, and More

The original "vanilla" transformer architecture was just the beginning—a brilliant blueprint. Once researchers grasped its power, they began adapting it for specific jobs, giving rise to a whole family of specialized models.

Think of it like a car chassis. You can use the same fundamental frame to build a lightning-fast race car, a rugged pickup truck, or a spacious family van. They all share a common origin but are engineered for very different purposes.

The key difference between these famous models often comes down to a simple choice: which parts of the original encoder-decoder structure do they use? This decision splits the family into three main branches.

BERT: The Expert Researcher

First up are the encoder-only models, and the most famous member of this group is BERT (Bidirectional Encoder Representations from Transformers). These models are the undisputed masters of understanding text.

By using only the encoder stack, BERT is designed to read an entire chunk of text at once and build a deep, contextual map of how every word relates to every other word. It’s "bidirectional," meaning it looks at words from both the left and the right to grasp their true meaning in context.

Imagine an expert researcher poring over a document. They don't just read it left-to-right; they jump back and forth, connecting ideas and absorbing the complete picture. That’s BERT.

- Real-World Example: Google Search. When you type a query, BERT helps the search engine understand the intent behind your words, not just the keywords. It gets that "2024 Brazil traveler to USA need a visa" is a specific question about visa rules, which helps it deliver far more relevant results.
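One way to picture "bidirectional" is as an attention mask: a grid saying which words each word is allowed to look at. The sketch below assumes a 4-word phrase. Encoder-style (BERT-like) attention lets every word see every other word, while decoder-only models such as GPT hide future words. The helper names are illustrative.

```python
def bidirectional_mask(n):
    """Encoder-style: every position may attend to every position."""
    return [[1] * n for _ in range(n)]

def causal_mask(n):
    """Decoder-style: position i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

words = ["bank", "of", "the", "river"]
for name, mask in (("bidirectional", bidirectional_mask(4)), ("causal", causal_mask(4))):
    print(name)
    for word, row in zip(words, mask):
        visible = [w for w, allowed in zip(words, row) if allowed]
        print(f"  {word:>5} sees {visible}")
```

In the causal grid, "bank" sees only itself, so a strictly left-to-right reader cannot yet use "river" to decide which kind of bank is meant. The bidirectional grid gives BERT that look-ahead.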

GPT: The Creative Storyteller

Next, we have the decoder-only models, a branch made famous by the GPT (Generative Pre-trained Transformer) series from OpenAI. These models are the creative writers and storytellers of the AI world.

Using only the decoder stack, they excel at one thing: predicting what comes next. Their specialty is autoregressive generation. They write one word, then look at everything they've just written to decide on the next word, and then the next, and so on. This process allows them to generate incredibly fluid and human-like text.

- Real-World Example: ChatGPT. When you ask it to write an email or a story, you're seeing a decoder-only model in action. It takes your prompt—like "write a short poem about a robot learning to paint"—and creatively generates the next logical word, one after another, until a complete poem emerges.
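The generation loop itself is simple. In this sketch, `next_word` is a hypothetical stand-in for the model: a tiny lookup table that only checks the last word. A real GPT model instead scores every token in its vocabulary using the entire text so far. The loop structure (predict, append, repeat until a stop signal) is the part that reflects autoregressive generation.

```python
# Hypothetical toy "model": maps the last word to a predicted next word.
TOY_PREDICTIONS = {
    "a": "robot", "robot": "learns", "learns": "to", "to": "paint", "paint": "<end>",
}

def next_word(text_so_far):
    """Stand-in for a real model, which would read the whole text so far."""
    return TOY_PREDICTIONS.get(text_so_far[-1], "<end>")

def generate(prompt, max_new_words=10):
    words = prompt.split()
    for _ in range(max_new_words):
        word = next_word(words)  # 1. predict from everything written so far
        if word == "<end>":      # 2. stop when the model signals it is done
            break
        words.append(word)       # 3. append the prediction and repeat
    return " ".join(words)

print(generate("a"))
```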

This branch of the family tree has seen explosive growth. OpenAI released its first GPT model in 2018, and Google followed a few months later with BERT, so the race was on. GPT-2 arrived in 2019 with 1.5 billion parameters. The real game-changer was GPT-3 in 2020, which scaled up to a mind-boggling 175 billion parameters, over 100 times larger than its predecessor. You can discover more insights about the evolution of transformer models to see just how quickly things progressed.

Expert Opinion: "The split between encoder-only and decoder-only models was a critical moment," says Kenji Tanaka. "It proved you didn't always need the full architecture. By specializing, BERT became the king of analysis, while GPT became the master of creation. This specialization is a huge reason why we have such diverse and powerful AI tools today."

T5: The Versatile All-Rounder

Finally, there are the models that stick to the original blueprint, using both the encoder and the decoder. The superstar here is T5 (Text-to-Text Transfer Transformer). Think of these as the versatile multi-tools of the transformer family.

T5’s brilliance lies in its unifying approach: it frames every single NLP task as a "text-to-text" problem. You give it an input text, and it generates an output text. Simple, yet incredibly powerful.

This framework allows it to handle a massive range of tasks—translation, summarization, question answering—all within a single model.

- Real-World Example: Document summarization. You can give a T5 model a long article with the prefix "summarize:" and it knows what to do. The encoder "reads" the whole document to build a deep understanding, and then the decoder generates a concise, coherent summary. It has effectively translated a long text into a short one.
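Because every task is text in and text out, switching tasks is mostly a matter of changing the prefix on the input string. The sketch below only builds the input strings and does not run a model. "summarize:" comes from the example above, and "translate English to German:" is one of the prefixes used with T5. The helper function and the task table are hypothetical.

```python
# Task prefixes in the T5 style (no model is included in this sketch).
TASK_PREFIXES = {
    "summarize": "summarize: ",
    "translate_en_de": "translate English to German: ",
}

def to_text_to_text(task, text):
    """Frame a task as a single input string for a text-to-text model."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    return TASK_PREFIXES[task] + text

print(to_text_to_text("summarize", "Transformers process whole sentences at once..."))
print(to_text_to_text("translate_en_de", "That is good."))
```

Both strings would go to the same model, which answers each with plain text, whether that is a summary or a German sentence.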

These three branches—BERT, GPT, and T5—beautifully illustrate how one foundational idea can be adapted to create a stunning variety of tools, each one perfectly suited for its purpose.

Where You See Transformers in Your Daily Life

It’s easy to think of transformer architecture as some far-off, academic concept. The reality is, this technology is already a core part of your daily digital experience. You've probably used a transformer-powered tool in the last hour without even knowing it.

While generative AI chatbots are the most visible examples, their influence runs much deeper. Transformers are the engines humming beneath the surface of countless services you use every day, quietly making the internet smarter and more intuitive.

Powering Your Everyday Tools

Some of the biggest names in tech have rebuilt their core services around transformers. Their impact is so widespread that it's nearly impossible to go online and not interact with one.

Here are just a few places you’ll find them at work:

- Google Search: Ever wonder how Google understands your long, rambling questions? A transformer model like BERT is working behind the scenes to grasp the intent behind your words, not just the keywords.

- Google Translate: Much of the reason real-time translation now feels natural and accurate comes down to transformers, which can capture the subtle relationships between words in two different languages.

- GitHub Copilot: For developers, AI assistants like this can suggest entire chunks of code, thanks to large transformer models trained on vast amounts of public source code.

These are perfect examples of how the transformer architecture creates real, tangible value outside the research lab. If you're curious, you can dive deeper into the many other AI applications in daily life that are popping up everywhere.

Expert Opinion: "The true mark of the transformer's success isn't just its performance on benchmarks," notes Dr. Rostova. "It's how invisibly it has integrated into the tools billions of us rely on. When a technology works so well that you don't even notice it's there, you know it's made a real impact."

Beyond Text and Into New Frontiers

Transformers got their start with language, but their core ability to find patterns in sequences is proving incredibly versatile. Researchers are now pointing this architecture at entirely new problems, from analyzing images to decoding biological data.

This expansion is sparking some incredible breakthroughs. We're now seeing transformers that can:

- Generate Stunning AI Art: Text-to-image systems such as DALL-E use transformers to turn text prompts into visuals. The original DALL-E even generated each picture as a sequence of image tokens, much as a language model generates words.

- Accelerate Scientific Discovery: In biology, transformers are helping predict the complex 3D shapes of proteins from their amino acid sequences, which could massively speed up drug discovery.

Looking ahead, the focus is on making transformers more efficient and even more powerful. Researchers are tackling their heavy computational needs and finding ways for them to process even longer streams of information. This work is setting the stage for the next generation of AI, which will be woven even more deeply into the fabric of our lives.

Frequently Asked Questions About Transformers

As you start digging into transformers, a few key questions almost always pop up. It's a complex topic, but the core ideas are surprisingly intuitive. Let's break down some of the most common ones you'll encounter.

What Is the Main Advantage of Transformers Over RNNs?

The one-word answer? Parallelization. Think about how older models like RNNs worked: they had to read a sentence one word at a time, just like a human. This sequential process was a major bottleneck, making it incredibly slow to train on large amounts of text.

Transformers changed the game. Thanks to self-attention, they can look at every word in a sentence all at once. This ability to process everything in parallel is what unlocked the massive scaling we see today. Without it, training models the size of GPT-4 would be practically impossible—it would just take too long.

Why Is It Called Self-Attention?

The name is actually quite literal. The model is learning to pay attention to different parts of the same input sentence to understand the context of each word. For any given word, self-attention weighs the importance of all the other words in that same sentence.

It's an internal-facing mechanism. The sentence is essentially analyzing itself to figure out its own meaning, without needing any outside information.

Expert Opinion: "The beauty of self-attention is that it turns the abstract concept of 'context' into a series of mathematical calculations," says Kenji Tanaka. "The model learns on its own which words are important to which other words, allowing it to dynamically build meaning from the ground up for any given sentence."

Are Transformers Only Used for Text?

Not anymore! They got their start in natural language processing, but the architecture has proven to be incredibly versatile. We're now seeing transformers deliver groundbreaking results in a bunch of other areas. For example, Vision Transformers (ViT) treat small patches of an image just like words in a sentence, and they're now a dominant force in computer vision.
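Here is a sketch of the patching step. It assumes a tiny 4×4 grayscale "image" (rows of pixel values) and 2×2 patches. ViT models commonly use 16×16 patches, so a 224×224 image becomes (224/16)² = 196 patches, effectively a 196-word "sentence." The real model then projects each flattened patch into an embedding and adds positional encodings, which this sketch leaves out.

```python
def image_to_patches(image, patch_size):
    """Split a square grayscale image into flattened, non-overlapping patches,
    in reading order (left to right, top to bottom)."""
    size = len(image)
    if size % patch_size != 0:
        raise ValueError("image size must be a multiple of the patch size")
    patches = []
    for top in range(0, size, patch_size):
        for left in range(0, size, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# Toy 4x4 image with pixel values 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, 2)
print(len(patches), patches[0])

# The same arithmetic for a typical ViT setup: 224x224 pixels, 16x16 patches.
print((224 // 16) ** 2)
```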

Beyond images, they’re being used for:

- Audio processing for tasks like speech recognition.

- Protein folding in biology, a huge deal for discovering new medicines.

- Time-series forecasting for everything from stock prices to supply chain logistics.

This just goes to show that transformers are a fundamental architecture for finding patterns in nearly any type of sequential data, not just language.

Do Transformers Have Any Weaknesses?

Absolutely. Their biggest downside is the computational cost. The self-attention mechanism has to calculate a relationship score between every single token and every other token in the sequence. As a result, the work grows with the square of the text's length: double the input and you quadruple the number of scores. This is known as "quadratic complexity."

This is why a lot of current research is focused on creating more efficient versions of attention. The goal is to get past this bottleneck so we can scale these models to handle entire books, long-form videos, or even complete genomes without needing a supercomputer for every task.
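A quick calculation shows why long inputs get expensive. With n tokens, one attention head in one layer computes n × n scores. The token counts below are arbitrary examples.

```python
def attention_scores(num_tokens):
    """Pairwise scores one attention head computes: every token vs. every token."""
    return num_tokens * num_tokens

for n in (1_000, 2_000, 4_000, 100_000):
    print(f"{n:>7,} tokens -> {attention_scores(n):>15,} scores")
```

Each doubling of the input quadruples the number of scores. A 100,000-token input needs ten billion of them per head, per layer.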

At YourAI2Day, we break down the most important topics in artificial intelligence to keep you informed. Explore our resources to stay ahead of the curve. https://www.yourai2day.com