A Beginner’s Guide to the Machine Learning Workflow

Ever wonder how Netflix seems to know exactly what show you’ll binge next, or how your email inbox filters out junk mail? It's not magic; it's the result of a machine learning workflow. Think of it as the complete, step-by-step recipe that data scientists follow to build a machine learning system. It’s the roadmap that takes a simple idea and turns it into a smart, working application that solves a real-world problem.

This isn't just a single task; it's a full-circle journey of asking the right questions, preparing the right ingredients, and constantly refining your creation. Let's walk through it together.

Your AI Project Roadmap

Jumping into a machine learning project without a clear workflow is like trying to build a house without a blueprint. You might have the best materials in the world, but you'll probably end up with a mess. A machine learning workflow provides that crucial structure, breaking down what seems like a massive, complex undertaking into a series of manageable, easy-to-follow stages.

This structured approach is what keeps teams on the same page, helps them track their progress, and ensures the final product is actually useful. It turns an abstract goal ("let's build an AI!") into a concrete plan, making the whole process feel less like a scary math exam and more like an exciting, achievable project.

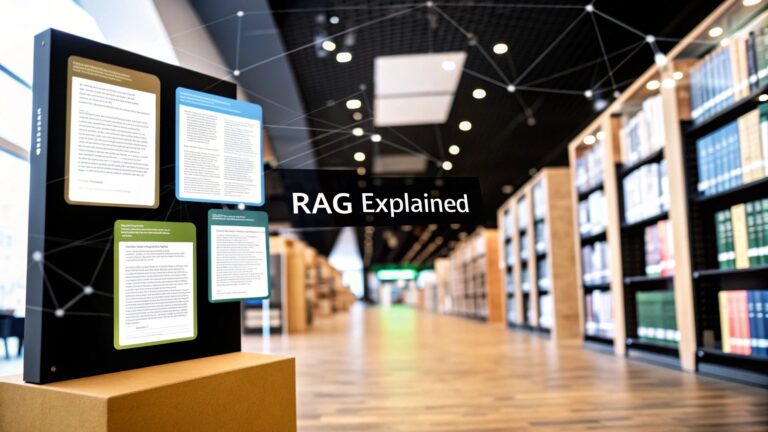

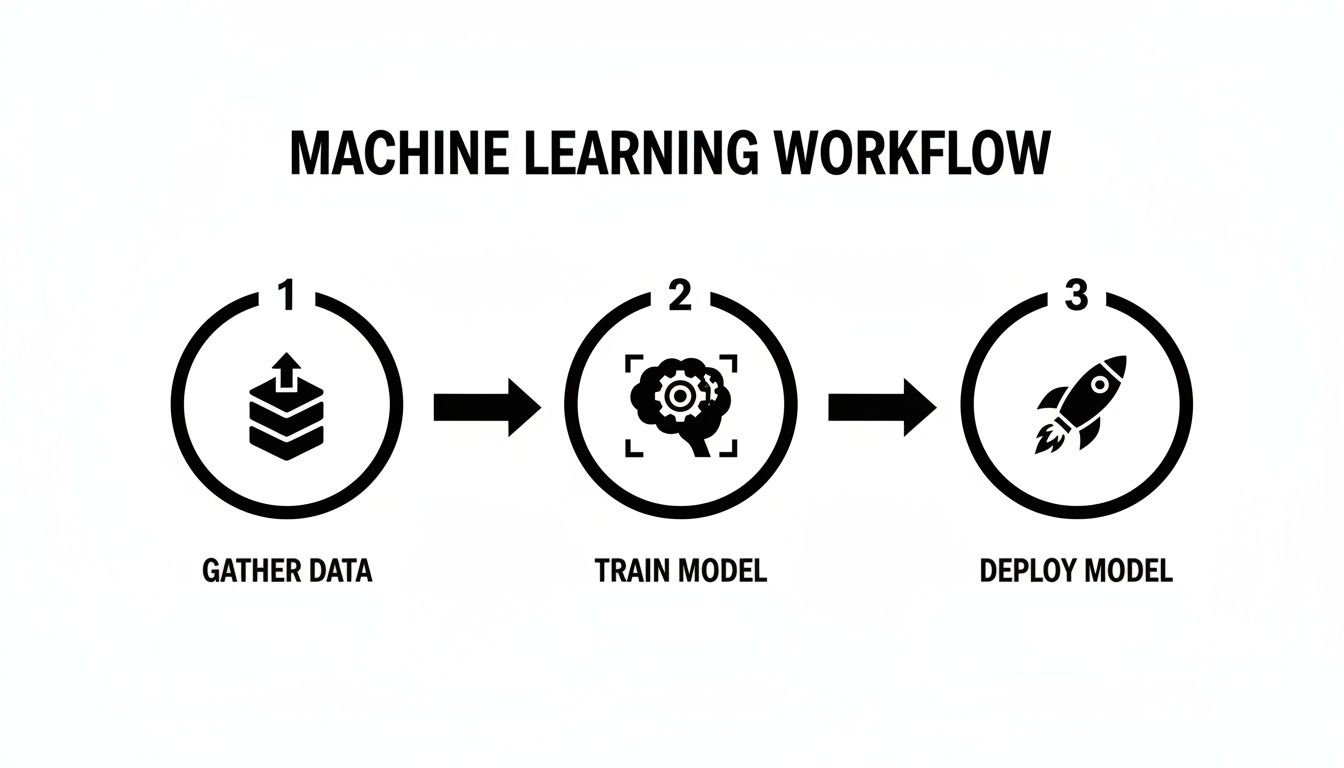

This diagram gives you a clean, high-level look at the core journey: from gathering data and training a model to finally deploying it for real users.

As you can see, the process moves forward from one step to the next, but it’s also designed to be a loop. Each stage builds on the one before it, and you’ll often find yourself circling back to an earlier step to make improvements as you learn more. It’s all part of the process!

The Power of a Structured Process

Following a defined workflow isn't just about being organized; it has a massive impact on your project's efficiency and success. For example, companies that incorporate AutoML (Automated Machine Learning) tools into their workflows see 4.8 times greater labor productivity growth than those that don't. For a beginner, this is great news! It means that using smart tools to streamline parts of the workflow can shrink development timelines from months down to just a few weeks. You can read more about the business impact of ML automation to see just how powerful this can be.

Expert Opinion: "The biggest mistake beginners make is jumping straight to model training. In reality, about 80% of the work in a machine learning workflow happens before you even select an algorithm. Your success is almost entirely determined by how well you frame the problem and prepare your data."

This is a critical insight. A brilliant algorithm can't save a project built on a shaky foundation. A structured workflow forces you to get those crucial early stages right, setting you up for success down the line.

The 7 Stages of a Machine Learning Workflow at a Glance

Every machine learning project, whether it's a simple spam filter for your inbox or a sophisticated recommendation engine for a streaming service, generally follows the same core stages. Think of these as the fundamental building blocks you can apply to almost any problem you want to solve with AI.

This table gives you a quick summary of the entire journey. It’s your cheat sheet!

| Stage Number | Stage Name | Primary Goal |
|---|---|---|
| 1 | Problem Framing | To clearly define the business problem and how a model will create value. |
| 2 | Data Collection | To gather all the raw data needed to train and test the model. |
| 3 | Data Preparation | To clean, transform, and format the raw data so an algorithm can understand it. |
| 4 | Model Training | To teach an algorithm to recognize patterns by feeding it the prepared data. |
| 5 | Model Evaluation | To test the model's accuracy and performance on data it has never seen before. |
| 6 | Deployment | To integrate the trained model into a live application or business process. |
| 7 | Monitoring & Tuning | To track the model's performance in the real world and retrain it as needed. |

By understanding this lifecycle, you have a mental model to guide your efforts and ensure no critical steps are missed. Let's dive into each of these stages and see what they look like in the real world.

Framing the Problem and Gathering Your Data

Every great machine learning project kicks off with a simple, powerful question—not a line of code. This first phase of the machine learning workflow is all about translating a broad business goal into a specific, measurable problem that an algorithm can actually solve. If you get this right, you've built a solid foundation for everything that follows.

Think about it. A goal like "improve customer retention" is a fantastic business aspiration, but it’s too vague for a machine. You have to reframe it into a clear, predictive question to make it actionable.

For instance, that vague goal becomes: "Can we predict which customers are at high risk of cancelling their subscription in the next 30 days?" Now that is a question a model can answer. It can give you a concrete "yes" or "no" for each customer, giving your business a clear path to take action (like offering a discount to at-risk customers!).
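To make that framing concrete, here is a minimal Python sketch of how the prediction target might be defined. It assumes, hypothetically, that each customer record has a cancellation date (or `None` if they never cancelled) and that customers are labeled as of a chosen snapshot date. The function name and the 30-day window are illustrative, not a standard.

```python
from datetime import date, timedelta


def churn_label(cancel_date, snapshot_date, window_days=30):
    """Label one customer for the question "will they cancel in the next 30 days?".

    Returns 1 if the customer cancelled within `window_days` after the snapshot,
    0 if they did not, and None if they had already cancelled by the snapshot
    (they are no longer at risk, so they are left out of the dataset).
    """
    if cancel_date is None:
        return 0  # never cancelled
    if cancel_date <= snapshot_date:
        return None  # already gone at snapshot time
    return int(cancel_date <= snapshot_date + timedelta(days=window_days))


snapshot = date(2024, 1, 1)
print(churn_label(date(2024, 1, 15), snapshot))  # → 1 (cancels 14 days later)
print(churn_label(date(2024, 3, 1), snapshot))   # → 0 (cancels, but outside the window)
print(churn_label(None, snapshot))               # → 0 (still a subscriber)
```

Each customer ends up with a clear 1 or 0, which is exactly the "yes" or "no" answer the model will learn to predict.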

Turning Business Goals into ML Problems

This translation step is easily one of the most creative and crucial parts of the whole process. It forces you to think like both a business strategist and a data scientist. The real objective here is to define what "success" looks like in tangible, data-driven terms.

Here are a few more practical examples of how this transformation works:

- Business Goal: "We want to increase our sales."
  - ML Problem: "Can we recommend the top three products a specific user is most likely to buy next?" (A classic recommendation problem.)

- Business Goal: "We need to reduce operational costs from equipment failure."
  - ML Problem: "Can we predict whether a specific machine will need maintenance in the next 7 days?" (A predictive maintenance problem.)

- Business Goal: "We want to improve our marketing campaigns."
  - ML Problem: "Which customer segments are most likely to respond to a new promotion?" (This could be a classification or a clustering problem.)

By framing the problem this way, you create a sharp, well-defined target. This clarity becomes your North Star, guiding everything from data collection to model selection and ensuring your final project delivers real business value.

Expert Opinion: "A well-defined problem is 50% of the solution. If you can't state what you're trying to predict in a single, clear sentence, you're not ready to start collecting data. Spend extra time here; it will save you months of wasted effort later."

Gathering Your Raw Ingredients: Data

With your question nailed down, it's time to find the ingredients to answer it: data. Data is the lifeblood of any machine learning model. The quality of your inputs will directly dictate the quality of your outputs—the old saying "garbage in, garbage out" is brutally true here.

Your data can come from just about anywhere—internal customer databases, website analytics, IoT sensor logs, public datasets, or even third-party APIs. Generally, it falls into one of two buckets:

- Structured Data: This is the clean, organized data you’d find in a spreadsheet or a database, with neat rows and columns. Think customer purchase histories, stock prices, or server logs.

- Unstructured Data: This is literally everything else. We’re talking about the text from emails and customer reviews, images, video files, and audio recordings. This data often holds incredible insights, but it requires a lot more effort to get it into a usable format.

No matter where it comes from, the first step is to cast a wide net and collect all the potentially relevant information. For our customer churn example, that means grabbing data on customer demographics, purchase frequency, support ticket history, website activity, and how long they've been a subscriber.
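Pulling those signals together usually means joining several sources on a shared customer ID. The sketch below does this with plain Python dictionaries. The field names (`customer_id`, `age`, and so on) and the three input lists are hypothetical. In practice you would more likely use pandas `merge` and `groupby` for the same job.

```python
from collections import Counter


def build_customer_table(demographics, purchases, tickets):
    """Combine per-customer data from three hypothetical sources into one row per customer."""
    # Count how many purchases and support tickets each customer has
    purchase_counts = Counter(p["customer_id"] for p in purchases)
    ticket_counts = Counter(t["customer_id"] for t in tickets)
    # Start from the demographics list so every known customer gets a row,
    # even with no purchases or tickets (a Counter returns 0 for missing keys)
    return [
        {
            **person,
            "purchase_count": purchase_counts[person["customer_id"]],
            "ticket_count": ticket_counts[person["customer_id"]],
        }
        for person in demographics
    ]


demographics = [{"customer_id": 1, "age": 34}, {"customer_id": 2, "age": 51}]
purchases = [{"customer_id": 1, "amount": 20}, {"customer_id": 1, "amount": 35}]
tickets = [{"customer_id": 2, "topic": "billing"}]
for row in build_customer_table(demographics, purchases, tickets):
    print(row)
```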

The more relevant signals you can gather, the better your model will be at spotting the subtle patterns of a customer who's about to leave. This initial collection phase is about getting everything you might need before you dive into the critical work of cleaning and refining it, which we'll get to next.

Preparing Your Data for Machine Learning

Once you've gathered all your raw ingredients, it's time to head to the kitchen. Like unwashed produce, raw data is packed with potential but is almost never ready to use as-is. This stage of the machine learning workflow, known as data preprocessing, is where you clean, shape, and transform that raw material into something a model can actually understand and learn from.

Fair warning: data scientists often report that this stage can eat up a staggering 80% of their total project time. It’s meticulous, sometimes tedious work, but it's hands down the most critical step for building an accurate model. A little extra effort here pays off massively down the road.

The Art of Cleaning Your Data

Imagine trying to teach a child to read using a book with smudged ink, missing pages, and typos on every line. It would be a frustrating, ineffective process. A machine learning model faces the same challenge. Data cleaning is all about fixing these kinds of imperfections so your model gets a clear, consistent signal.

This usually involves a few essential tasks.

- Handling Missing Values: It's rare to get a perfect dataset. More often than not, you'll find blank cells where data should be. You have to decide how to handle them—do you remove the entire row, or do you fill in the gap with a logical substitute like the average value (a technique called imputation)?

- Removing Duplicates: Sometimes, the same piece of information gets recorded multiple times. This can skew your model's perspective, making it think certain patterns are more important than they really are. Finding and zapping these duplicates is a quick win for data quality.

- Standardizing Formats: Your data might have annoying inconsistencies, like dates written as "10-25-2023" in one place and "October 25, 2023" in another. You need to pick one format and stick with it, so the algorithm can process everything correctly.
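Here is a minimal sketch of those three cleaning steps in plain Python. The record layout (`customer_id`, `age`, `signup_date`) is hypothetical, and mean imputation is only one of several reasonable choices. Notice the order: dates are standardized before duplicates are removed, so that the same signup written two different ways is recognized as a duplicate.

```python
from datetime import datetime
from statistics import mean

# The two date styles from the example above; extend this tuple as you find more
DATE_FORMATS = ("%m-%d-%Y", "%B %d, %Y")


def standardize_date(text):
    """Convert a date string in any known format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # not this format; try the next one
    raise ValueError(f"Unrecognized date format: {text!r}")


def clean_records(records):
    # 1. Standardize formats, so one date written two ways can be matched
    standardized = [{**r, "signup_date": standardize_date(r["signup_date"])} for r in records]

    # 2. Remove exact duplicates, keeping the first occurrence
    seen, unique = set(), []
    for r in standardized:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(r)

    # 3. Impute missing ages with the mean of the known ages
    fill_value = mean(r["age"] for r in unique if r["age"] is not None)
    return [{**r, "age": fill_value if r["age"] is None else r["age"]} for r in unique]


raw = [
    {"customer_id": 1, "age": 30, "signup_date": "10-25-2023"},
    {"customer_id": 1, "age": 30, "signup_date": "October 25, 2023"},  # duplicate in disguise
    {"customer_id": 2, "age": None, "signup_date": "01-05-2024"},
    {"customer_id": 3, "age": 40, "signup_date": "January 7, 2024"},
]
for row in clean_records(raw):
    print(row)
```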

Getting these cleaning steps right ensures your data is reliable and consistent, setting you up for the next crucial phase of preparation.

Engineering Powerful Features from Raw Data

With your data tidied up, you can move on to one of the most creative parts of the entire workflow: feature engineering. This is where you use your domain knowledge to select, transform, and even invent new data columns (features) that will act as the strongest possible signals for your model. It’s less about cleaning and more about making your data smarter.

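Think of it this way: if you were trying to predict house prices, you’d have features like 'number of bedrooms' and 'square footage'. Those are useful, sure. But what if you created a new feature such as the neighborhood's average price per square foot, calculated from past sales of other homes? That single engineered feature could be a much stronger indicator of value than the raw numbers alone. (You couldn't use the house's own price per square foot, though. Computing it requires the very price you're trying to predict, a mistake known as data leakage.)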

Expert Opinion: "Feature engineering is where the magic really happens. A simple model with excellent features will almost always outperform a complex model with mediocre ones. This is where human creativity and expertise give you a massive competitive edge in building a great AI."

Let's go back to our customer churn example. Your raw data might include a 'signup date' and a 'last activity date'. By themselves, they're just dates. But subtract the 'last activity date' from today's date and you've engineered a new feature: 'days since last activity'. Subtract the 'signup date' from the 'last activity date' and you get another: how many days the customer stayed active. Suddenly, you have direct, powerful signals that are far more useful to the model than the original data. Creating these kinds of insightful features is at the heart of building an effective model. If you want to dive deeper, you can explore the art of feature engineering for machine learning in our detailed guide.
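A minimal sketch of two date-based churn features follows. It assumes the dates are Python `date` objects and that "days since last activity" is measured from a reference date (`as_of`, typically the day you build the dataset). The feature names are illustrative.

```python
from datetime import date


def activity_features(signup_date, last_activity_date, as_of):
    """Turn two raw dates into two numeric features for a churn model."""
    return {
        # How long the customer has been quiet: a direct churn warning sign
        "days_since_last_activity": (as_of - last_activity_date).days,
        # How long the customer stayed active after signing up
        "active_tenure_days": (last_activity_date - signup_date).days,
    }


print(activity_features(date(2023, 1, 1), date(2023, 6, 1), as_of=date(2023, 6, 11)))
# → {'days_since_last_activity': 10, 'active_tenure_days': 151}
```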

Ultimately, this entire preparation stage is about transforming chaotic, raw information into a clean, structured, and insightful dataset. It's the painstaking work that turns basic data into predictive fuel, setting the stage for a model that can actually learn something useful.

Building and Training Your First AI Model

Alright, you’ve wrestled your data into shape. Now comes the fun part—the moment we start turning all that clean, organized information into a working piece of intelligence. This is where we choose a machine learning algorithm and "train" it to see the patterns you worked so hard to prepare.

So, what exactly is a "model"? Think of it as an empty brain, a blank slate ready to learn one specific skill. And "training" is just the teaching process. We feed this brain thousands of examples from our dataset until it starts to figure things out for itself. It’s a lot like teaching a toddler to recognize dogs by pointing out every dog you see at the park. Eventually, they get it.

Choosing Your Learning Style

Machine learning isn’t a one-size-fits-all game. Models learn in different ways, and the right approach always ties back to the problem you defined in step one. The three main "learning styles" you'll encounter are supervised, unsupervised, and reinforcement learning.

Picking the right model type is crucial for your project's success. This table breaks down the three main approaches to help you decide which path makes the most sense for your goals.

Choosing Your Machine Learning Model Type

| Model Type | What It Does | Example Use Case |
|---|---|---|
| Supervised Learning | Learns from data that is already labeled with the right answers. It's like studying with an answer key. | Predicting if an email is spam or not spam. |
| Unsupervised Learning | Finds hidden patterns and structures in unlabeled data. It sorts things into groups without being told what the groups are. | Segmenting customers into different marketing groups based on their purchasing habits. |
| Reinforcement Learning | Learns by trial and error, getting rewards for good actions and penalties for bad ones. | Training an AI to play chess or a video game. |

Each style has its place, but for most people starting out, supervised learning is the most direct route to solving a huge range of common business problems.
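To see what "training" means in the smallest possible supervised setting, here is a sketch that learns a single cutoff on one feature (days since last activity) from labeled examples. It is a deliberately tiny stand-in for a real algorithm, and the data is made up. With scikit-learn you would typically create a model and call its `fit` method instead.

```python
def train_threshold_classifier(examples):
    """Learn the cutoff that classifies the most labeled training examples correctly.

    examples: list of (days_since_last_activity, label) pairs, where label 1 = churned.
    The learned rule predicts "churn" when days_since_last_activity >= threshold.
    """
    best_threshold, best_correct = None, -1
    # Try every observed value as a candidate cutoff
    for threshold in sorted({x for x, _ in examples}):
        correct = sum((x >= threshold) == (y == 1) for x, y in examples)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


# Labeled training data: the "answer key" that supervised learning studies from
training_data = [(2, 0), (5, 0), (8, 0), (20, 1), (35, 1), (40, 1)]
threshold = train_threshold_classifier(training_data)
print(f"Predict churn when a customer has been inactive for {threshold}+ days")
```

Real algorithms search far richer sets of rules, but the idea is the same: adjust the model until its answers match the labels as closely as possible.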

The All-Important Train-Test Split

Before you let your model see a single piece of data, you have to do something that might feel counterintuitive: hide some of it. This is easily one of the most critical steps in the entire workflow, known as the train-test split.

Why do we do this? Because if you train your model on your entire dataset, you have no way of knowing if it actually learned the underlying patterns or if it just memorized the answers. It’s like giving a student the exact same questions for their homework and their final exam.

Instead, you split your data into two distinct piles:

- Training Set (around 80%): This is the large portion you'll actually use to teach the model.

- Testing Set (around 20%): This smaller, unseen chunk is kept locked away. You'll use it later to grade your model's performance on data it has never encountered before.
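Here is a bare-bones version of the 80/20 split, written in plain Python for illustration (the function name is hypothetical). In real projects you would usually reach for scikit-learn's `train_test_split` from `sklearn.model_selection`, which does the same job with its `test_size` and `random_state` parameters.

```python
import random


def split_train_test(rows, test_fraction=0.2, seed=42):
    """Shuffle the rows, then hold back `test_fraction` of them as the test set."""
    shuffled = list(rows)  # copy so the caller's data is not reordered
    # Shuffle first so the test set is a random sample, not just the last rows
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (training set, testing set)


train, test = split_train_test(range(100))
print(len(train), len(test))  # → 80 20
```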

Think of the testing set as that final exam. If your model aces it, you have good evidence that it will handle new, real-world data well. Training models on large datasets can require some serious number-crunching, which is why having the right infrastructure is so important. In fact, a recent report found that 59% of machine learning practitioners rely on Amazon Web Services (AWS) as their go-to cloud platform for these heavy-duty tasks. You can discover more insights about ML infrastructure trends to see how pros handle this stage.

Expert Opinion: "The biggest rookie mistake is 'overfitting.' This happens when a model becomes too attached to the training data—it memorizes the noise and details instead of learning the general patterns. A proper train-test split is your first and best defense against this. Your model's performance on the test set is the only result that truly matters."

Avoiding overfitting is the key to building a model that is genuinely useful, not just one that looks good on paper. Setting aside that testing data is how you check that your model is robust, reliable, and ready to deliver real value.

Evaluating and Fine-Tuning Model Performance

You’ve built a model and trained it on your data. It's now spitting out predictions, which feels like a huge win. But before you break out the champagne, you have to answer the most important question: is it actually any good?

This is the evaluation stage of the machine learning workflow, and it’s basically your quality control department. Here, you’ll put your model through its paces, testing it against that untouched test data you carefully set aside earlier.

Think of it as a final exam. The model spent all its time "studying" the training data, but now it’s time to see if it can apply that knowledge to problems it's never seen before. This isn't just about getting a pass/fail grade; it's about digging into how the model gets things right and, more importantly, where it goes wrong.

Measuring Success with the Right Metrics

To grade your model, you need the right report card. Just looking at accuracy—the raw percentage of correct predictions—is often a trap.

Imagine you're building a model to detect a rare disease that only affects 1% of people. A lazy model that just predicts "no disease" every single time would be 99% accurate. Sounds great, right? But it’s completely useless because it would miss every single person who actually has the disease.
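The arithmetic is easy to check. This sketch assumes a made-up group of 1,000 people, 10 of whom have the disease, and a "lazy" model that always predicts "no disease".

```python
# 1 = has the disease, 0 = healthy; 10 out of 1,000 people (1%) are sick
actual = [1] * 10 + [0] * 990
# The lazy model predicts "no disease" for everyone
predicted = [0] * len(actual)

accuracy = sum(a == p for a, p in zip(actual, predicted)) / len(actual)
missed_cases = sum(1 for a, p in zip(actual, predicted) if a == 1 and p == 0)

print(f"Accuracy: {accuracy:.0%}")                             # → Accuracy: 99%
print(f"Sick people missed: {missed_cases} of {sum(actual)}")  # → Sick people missed: 10 of 10
```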

This is exactly why we need to look at a few key metrics together to get the full story. Let's use a classic spam filter example to make this crystal clear:

- Precision: Out of all the emails your model labeled as spam, how many were actually junk? High precision means you're not accidentally sending important client emails to the spam folder (low false positives).

- Recall: Of all the real spam emails that hit your inbox, how many did your model actually catch? High recall means your filter is doing a great job of keeping junk out of sight (low false negatives).

You're almost always trying to strike a balance between the two. A model with perfect recall might catch every single spam message, but it might also flag half of your legitimate emails. On the flip side, a model with perfect precision might never mislabel a good email, but it could let a ton of junk slip through. Understanding this trade-off is absolutely crucial for building a model that solves the real problem you’re facing.
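Here is a small sketch that computes both metrics from scratch and shows the trade-off in action. The spam scores and labels are invented for illustration. The model is assumed to output a score, and an email is flagged as spam when its score reaches a chosen threshold. For real projects, scikit-learn provides `precision_score` and `recall_score` in `sklearn.metrics`.

```python
def precision_recall(actual, predicted):
    """Compute precision and recall for binary labels, where 1 = spam."""
    pairs = list(zip(actual, predicted))
    true_pos = sum(1 for a, p in pairs if a == 1 and p == 1)   # spam caught
    false_pos = sum(1 for a, p in pairs if a == 0 and p == 1)  # good email flagged
    false_neg = sum(1 for a, p in pairs if a == 1 and p == 0)  # spam missed
    # Report 0.0 when a ratio is undefined (nothing flagged, or no spam at all)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall


# Hypothetical model scores (higher = more likely spam) and the true labels
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

# Lowering the threshold flags more emails: recall rises, precision falls
for threshold in (0.85, 0.5, 0.25):
    flagged = [int(s >= threshold) for s in scores]
    precision, recall = precision_recall(labels, flagged)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
# threshold=0.85: precision=1.00, recall=0.50
# threshold=0.5: precision=0.60, recall=0.75
# threshold=0.25: precision=0.57, recall=1.00
```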

Fine-Tuning Your Model for Peak Performance

Once you’ve got your evaluation results, it's pretty rare that you'll be perfectly happy with the first attempt. There's almost always room for improvement, and this is where hyperparameter tuning comes into play.

If a model is an engine, think of hyperparameters as the various knobs and dials you can adjust to get more power or better fuel efficiency. These are settings that you, the data scientist, choose before training starts, such as how deep a decision tree is allowed to grow or how many neighbors a k-nearest-neighbors model consults.

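It's a bit like tuning a guitar. You patiently adjust the tension on each string (the hyperparameters) until the instrument produces the perfect sound (the best model performance). This is an iterative loop: you tweak a setting, retrain the model, and evaluate it again, slowly squeezing more performance out of it. Do this scoring on a separate validation set (or with cross-validation) rather than on your test set. Otherwise you end up tuning to the final exam, and the test score stops being an honest measure of how the model handles unseen data.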
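As a sketch of that loop, the code below tunes one hyperparameter in a tiny k-nearest-neighbors classifier: `k`, which sets how many nearby training examples get a vote. It tries a few values and scores each on a separate validation set, leaving the test set untouched. The data and candidate values are made up. scikit-learn's `GridSearchCV` automates the same idea using cross-validation.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Predict a label for x by majority vote among its k nearest training points (one numeric feature)."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def validation_accuracy(train, validation, k):
    correct = sum(knn_predict(train, x, k) == y for x, y in validation)
    return correct / len(validation)


def tune_k(train, validation, candidate_ks):
    """Score every candidate k on the validation set and return the best one."""
    scores = {k: validation_accuracy(train, validation, k) for k in candidate_ks}
    best_k = max(scores, key=scores.get)  # ties go to the first k in candidate_ks
    return best_k, scores


# Toy data: (feature, label) pairs; (2.5, 1) is a noisy point that misleads k=1
train = [(1, 0), (2, 0), (2.5, 1), (3, 0), (6, 1), (7, 1), (8, 1)]
validation = [(2.4, 0), (7.5, 1)]
best_k, scores = tune_k(train, validation, candidate_ks=[1, 3, 5])
print(scores, "-> best k:", best_k)
```

Here, `k=1` is fooled by the single noisy point. Larger values of `k` smooth it out, which is exactly the kind of difference tuning is meant to uncover.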

Expert Opinion: "Beginners often think model selection is the most important step. In reality, a well-tuned, simpler model often outperforms a complex, untuned one. Hyperparameter tuning is where you go from a 'good enough' model to a great one."

Getting these settings right matters even more with massive models like LLMs, where small adjustments (to the learning rate, for example) can lead to big differences in output. For anyone diving into that world, you can learn more by checking out our guide on how to fine-tune an LLM effectively.

By systematically tuning and re-evaluating, you find the sweet spot for your specific dataset and problem. It’s how you ensure your model isn't just functional—it's performing at its absolute best and is truly ready for deployment.

Deploying and Monitoring Your Model in the Wild

An AI model sitting on a developer's laptop is just a clever experiment. It only starts creating real value when it’s released into the wild, actively making predictions that help a business or an application. This final, critical stage of the machine learning workflow is all about deployment—the process of integrating your trained model into a live production environment.

But launching your model isn't the finish line. It's really the starting line for a whole new race. The world is constantly changing, and what your model learned yesterday might not be true tomorrow. This is why ongoing monitoring is every bit as important as the deployment itself.

From the Lab to the Real World

Getting your model "live" can take a few different forms, depending on what you need it to do. The goal is to make its predictive power accessible to other software or to end-users. Think of it as installing a new, intelligent engine into your existing business machinery.

There are several common strategies for this, each with its own trade-offs:

- API Endpoint: This is probably the most popular method. You wrap your model in an API (Application Programming Interface), which lets other applications send it data and get a prediction back. For example, a website could send customer data to the API and instantly receive a churn risk score.

- Embedded Model: For applications that need lightning-fast responses, like on a smartphone or an industrial sensor, the model can be embedded directly into the device's software. This avoids network delays but makes updates a bit trickier.

- Batch Predictions: Not all predictions need to happen in real-time. Sometimes, you just need to run your model over a large chunk of data on a schedule, like generating a list of at-risk customers once a day.
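To make the API and batch options concrete, here is a minimal sketch using only Python's standard library. `churn_risk_score` is a hypothetical stand-in for a trained model (a real service would load a saved model instead), and the JSON field names and `risk_cutoff` value are invented. Embedded deployment depends on the target device, so it is not shown. Production services typically use a web framework and add input validation, authentication, and logging.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def churn_risk_score(customer):
    """Hypothetical stand-in for a trained model: returns a risk score between 0 and 1."""
    score = 0.01 * customer.get("days_since_last_activity", 0) + 0.1 * customer.get("support_tickets", 0)
    return min(score, 1.0)


# API endpoint: other applications POST customer JSON and get a score back
class PredictionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        customer = json.loads(self.rfile.read(length))
        body = json.dumps({"churn_risk": churn_risk_score(customer)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Start the API on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictionHandler).serve_forever()


# Batch predictions: score a whole list on a schedule (e.g. a nightly job)
def batch_predict(customers, risk_cutoff=0.5):
    """Return the IDs of customers whose risk score meets the cutoff."""
    return [c["customer_id"] for c in customers if churn_risk_score(c) >= risk_cutoff]
```

After calling `serve()`, POSTing `{"days_since_last_activity": 30, "support_tickets": 2}` to `http://127.0.0.1:8000` should return a `churn_risk` of about 0.5. Both paths share the same scoring function, so the live API and the nightly batch job can never disagree about how a customer is scored.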

Choosing the right path comes down to your specific use case. To get a deeper understanding of these different approaches, check out our complete guide on machine learning model deployment.

The Silent Killer: Model Drift

Once your model is live, a new challenge emerges: model drift. This is the natural and inevitable decay of a model's predictive accuracy over time. It happens because the real world doesn't stand still. Customer behaviors change, market trends shift, and new data patterns emerge that your model was never trained on.

Expert Opinion: "A deployed model is a living thing. If you treat it as a 'fire and forget' project, its performance will degrade, and it can go from being a valuable asset to a liability without you even noticing. Active monitoring isn't optional; it's essential risk management."

Imagine a model trained to predict fashion trends back in 2019. It would be completely lost trying to make sense of today's market. That's model drift in action. Without monitoring, your once-accurate model could start making poor, costly decisions.

Your Post-Launch Monitoring Plan

To fight model drift and ensure long-term success, you need a robust monitoring plan. This involves continuously tracking both the model's technical performance and the real-world data it's seeing. Your plan should be a simple checklist of vitals to watch.

A Practical Post-Deployment Checklist:

- Monitor Input Data: Are the new data points coming in structurally similar to the training data? Watch for sudden shifts in averages, the appearance of new categories, or an increase in missing values. This is often your earliest warning sign of drift.

- Track Model Predictions: Keep an eye on the output. If your churn model suddenly starts predicting that 90% of customers will leave, something is probably wrong with the data it's receiving.

- Measure Real-World Accuracy: Whenever possible, compare your model's predictions to actual outcomes. If you predicted a customer would churn and they did, that's a win. If they didn't, that's an error. Tracking this real-world accuracy is the ultimate measure of performance.

- Set Up Alerts: Don't wait to discover a problem by accident. Set up automated alerts that trigger when key metrics (like accuracy or data distribution) drop below a certain threshold.

- Schedule Retraining: Based on what you learn from monitoring, establish a cadence for retraining your model on fresh data—whether it's quarterly, monthly, or even weekly—to keep it sharp and relevant.
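The first and fourth checklist items can be partly automated. Below is a minimal sketch of an input-data check for one numeric feature. It flags a large shift in the average (measured in training standard deviations) and a jump in missing values. The thresholds and example data are hypothetical, and more rigorous drift tests (such as the Kolmogorov-Smirnov test) exist.

```python
import statistics


def drift_alerts(train_values, live_values, max_mean_shift=0.5, max_missing_rate=0.05):
    """Compare one numeric input feature in production against its training data.

    None marks a missing value. The thresholds are illustrative defaults, not standards.
    """
    train_present = [v for v in train_values if v is not None]
    live_present = [v for v in live_values if v is not None]
    if not live_present:
        return ["all live values are missing"]

    alerts = []
    # Fall back to 1 if the training values never varied, to avoid dividing by zero
    train_std = statistics.stdev(train_present) or 1.0
    # How far the live average has moved, measured in training standard deviations
    shift = abs(statistics.mean(live_present) - statistics.mean(train_present)) / train_std
    if shift > max_mean_shift:
        alerts.append(f"mean shifted by {shift:.1f} standard deviations")
    # Share of incoming rows where this feature is blank
    missing_rate = 1 - len(live_present) / len(live_values)
    if missing_rate > max_missing_rate:
        alerts.append(f"missing-value rate is {missing_rate:.0%}")
    return alerts


# Hypothetical "days since last activity" values seen in training vs. this week in production
training = [10, 12, 11, 13, 9, 10, 12, 11]
this_week = [20, 21, None, 22, None, 19]
for alert in drift_alerts(training, this_week):
    print("ALERT:", alert)
```

In a real system, a function like this would run on a schedule, and any non-empty result would trigger the automated alerts described above.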

Common Questions About the Machine Learning Workflow

Getting started in machine learning always brings up a few practical questions. Let's walk through some of the most frequent ones people ask, so you can get a clearer picture of how these projects really work.

How Long Does a Typical Machine Learning Workflow Take?

This is the classic "how long is a piece of string?" question. The honest answer is that it varies wildly.

A simple project with a small, perfectly clean dataset might be wrapped up in just a few weeks. But that’s rarely the case in the real world. A more realistic project—one that involves wrangling messy data from different places, a ton of feature engineering, and rigorous model tuning—can easily stretch into several months, sometimes even over a year.

Keep in mind that data preparation is almost always the longest pole in the tent. It’s not uncommon for this stage alone to eat up 80% of the entire project timeline.

What Are the Most Common Tools Used in a Workflow?

While the ML toolkit is always expanding, a few key tools form the backbone of most projects. Python is the undisputed king of the hill, mainly because of its incredible ecosystem of libraries built specifically for data science and ML.

You'll run into these tools constantly:

- Pandas: The essential library for any kind of data cleaning and manipulation. If your data is in a table, you'll be using Pandas.

- Scikit-learn: A powerhouse for building just about any traditional machine learning model you can think of.

- TensorFlow & PyTorch: The two heavyweights in the deep learning space, used for building complex neural networks.

- Cloud Platforms: Services from AWS, Google Cloud, and Microsoft Azure provide the raw computing power needed to train and deploy models at scale, which makes them the standard choice for serious work.

Expert Opinion: "You don't need to master every tool at once. Start with Python, Pandas, and Scikit-learn. That combination is powerful enough to handle a huge percentage of real-world machine learning problems, giving you a solid foundation before you dive into more specialized tools."

What Is MLOps and How Does It Relate to the Workflow?

You’ll hear the term MLOps (Machine Learning Operations) pop up more and more, and for good reason. It’s a crucial practice for anyone moving beyond hobby projects.

Think of it like this: MLOps takes the principles of automation and reliability from software development (known as DevOps) and applies them to the entire machine learning lifecycle. It's not a single step in the workflow; it's a philosophy that covers everything from data ingestion to model monitoring.

The whole point is to make the process of building, deploying, and maintaining models faster, repeatable, and less prone to human error. When a model is running a critical business function, MLOps is what keeps it stable and reliable.

At YourAI2Day, we're dedicated to bringing you clear, practical insights into the world of artificial intelligence. To stay ahead of the curve and continue your learning journey, explore our latest articles and resources at https://www.yourai2day.com.