Your Guide to Machine Learning Model Deployment

Ever wondered how a machine learning model escapes a data scientist's laptop to power a real-world app, like your Netflix recommendations or a spam filter? That leap from a Jupyter notebook to a live application is the entire game of machine learning model deployment. It's the critical final mile that turns a clever piece of code into a valuable business tool that actually does something.

From Code to Reality: Your Deployment Journey

Think of it like this: building a model is like writing a brilliant manuscript. You've spent countless hours researching, writing, and perfecting every chapter. But a manuscript gathering dust on your desk doesn't help anyone, right?

Deployment is the publishing process. It’s the editing, printing, distribution, and logistics needed to get that book into the hands of readers. For a model, it’s about making your hard work accessible and useful to the people or systems that need it. This is where the real value is unlocked.

Why Deployment Is More Than Just Flipping a Switch

It's a common misconception that deploying a model is a simple hand-off. The reality is that it’s a sophisticated process that lives at the intersection of data science and software engineering. When you move a model into a live production environment, you slam into a wall of real-world challenges that just don't exist in a clean, controlled development setting.

This journey means you have to plan for:

- Scalability: How will your model handle thousands, or even millions, of requests without breaking a sweat?

- Reliability: What's the plan if a server goes down? Your model needs to be resilient and available 24/7.

- Maintenance: How do you update the model with fresh data or patch a bug without taking the whole system offline?

- Efficiency: The model has to return predictions fast, without hogging a ton of expensive computing resources.

Getting these things right is what separates AI projects that deliver real impact from those that become expensive science experiments. To get a better handle on this, you can learn more about the strategic value of these projects by exploring our guide on implementing AI in business.

The Growing Importance of Nailing Deployment

The stakes for getting deployment right have never been higher. As more businesses rely on AI to run their operations, the ability to deploy and manage models effectively has become a core competency, not just a "nice-to-have."

The market numbers tell the story. The global machine learning market is projected to skyrocket from $93.95 billion in 2025 to a staggering $1.41 trillion by 2034. This growth isn't about building more models; it's about putting those models into production to solve real problems. North America and Europe are leading the charge, making up nearly 89% of the market and showing just how crucial this final step is to business success.

As one MLOps expert I know likes to say, "A machine learning model only begins to create value once it's deployed. Until then, it's a powerful but dormant asset."

This guide will give you a clear roadmap from theory to application. We'll break down different deployment patterns, walk through the essential tools, and share best practices to help you confidently get your models out of your lab and into the real world. Let's get started.

Choosing the Right Deployment Strategy

Getting a machine learning model out into the world isn't a one-size-fits-all process. The best machine learning model deployment strategy comes down to what your application actually needs to do. You wouldn't use a slow, daily process for something that demands an instant answer, just like you wouldn't send a formal invitation by text message.

To build a successful, real-world AI application, you first need to understand the fundamental deployment patterns. Let’s break down the big three.

Online or Real-Time Inference

Think of real-time inference like a live conversation. You ask a question, and you expect an answer right away. This deployment pattern works the same way, processing a single piece of data (or a tiny batch) on the fly to give you a prediction in milliseconds.

This approach is non-negotiable for any application where a user is actively waiting for the model to do something. It’s the magic behind most of the AI we interact with every day.

Common Use Cases for Real-Time Inference:

- Fraud Detection: Instantly flagging a sketchy credit card transaction before the payment goes through.

- Product Recommendations: Showing an online shopper relevant items as they click around your e-commerce site.

- Spam Filtering: Deciding in a fraction of a second whether an incoming email belongs in the inbox or the junk folder.

The biggest win here is speed and immediate feedback. The trade-off? Your model has to be up and running 24/7, which can make scaling and maintenance more complex, as you have to guarantee low latency and constant availability.

Batch Inference

If real-time is a phone call, batch inference is more like processing payroll at the end of the month. It doesn't need to happen this very second. Instead, it chews through huge volumes of data on a set schedule—maybe hourly, daily, or even weekly.

This "collect now, process later" method is a fantastic fit for tasks that aren't time-sensitive but involve enormous datasets. It's often far more cost-effective and simpler to manage than a real-time system.

Practical Examples of Batch Inference:

- Sales Forecasting: Kicking off a job every morning to generate a sales forecast for the week based on yesterday's numbers.

- Customer Segmentation: Running a weekly process to group customers into new marketing segments based on their recent activity.

- Image Tagging: Processing a new dump of thousands of user-uploaded photos overnight to get them all categorized and tagged.

While batch processing is incredibly efficient for big jobs, its Achilles' heel is latency. The insights aren't immediate, making it a poor choice for anything requiring live user interaction.

Edge Deployment

Edge deployment flips the script entirely. Instead of running in the cloud, the model lives directly on a device—a smartphone, a smart camera, or a sensor on a factory floor. It’s like having a calculator in your pocket instead of calling someone to do the math for you. All the number-crunching happens right where the data is created.

Expert Opinion: "Moving inference to the edge is a game-changer for privacy and speed. By processing data locally, you minimize latency and reduce the risk associated with sending sensitive information to a central server. It's essential for the next wave of responsive, intelligent devices."

This strategy is absolutely critical for apps that need lightning-fast responses or have to work even when there's no internet connection.

When to Use Edge Deployment:

- Facial Recognition: Unlocking your smartphone instantly without sending your face to the cloud.

- Predictive Maintenance: A sensor on a piece of machinery running a model to spot early signs of a potential failure.

- Voice Assistants: Handling simple commands like "set a timer" on a smart speaker without needing to hit a server.

The main benefits are incredibly low latency and the ability to function offline. But this approach comes with its own set of challenges. Edge devices have limited compute power and memory, so models often need to be heavily optimized just to run.

Comparing ML Model Deployment Patterns

To help you decide which pattern is the best fit for your project, here’s a quick-glance comparison of the three primary approaches.

| Deployment Pattern | Best For | Example Use Case | Key Advantage |

|---|---|---|---|

| Real-Time Inference | Instant, on-demand predictions | Credit card fraud alerts | Immediate response |

| Batch Inference | Large data volumes on a schedule | Nightly sales forecasting | High throughput & efficiency |

| Edge Deployment | Offline functionality and low latency | Smartphone facial recognition | Speed and data privacy |

Choosing the right deployment path from the beginning is one of the most important decisions you'll make. It will save you countless headaches down the road and ensure your model actually delivers on its promise.

Your Deployment Toolkit: Platforms and Tools

Now that we’ve covered the "what" and "why" of deployment strategies, it’s time to get our hands dirty with the "how." Let's dive into the actual platforms and tools that bring your machine learning models to life.

Think of this as a tour of your workshop. We'll look at the big all-in-one workstations, the standardized shipping containers for moving your work around, and the nimble, on-demand engines that fire up only when you need them.

Cloud Platform Options

Major cloud platforms like AWS SageMaker, Google AI Platform, and Azure Machine Learning are like fully-equipped, managed workshops. They bundle everything you need for training, tuning, hosting, and monitoring your models under one roof.

The real advantage here is convenience and speed. These platforms are designed to remove a ton of the operational friction that can slow down a project.

Key benefits include:

- Effortless Setup: You can get a ready-made API endpoint for your model in minutes, not days.

- Built-in Scalability: The infrastructure automatically scales up or down to handle traffic spikes, so you don't have to worry about your model crashing under pressure.

- Integrated Tooling: They come with tools for experiment tracking, model versioning (a model registry), and real-time performance alerts.

“Using a managed cloud platform reduces operational burden and speeds up time to production,” notes AI engineer Priya Desai.

Of course, you aren't locked into a pure-cloud approach. Many teams create a hybrid setup, combining managed services with their on-premise infrastructure for added security and control. For instance, you could use a dedicated VPC to securely connect cloud endpoints to private data centers or lean on hybrid tools like AWS Outposts or Azure Arc for consistent management across environments.

Containerization Essentials

This is where Docker comes in. If you're new to it, containerization is all about packaging your model, its code, and all its dependencies into a single, self-contained unit.

The best analogy is a standardized shipping container. It doesn't matter if it's on a ship, a train, or a truck—the contents inside are protected and arrive exactly as they were packed. This simple idea solves the age-old "it worked on my machine!" problem, ensuring your model runs identically everywhere.

But just having containers isn't enough. You need a way to manage them at scale. That's the job of an orchestrator like Kubernetes, which acts as the air traffic control for your fleet of containers.

Kubernetes handles critical tasks like:

- Automated Scaling: It spins up more containers (pods) when traffic is heavy and shuts them down when it's light.

- Rolling Updates: You can deploy new model versions with zero downtime.

- Self-Healing: If a container fails, Kubernetes automatically replaces it to keep your application running.

When you're building a Docker image for your model, a few best practices go a long way. Start with a lightweight base image, and always be specific about your library versions to avoid unexpected breaks down the line. Use multi-stage builds to keep the final image small and scan it for security vulnerabilities before pushing it to a private registry for your team.

Serverless Model Hosting

What if you could forget about managing infrastructure altogether? That's the promise of serverless computing with platforms like AWS Lambda, Google Cloud Functions, and Azure Functions.

This approach is perfect for models that get intermittent or unpredictable traffic. Instead of paying for a server that's always on and waiting, you only pay for the exact compute time your model uses for an inference.

Deploying a model with a serverless function is surprisingly straightforward:

- Package your inference code and model file into a zip archive or a container image.

- Write a simple "handler" function that acts as the entry point for your API.

- Configure the function's memory, timeout settings, and any environment variables it needs.

- Set up an HTTP trigger, often through a service like API Gateway, to make it accessible from the web.

- Test it locally, then deploy it with a few clicks in the cloud console or a single command-line instruction.

If you're looking to improve your data pipelines before they even hit the model, check out our comprehensive guide on AI Data Analysis Tools to build a stronger foundation.

Serverless isn't without its quirks, namely cold starts (a slight delay when the function is invoked for the first time) and execution timeouts. But you can manage these. "Provisioned concurrency" keeps a few instances warm to reduce latency, and you can optimize performance by caching assets and fine-tuning memory allocation.

Selecting Your Best Fit

So, which path is right for you? As with most things in tech, it depends. Each method presents a different trade-off between cost, control, and complexity.

Cloud platforms are fantastic for end-to-end management and speed. Containers give you unmatched portability. And serverless is a cost-effective champion for bursty, event-driven workloads.

The best way to decide is to experiment. Build a small prototype with each approach and see which one feels right for your team's skills and your project's specific needs. This toolkit is all about giving you the power to choose the right tool for the job.

Automating Your Workflow with MLOps

Trying to deploy models by hand is a recipe for disaster. It's slow, prone to human error, and completely falls apart once you have more than one or two projects. This is where the professionals turn to automation, and the practice that makes it all happen is called MLOps (Machine Learning Operations).

Simply put, MLOps is about applying the same rigorous, automated principles from software engineering to the world of machine learning.

At the very heart of MLOps is the CI/CD pipeline, which stands for Continuous Integration and Continuous Deployment. Think of it as a fully automated assembly line for your model. You feed it the raw materials—your code and data—and it handles every single step to produce a final, production-ready model without anyone needing to click a button.

When a developer pushes even a small code change, the whole assembly line whirs to life. This automation is what ensures every update is consistent, reliable, and ready for users in record time.

The Stages of a CI/CD Pipeline

Let's break down what this looks like in the real world. Imagine you have a sentiment analysis model that sorts customer reviews into "positive" or "negative." A data scientist on your team just tweaked the code to better understand sarcasm.

The moment they commit that change, here’s what the automated pipeline does:

-

Continuous Integration (CI): As soon as the new code hits a shared repository like GitHub, the pipeline kicks off. It immediately runs a battery of automated tests to make sure the new code didn't accidentally break something else. This isn't just about code; it includes data validation to confirm the model can still handle the data formats it expects.

-

Model Validation: Once the initial checks pass, the pipeline gets to the important part: it automatically retrains the model using the new code. Then, it pits the new model against a holdout test dataset. If key metrics like accuracy or precision dip below a predefined threshold, the pipeline fails and alerts the team. This is a critical safety net that prevents a weaker model from ever seeing the light of day.

-

Packaging: With the new model validated, the pipeline packages it up for deployment. This typically means creating a Docker container—a self-contained, portable unit that bundles the model file, the inference code (like a FastAPI app), and all of its dependencies.

-

Continuous Deployment (CD): Finally, the pipeline pushes this new container into the production environment. This doesn't have to be an all-or-nothing switch. It could be a full rollout, or it might be a more cautious "canary release," where the new model is only exposed to a small fraction of users first to monitor its real-world performance.

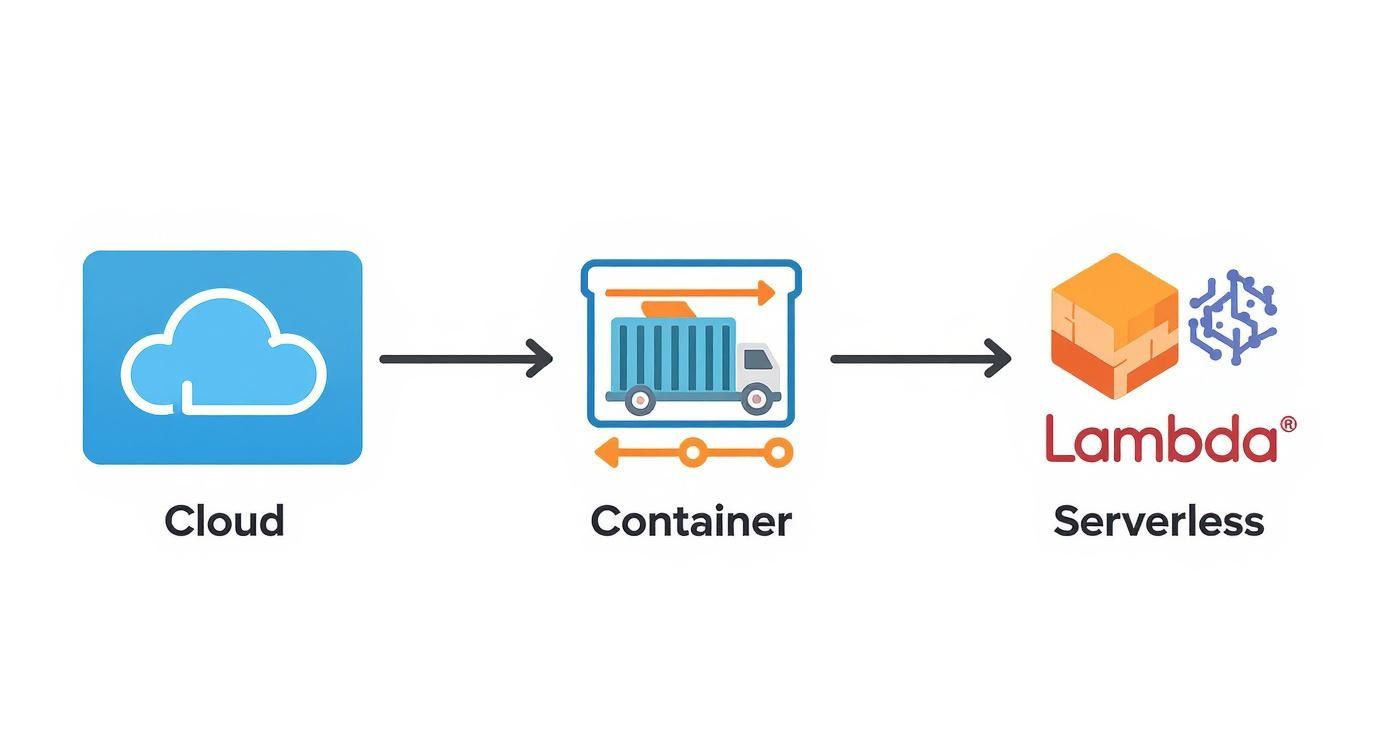

This infographic gives you a bird's-eye view of how all the pieces, from cloud platforms to containers, fit into this automated flow.

As you can see, the journey from high-level cloud services down to specific containers or serverless functions is all part of a larger, automated puzzle that MLOps helps solve.

Introducing Continuous Training

A CI/CD pipeline is fantastic for handling changes to your code, but what about when your data changes? This is where the next evolution, Continuous Training (CT), comes into play. CT creates a truly "hands-off" system where the model can retrain and redeploy itself automatically.

A CT pipeline is designed to automatically trigger retraining whenever it detects that new data is available or that the model's performance in production has started to degrade—a problem we call model drift.

This proactive approach keeps your model fresh, relevant, and accurate over time without requiring a data scientist to manually step in.

This is quickly becoming the gold standard for modern machine learning model deployment. In fact, a major trend in MLOps heading into 2025 is the widespread adoption of this kind of automation.

Automated retraining pipelines are essential for keeping models from going stale, slashing error rates, and freeing up your team to work on the next big thing. By merging MLOps with established DevOps practices, we're creating a single, unified development cycle for both software and AI. As you can imagine, this dramatically accelerates how quickly you can get valuable models out the door. You can learn more about how MLOps practices are evolving and what you need to know on hatchworks.com.

Keeping Your Live Model Healthy

Getting your model into production feels like crossing the finish line, but it’s really just the beginning. This is where the real race starts. Once your model is live, it’s no longer in a clean, controlled lab; it's out in the wild, dealing with messy, unpredictable, real-world data.

The truth is, the world doesn't stand still. A model trained on last year's data might be completely out of sync with today’s reality. This is one of the most critical challenges in machine learning model deployment, and it has a name: model drift.

As one expert puts it, "A deployed model without monitoring is a ticking time bomb." It might look fine on the surface, but its performance could be silently degrading, leading to bad predictions and, ultimately, poor business outcomes.

Understanding the Two Faces of Model Drift

Model drift isn't a single, simple problem. It actually shows up in two distinct ways, and knowing the difference is crucial for figuring out why your model's performance is tanking.

-

Concept Drift: This is when the fundamental relationship between your inputs and what you’re trying to predict changes. Think about customer behavior, market trends, or even the economy—they all shift. For example, a model built to predict "popular fashion items" would be useless if it was trained before a new viral trend took over. The meaning of "popular" has changed.

-

Data Drift: This happens when the input data itself starts to look different from the data the model was trained on. Imagine a loan approval model trained mostly on data from one city. If the company suddenly expands nationwide, the new applicant data—with different income levels and credit histories—will have a completely different statistical profile. The inputs have changed, even if the definition of a "good loan" hasn't.

Key Metrics to Keep on Your Radar

To catch drift before it wrecks your results, you need a solid monitoring system. This means building dashboards and setting up alerts to track a few essential vital signs for your model.

Your Monitoring Checklist Should Include:

- Prediction Speed (Latency): How long does the model take to spit out a prediction? A sudden spike here could point to an infrastructure bottleneck or a problem with the incoming data.

- Error Rates: This is your most direct performance measure. Keep a sharp eye on metrics like accuracy, precision, and recall. Are they holding steady, or are they slowly creeping downward?

- Data Quality: Are you suddenly seeing a flood of missing values or data in weird formats? Garbage in, garbage out. Bad input data always leads to bad predictions, so this can be an early warning sign of a broken data pipeline.

- Prediction Distribution: Watch the model’s outputs. If a fraud detection model that normally flags 1% of transactions suddenly starts flagging 20%, you can bet something is very wrong.

By 2025, deploying machine learning models has moved far beyond small experiments into large-scale business operations. In fact, about 70% of global companies now use AI in some capacity, and nearly half have operationalized it across multiple departments. With 74% of these initiatives meeting or beating their ROI goals, the pressure to maintain model health and performance is immense. You can explore more about these AI and machine learning trends at hblabgroup.com.

Proactive monitoring isn't just a technical task; it's a security imperative. Making sure your model is behaving as expected is a core part of preventing misuse. For a deeper look into this, check out our guide on AI security best practices. Setting up automated alerts that ping your team the second a metric crosses a critical threshold isn't optional—it's what keeps your deployed model healthy, accurate, and valuable.

Got Questions About Model Deployment? Let's Clear Things Up.

As you start wrapping your head around machine learning model deployment, it's totally normal for questions to pop up. This final step in the ML lifecycle often feels like the most mysterious and complex part of the whole journey.

Let's cut through the confusion. Here are some straightforward answers to the most common questions people have, designed to give you the confidence to get your own models out into the world.

What Is the Hardest Part of Deploying an ML Model?

You might be surprised to hear this, but for most teams, the initial launch isn't the biggest hurdle. The real challenge is the ongoing maintenance.

The world doesn't stand still, and neither does your data. Over time, the live data your model sees in production can start to look different from the data it was trained on. This phenomenon, known as "model drift," slowly chips away at your model's accuracy until it's no longer reliable.

The truly tough part is building the systems to deal with that reality. Setting up robust monitoring to catch drift early, creating automated pipelines to retrain the model on new data, and keeping the whole thing secure is far more demanding than that first push to production. It's where data science truly has to shake hands with software engineering—the very heart of MLOps.

A Word From the Trenches: "The biggest mistake I see is teams treating deployment as an afterthought. They'll spend 99% of their energy chasing a high accuracy score and only think about how to get it live at the very end. That's a recipe for massive delays. You have to plan for the entire lifecycle from day one."

Is Deploying a Deep Learning Model Different?

Yes and no. The core principles are the same, but deep learning models absolutely bring their own unique set of headaches to the party.

For starters, they are often enormous. This size has a direct impact on a few critical things:

- Storage Costs: Bigger models mean bigger bills for storing them.

- Memory Needs: They demand a lot more RAM to run, which can get expensive fast.

- Prediction Speed (Latency): A huge model can take longer to spit out an answer, which is a total dealbreaker for real-time applications.

On top of that, they often need specialized hardware, like GPUs, to perform well, adding another layer of infrastructure complexity. To get around these issues, engineers use some clever optimization tricks.

Common Optimization Strategies:

- Model Quantization: This technique cleverly reduces the precision of the numbers in the model's weights (say, from a 32-bit float to an 8-bit integer). The result is a much smaller and faster model, usually with a barely noticeable drop in accuracy.

- Model Pruning: Think of this like carefully trimming a bonsai tree. It involves snipping away unnecessary connections within the neural network, which cuts down on both its size and the computing power it needs.

These strategies are non-negotiable when you're deploying on edge devices like smartphones, where every byte of memory and every millisecond of speed is precious.

Can I Deploy a Model Without Using the Cloud?

Absolutely. While the big cloud providers offer incredible scale and convenience, they aren't the only game in town. You can always deploy a model "on-premise," using your own company's servers and data centers.

This approach gives you total control over your hardware and, crucially, your data. For industries with iron-clad security and privacy rules, like healthcare or finance, this is often the only acceptable path.

Another popular non-cloud route is edge deployment. This is where the model runs directly on the end-user's device—think a smart camera, a factory sensor, or your phone. This is perfect for apps that need lightning-fast responses or have to work even when there's no internet connection, like a security camera doing real-time object detection.

What Are the Most Common Deployment Mistakes to Avoid?

Besides the big one—treating deployment as an afterthought—a few other classic traps catch teams all the time. Steering clear of these will save you a world of pain.

- Forgetting to Monitor: Launching a model without a way to track its performance is like flying blind. You'll have no idea it's making bad predictions until a customer complains or the business starts losing money.

- Not Planning for Scale: A model that runs beautifully for 10 users in a test environment might fall over and die when hit with traffic from 10,000 real users. You have to load-test your setup to make sure it can handle the pressure of production.

- Ignoring Dependencies: This one is subtle but deadly. If you don't lock down the specific versions of the libraries you used (like NumPy or TensorFlow), a simple package update could break your model in ways that are incredibly difficult to debug.

By thinking like an MLOps pro from the very start—focusing on monitoring, scale, and dependencies—you can dodge these common bullets and build a far more reliable home for your machine learning models.

At YourAI2Day, we are dedicated to helping you understand and apply AI with confidence. Whether you're a professional looking to sharpen your skills or a business ready to implement AI solutions, we provide the insights and tools you need. Explore more at https://www.yourai2day.com.