At its heart, the worry about artificial intelligence and privacy isn't really about robots taking over. It's about how AI systems gather, chew through, and act on massive amounts of our personal data—often without us fully understanding what's happening. It’s like having a personal assistant who remembers everything you’ve ever said or done, but you don't know who they're sharing that information with. This can lead to some serious risks, like constant surveillance, biased decisions made by algorithms, and the ever-present threat of major data breaches. It’s no wonder so many people feel like they’ve lost the remote control to their own digital lives.

What AI Means for Your Personal Privacy

We run into artificial intelligence constantly, whether it's Netflix guessing the next series you'll binge-watch or your map app finding a clever shortcut to avoid a traffic jam. These tools are incredibly convenient, but behind the curtain of helpfulness, a critical discussion about our personal information is taking shape.

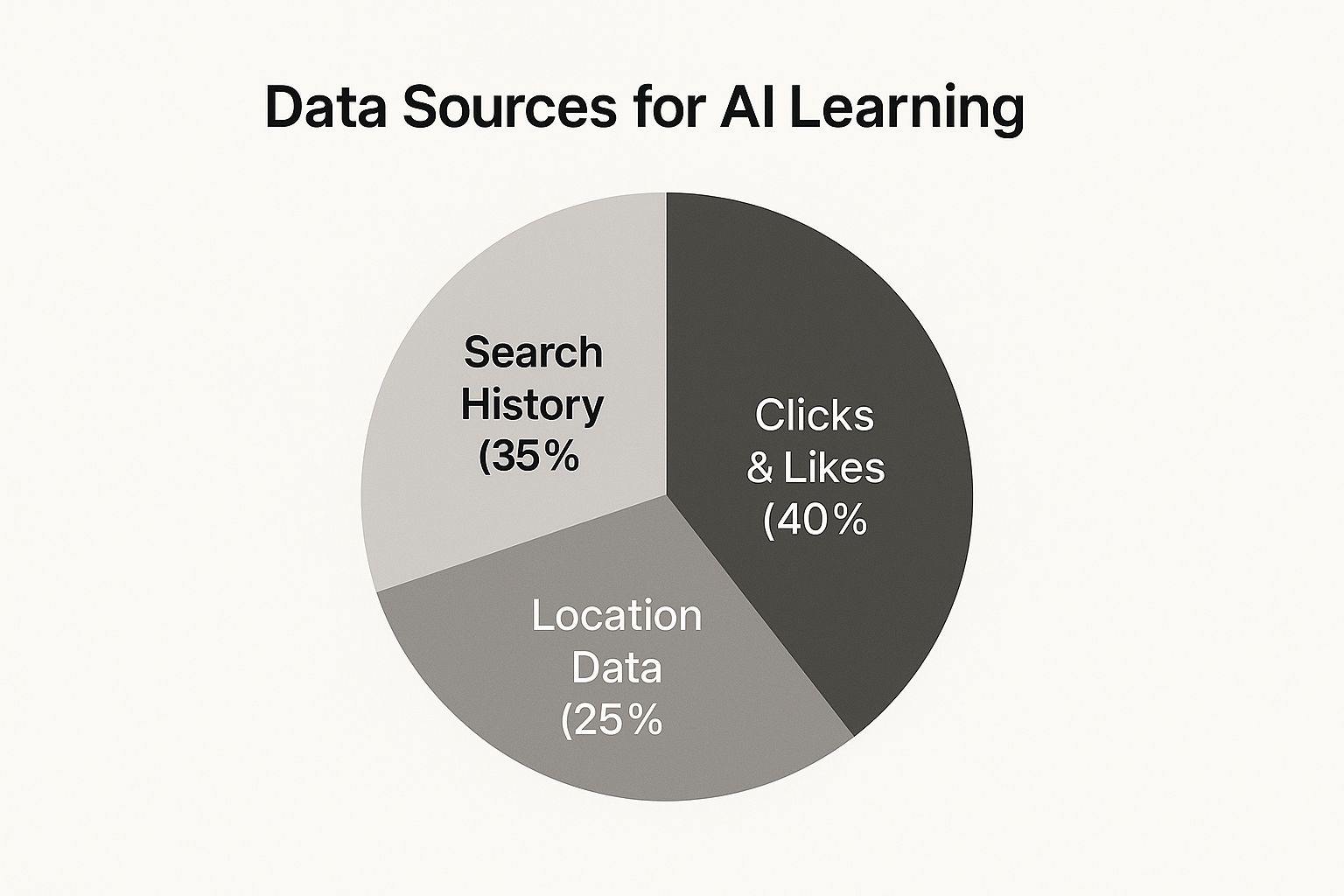

Fundamentally, AI learns from data. Don't picture a sci-fi villain; think of it more like an incredibly fast-learning student that spots patterns and uses them to make predictions. The "textbooks" it studies are compiled from our data: our clicks, likes, search queries, and even the photos we post online. This is where most privacy concerns about AI begin.

Why This Conversation Matters Now

The sheer speed and scale of AI are what make this privacy conversation so urgent. In the past, collecting data was often a more direct process. You filled out a form, you bought a product. Simple.

But today's AI systems can infer things about you from thousands of different data points, piecing together a profile you never consciously shared. For example, it might notice you browse recipes for gluten-free bread, look up symptoms of celiac disease, and follow certain health influencers, then correctly guess you have a specific dietary restriction without you ever stating it. It can connect the dots in ways that are hard to predict.

This isn't just a future problem; people are already wary. A recent study found that 57% of consumers globally believe AI is a real threat to their privacy, with 68% expressing concern about their online privacy in general. These aren't small numbers—they signal a widespread and growing discomfort with how our data is being used. You can learn more about these consumer AI privacy findings in the full report.

"Your job will not be taken by AI. It will be taken by a person who knows how to use AI." – Christina Inge, Marketing Expert

Christina Inge's take on this is spot-on. Getting a handle on AI is quickly becoming an essential skill, and a huge part of that is understanding the privacy trade-offs and knowing how to navigate them.

Think of this guide as your starting point. We're going to break down the actual risks in plain English and, more importantly, walk through the practical steps you can take to stay in charge of your information. My goal is to make this whole topic feel less intimidating by focusing on what truly matters.

How AI Systems Learn From Your Data

Have you ever felt your phone finish your thoughts? Those eerily on-point suggestions aren’t magic. They’re AI at work, fueled entirely by your digital footprint.

Imagine AI as an eager student studying from a textbook—your data. Every click, like, search and location ping adds another page. The more it “reads,” the clearer it sees your habits, preferences and daily routines.

This isn’t a one-off quiz; it’s an endless cycle of data collection and analysis. That’s how companies craft incredibly detailed profiles. They don’t just know you love running shoes—they know you clock 15 miles a week and tend to shop every Saturday morning.
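To make that cycle concrete, here is a minimal Python sketch of how a few logged events might be rolled up into the kind of profile described above. The event format and the `build_profile` helper are hypothetical. Real systems work with vastly larger logs and statistical models, not three simple aggregations.

```python
from collections import Counter
from datetime import date

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]

# Hypothetical event log: (event type, date, value).
# Runs record miles; purchases record the item bought.
events = [
    ("run_logged", date(2024, 6, 3), 5.0),
    ("run_logged", date(2024, 6, 5), 4.0),
    ("run_logged", date(2024, 6, 8), 6.0),
    ("purchase", date(2024, 6, 8), "running shoes"),
    ("purchase", date(2024, 6, 15), "energy gels"),
    ("purchase", date(2024, 6, 22), "running socks"),
]

def build_profile(events):
    """Aggregate raw events into a few inferred traits about the user."""
    runs = [(d, miles) for kind, d, miles in events if kind == "run_logged"]
    purchases = [(d, item) for kind, d, item in events if kind == "purchase"]

    # Distinct (ISO year, ISO week) pairs in which the user logged a run.
    weeks = {tuple(d.isocalendar())[:2] for d, _ in runs}
    total_miles = sum(miles for _, miles in runs)

    # Count which weekday the user shops on.
    shop_days = Counter(DAY_NAMES[d.weekday()] for d, _ in purchases)

    return {
        "avg_miles_per_week": total_miles / len(weeks) if weeks else 0.0,
        "usual_shopping_day": shop_days.most_common(1)[0][0] if shop_days else None,
        "interests": sorted(item for _, item in purchases),
    }

profile = build_profile(events)
```

Here `profile` works out to 15 miles a week with Saturday as the usual shopping day. No single event says that, but the aggregate does.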

The Digital Breadcrumbs You Leave Behind

Every action online drops a tiny marker. Alone, these digital breadcrumbs feel harmless. Gather millions, though, and an AI can assemble a remarkably detailed portrait of you.

AI collects these crumbs in two main ways:

- Direct Data: Information you share willingly—like when you fill out your name and birthday for a social media profile or answer a survey.

- Indirect Data: Behavior it observes—what you click on, how long you watch a video, which posts you scroll right past, and even the path your mouse takes across a webpage.

This visual highlights that clicks, likes, and search history supply the bulk of the training data for modern AI models.

From Raw Data To Personal Insights

How does a random click turn into an ad that feels almost psychic? It comes down to pattern-recognition algorithms. These systems sift through massive datasets to spot connections and forecast what you might do next.

For a clearer picture of how these systems work, see our detailed guide on deep learning vs. machine learning.

Here are a few real-world examples:

- Personalized Advertising: You search “best hiking boots for beginners.” Hours later, those exact boots pop up on your social feed because the AI linked your search intent with your browsing and social signals.

- Recommendation Engines: You stream a sci-fi flick. The platform compares that choice against the viewing habits of millions of other fans and can often predict your next pick with surprising accuracy. That's not a guess; it's a data-driven prediction based on what people with similar tastes watched next.

- Social Media Feeds: The posts you see first aren’t random. AI tracks what you stop to watch or like and shapes your feed to keep you scrolling.
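One classic way to do "fans like you also watched" matching is item-based collaborative filtering, where two titles count as similar when the same people watched both. The minimal Python sketch below uses made-up viewing histories and cosine similarity. It illustrates the general technique, not the system any particular streaming service uses.

```python
from math import sqrt

# Hypothetical viewing histories: user -> set of titles watched.
history = {
    "ana":   {"Dune", "Arrival", "Interstellar"},
    "ben":   {"Dune", "Arrival", "Blade Runner"},
    "chloe": {"Dune", "Interstellar", "Blade Runner"},
    "eli":   {"Dune", "Interstellar"},
    "dev":   {"Notting Hill", "Love Actually"},
    "you":   {"Dune"},
}

def viewers(title):
    """Set of users who watched a given title."""
    return {user for user, titles in history.items() if title in titles}

def similarity(a, b):
    """Cosine similarity between two titles, based on shared viewers."""
    va, vb = viewers(a), viewers(b)
    if not va or not vb:
        return 0.0
    return len(va & vb) / sqrt(len(va) * len(vb))

def recommend(user, k=2):
    """Rank unseen titles by their total similarity to what the user watched."""
    seen = history[user]
    candidates = set().union(*history.values()) - seen
    scores = {c: sum(similarity(c, s) for s in seen) for c in candidates}
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda c: (-scores[c], c))[:k]
```

`recommend("you")` returns `["Interstellar", "Arrival"]`. Interstellar ranks first because three of the four other Dune fans also watched it, while the romantic comedies score zero because no Dune fan watched them.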

“Your data is already out there; what AI is changing is simply the sophistication with which your data is being used.”

— Christina Inge, Marketing Expert & Harvard Instructor

That insight sums it up neatly. Data collection itself isn’t new. What’s changed is the speed and depth with which AI analyzes every detail—often without you even realizing it. This growing power is at the heart of today’s privacy debates.

The Top AI Privacy Risks You Need to Know

Now that we've seen how AI learns from data, let's get down to brass tacks. The phrase "privacy risk" can sound a little vague, but the dangers are very real and creep into our daily lives more than we realize.

Let's unpack the biggest privacy headaches that AI brings to the table. Think of this as your field guide for spotting the threats so you can start protecting yourself.

Data Re-Identification: When 'Anonymous' Isn't Anonymous

Companies love to tell us they use "anonymized" data, which sounds great. They strip out obvious things like your name and email, but AI is a master at connecting the dots. It can take multiple "anonymous" datasets—say, your location history, online shopping habits, and browsing activity—and piece them back together to figure out exactly who you are.

Suddenly, data that was supposed to be disconnected from you points right back to your front door. The promise of anonymity often falls apart under the power of modern algorithms.
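The textbook version of this attack is a simple join. Strip the names from a dataset but keep "quasi-identifiers" such as ZIP code, birth date, and sex, and anyone holding a second dataset with names and those same fields can line the two up. The minimal Python sketch below uses entirely made-up records. Real attacks also use fuzzy matching and many more signals, such as location traces.

```python
# Hypothetical "anonymized" dataset: names removed, quasi-identifiers kept.
health_records = [
    {"zip": "02138", "birth_date": "1961-07-31", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "02139", "birth_date": "1985-02-14", "sex": "M", "diagnosis": "asthma"},
]

# Hypothetical public dataset with names (think voter rolls or scraped profiles).
public_records = [
    {"name": "Jane Roe", "zip": "02138", "birth_date": "1961-07-31", "sex": "F"},
    {"name": "John Doe", "zip": "02140", "birth_date": "1985-02-14", "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")

def link(anonymous, public, keys=QUASI_IDENTIFIERS):
    """Re-identify anonymous rows whose quasi-identifiers match exactly one named person."""
    index = {}
    for person in public:
        key = tuple(person[k] for k in keys)
        index.setdefault(key, []).append(person["name"])

    matches = []
    for row in anonymous:
        names = index.get(tuple(row[k] for k in keys), [])
        if len(names) == 1:  # a unique match ties the record to a name
            matches.append((names[0], row["diagnosis"]))
    return matches

matches = link(health_records, public_records)
```

Only the first record is re-identified here (as Jane Roe, with her diagnosis) because John Doe's ZIP code differs. In real data, the combination of just a few such fields is often unique to one person.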

Constant Surveillance From Smart Devices

That smart speaker on your counter? Your fitness tracker? Many of these devices are always on: speakers listen for their wake word, and trackers log your movement, heart rate, and sleep around the clock. They offer real convenience, but they also create a steady feed of data about your private life, from your daily routines to snippets of conversation captured after an accidental activation.

Your smart assistant doesn't just hear you ask for a song; it can log when you wake up, when you leave the house, and who you call through it. Over time, this paints an incredibly detailed portrait of your life, one that’s far more valuable than you might think.

Algorithmic Bias and Unfair Decisions

Here’s a hard truth: AI systems are only as good as the data they're trained on. If that data is packed with historical human biases, the AI will learn those biases and, in many cases, make them even worse. This leads to genuinely unfair outcomes that can affect your life in major ways.

This isn't just a hypothetical problem. It’s happening right now:

- Hiring: An AI trained on résumés from a male-dominated field might automatically downgrade qualified female candidates.

- Loans: An algorithm might deny a loan based on an applicant's zip code, effectively discriminating against entire communities regardless of individual financial health.

This means you could be denied a job or a loan not based on your own merit, but because a flawed algorithm made a snap judgment. It’s a huge issue, especially as more companies look into AI automation for business processes.
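A common first check for this kind of proxy discrimination is to compare outcomes across groups, even when group membership is never fed into the model. The Python sketch below uses invented applicants and a deliberately flawed rule that penalizes one zip code. The 0.8 cutoff is the "four-fifths rule," a rough screening heuristic borrowed from U.S. employment-selection guidelines. Treat it here as an illustrative assumption, not a legal standard for lending.

```python
from collections import defaultdict

# Hypothetical applicants. The rule never looks at "group",
# but in this data zip code lines up perfectly with it.
applicants = [
    {"zip": "10001", "group": "A", "income": 70_000},
    {"zip": "10001", "group": "A", "income": 40_000},
    {"zip": "10001", "group": "A", "income": 55_000},
    {"zip": "10002", "group": "B", "income": 80_000},
    {"zip": "10002", "group": "B", "income": 72_000},
    {"zip": "10002", "group": "B", "income": 45_000},
]

# Hypothetical "high-risk" zip codes a model might learn from biased history.
HIGH_RISK_ZIPS = {"10002"}

def approve(applicant):
    """A flawed rule: applicants in a 'high-risk' zip need a much higher income."""
    threshold = 75_000 if applicant["zip"] in HIGH_RISK_ZIPS else 50_000
    return applicant["income"] >= threshold

def approval_rates(people):
    """Share of applicants approved within each group."""
    approved, total = defaultdict(int), defaultdict(int)
    for person in people:
        total[person["group"]] += 1
        approved[person["group"]] += approve(person)  # True counts as 1
    return {g: approved[g] / total[g] for g in total}

rates = approval_rates(applicants)
impact_ratio = min(rates.values()) / max(rates.values())
flagged = impact_ratio < 0.8  # four-fifths rule of thumb
```

Group A is approved two times out of three and group B once out of three, giving a ratio of 0.5, well under 0.8. Note that the group B applicant earning $72,000 is denied while a group A applicant earning $55,000 is approved.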

Expert Insight: "We have a very long way to go before we start correcting that bias. It is an absolute concern."

This highlights a critical point: algorithmic bias is a deep-rooted challenge, not a simple bug to be fixed. It's one of the most serious ethical problems in AI today.

Deepfakes and AI-Powered Misinformation

Generative AI can create astoundingly realistic—but completely fake—videos, images, and audio clips. These are called deepfakes. While sometimes used for harmless fun, they are also a powerful tool for spreading lies and causing harm.

Imagine a deepfake video of a CEO announcing a nonexistent product launch to manipulate stock prices, or a fabricated audio clip of a political candidate used to derail an election. The technology blurs the line between reality and fiction, making it dangerously easy to create convincing scams or destroy someone's reputation.

The Honeypot Problem: Centralized Data Pools

AI models are data-hungry. To train them, companies collect and store vast databases of user information. While valuable for the company, these massive data pools become a tempting target—a honeypot—for cybercriminals.

A single breach can expose the sensitive information of millions, and the broader risk picture is getting worse. According to the Stanford AI Index Report, reported AI-related incidents jumped 56.4% in just one year, to 233 cases. Combine that with the more than 1,700 data breaches disclosed in the first half of a recent year, and the picture becomes clear. These aren't just statistics; they represent real people whose privacy has been compromised. You can learn more about AI data privacy risks in the full report.

To make these abstract risks more concrete, let's look at how they show up in the technologies we use every day.

Common AI Privacy Risks and Real-World Scenarios

| Privacy Risk | What It Means | Everyday Example |
|---|---|---|
| Data Re-identification | AI pieces together "anonymous" data points to identify a specific person. | Your "anonymous" ride-sharing history is combined with public social media check-ins to reveal your home and work addresses. |
| Pervasive Surveillance | Smart devices constantly collect data about your environment and personal habits. | Your smart speaker records snippets of conversations, which are used to build a profile for highly targeted advertising. |
| Algorithmic Bias | AI systems make unfair or discriminatory decisions based on flawed training data. | A facial recognition system used by law enforcement has a higher error rate for women and people of color, leading to false identifications. |
| Misinformation | Generative AI is used to create convincing but fake content to deceive or manipulate. | A deepfake video appears online showing a public figure saying something inflammatory they never actually said. |
| Data Breach Vulnerability | Huge, centralized datasets used for AI training become prime targets for hackers. | A healthcare company's AI database is breached, exposing millions of sensitive patient records, diagnoses, and histories. |

These examples show that AI privacy risks aren't some distant, futuristic problem. They are embedded in the tools we rely on now, making awareness and proactive protection more important than ever.

Learning from Real-World AI Privacy Failures

Theory is one thing, but seeing how privacy failures impact real people is another. The abstract idea of data misuse becomes painfully clear when we look at specific cases where things went sideways. These stories are more than just headlines; they're powerful lessons about the human side of artificial intelligence privacy concerns.

https://www.youtube.com/embed/N82TgjKU9yE

By digging into these real-world examples, we can truly grasp what's at stake. They show just how easily personal information can be exploited when AI systems are rolled out without strong ethical guardrails or genuine user consent. It moves the conversation from "what if" to "what happened."

The Cambridge Analytica Wake-Up Call

If one event dragged AI-driven data misuse into the global spotlight, it was the Cambridge Analytica scandal. It all started with a third-party app that vacuumed up the data of as many as 87 million Facebook users, most of whom had no idea it was happening. We're not just talking about names and emails—the app gathered incredibly detailed information on personalities, social networks, and online habits.

Armed with this massive dataset, Cambridge Analytica built sophisticated psychographic profiles. These weren't just for market research; they were used to fuel AI algorithms that dished out hyper-targeted political ads, all designed to influence voters during the 2016 U.S. presidential election.

The fallout was enormous. It sparked worldwide outrage and forced a long-overdue reckoning over how social media platforms handle our data. It became the textbook case for how personal information, collected for one reason, could be weaponized for something else entirely.

When Smart Speakers Are Listening a Little Too Closely

Smart speakers and virtual assistants are everywhere now, offering hands-free convenience. But the biggest names in the game—including Amazon, Google, and Apple—have all been in the hot seat for how they handle audio recordings.

It came to light that human contractors were routinely listening to a small percentage of these voice recordings. While the companies insisted it was for quality control to make the AI better, the revelation set off major privacy alarms. People were, quite rightly, disturbed by the thought of strangers overhearing private family conversations, arguments, or sensitive moments in their own homes.

This situation highlights a critical disconnect: users often assume their interactions with AI are private and automated, when in fact, human oversight can introduce a deeply personal privacy risk.

The whole affair exposed a huge gap in transparency. It was a stark reminder that even everyday tech can cross serious privacy lines without clear and honest communication.

The Hidden Cost of "Free" AI Training

A newer, and perhaps more subtle, issue is popping up around how companies train their AI models. More often than not, customer data—everything from chats with support bots to photos you upload—is being used as the raw material to teach new AI systems. The catch? This detail is usually buried in the fine print of a sprawling terms of service agreement.

Here’s how it typically plays out:

- You Use an AI Tool: Maybe it's a "free" AI photo editor or a customer service chatbot.

- Your Data Becomes Training Material: The pictures you uploaded or the transcript of your conversation get fed back into the company’s models to make them smarter.

- Consent is Murky at Best: You technically clicked "agree," but you probably had no clue your personal data was being repurposed for R&D.

This trend gets to the heart of a fundamental challenge in the AI world. Countless users are unknowingly donating their personal information to build the very products they use. It raises big questions about ownership, consent, and whether users should be compensated, reminding us to always approach "free" services with a healthy dose of skepticism.

Your Digital Rights in the Age of AI

With all these potential minefields, it’s fair to wonder who’s actually setting the rules. Fortunately, you’re not left to navigate AI privacy concerns on your own. Governments across the globe are finally starting to build legal frameworks to give you more control over your digital footprint.

This isn’t about trying to make sense of dense legal texts. It’s about understanding your fundamental rights and knowing how to exercise them. Think of these regulations as a digital bill of rights, arming you with the power to hold companies accountable for how they treat your information.

Landmark Privacy Laws Explained

Two major pieces of legislation have really set the global standard for data protection, and their core ideas are popping up in new laws everywhere. Getting a handle on them is the first step toward taking back your privacy.

- GDPR (General Data Protection Regulation): This one comes out of Europe, and it's a big deal. It grants people some powerful rights, including the right to access whatever data a company has on you, the right to correct any mistakes, and—this is a huge one—the right to be forgotten, which lets you request your data be deleted.

- CCPA (California Consumer Privacy Act): Just as the name implies, this law covers California residents, but its ripple effects are felt across the United States. It provides similar rights, like the right to know what personal info is being collected and the right to opt out of the sale of your data.

It helps to think of these laws as your personal toolkit. If a company is using your data in a way that makes you uneasy, these regulations give you the legal leverage to intervene and demand a change. They fundamentally shift the balance of power, putting you back in control.

The Global Push for AI Regulation

And this movement isn't losing steam. As AI weaves itself deeper into our daily lives, lawmakers are scrambling to keep pace. This has kicked off a wave of new legislation aimed squarely at governing artificial intelligence.

Globally, legislative mentions of AI shot up by 21.3% across 75 countries in a recent year, marking a massive ninefold increase since 2016. This trend shows a clear and urgent recognition that the unique challenges of AI require dedicated rules. Discover more insights about these legislative trends on hai.stanford.edu.

All this global attention is good news for you. It means more countries are embracing principles like transparency and accountability, which forces companies to be more open about how their algorithms actually work. We can expect to see more protections that tackle specific AI risks, such as algorithmic bias and decisions made by machines without human oversight. These evolving laws are a direct answer to the growing public demand for stronger digital rights.

Practical Ways to Protect Your Data from AI

It's easy to feel a little lost in the conversation about AI and data privacy. But here’s the good news: you have a lot more control than you might realize. You don't have to be a tech wizard to put meaningful safeguards in place.

Think of it this way: you don't need to build a digital fortress. It's more about locking your doors and windows. A few conscious choices can make a world of difference. Let's walk through some simple habits you can start today.

Practice Smart Sharing Habits

The most powerful tool in your arsenal is data minimization. It sounds technical, but the concept is dead simple: only share what is absolutely necessary.

Before you fill out another online form or grant an app a new permission, just pause and ask, "Does this service really need this information to work?" A photo editor probably doesn't need your contact list. A weather app might need your city, but it doesn't need to track your every move. Getting into this habit shrinks your digital footprint, giving AI systems less to work with.
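That "does it really need this?" question maps neatly onto an allow-list: decide up front which fields each service needs, and share nothing else. Here is a minimal Python sketch in which the service names and their required fields are hypothetical, modeled on the examples above.

```python
# Hypothetical allow-lists: the only fields each service needs to work.
REQUIRED_FIELDS = {
    "weather_app": {"city"},
    "photo_editor": {"photo"},
}

def minimize(service, data):
    """Return only the fields a service needs; unknown services get nothing."""
    allowed = REQUIRED_FIELDS.get(service, set())
    return {field: value for field, value in data.items() if field in allowed}

my_data = {
    "city": "Denver",
    "gps_trace": [(39.74, -104.99), (39.75, -104.98)],
    "contacts": ["Sam", "Priya"],
    "photo": "beach.jpg",
}

weather_request = minimize("weather_app", my_data)
```

`weather_request` ends up as `{"city": "Denver"}`, so the weather app gets your city but never sees your GPS trace or contacts. Defaulting to an empty set for unknown services is the key design choice: sharing is opt-in, not opt-out.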

Take Control of Your Settings

Your devices and online accounts are already equipped with privacy controls—they're just waiting for you to use them. Seriously, take ten minutes and poke around the settings on your phone and most-used apps. You’ll be surprised at what you find.

Here’s a quick-start checklist:

- Review App Permissions: Go into your phone's privacy settings and see which apps can access your microphone, camera, location, and contacts. If an app doesn't need it to function, turn it off.

- Adjust Social Media Privacy: Switch your profiles to "private" or "friends only." Take a look at who can see your posts, tag you in photos, or view your personal info.

- Opt for Privacy-First Tools: Consider browsers like DuckDuckGo or Brave, which block many trackers by default. A good VPN adds another layer by hiding your IP address and browsing activity from your internet provider and local network, though it won't stop sites you're logged into from recognizing you.

As Christina Inge pointed out earlier, your data is already out there; what AI changes is the sophistication with which it's used. Managing your settings is all about limiting the raw material those sophisticated systems have to work with. And for business owners, figuring out how to balance powerful data tools with customer trust is a key challenge when implementing AI in business.

Think Before You Chat

Finally, be careful with AI chatbots and creative AI tools. Many of these services can use your conversations to train or improve their models, sometimes by default unless you opt out. Avoid feeding them sensitive personal information, confidential company data, or anything you wouldn't want saved in a massive database for others to analyze.

A simple rule of thumb: don't type anything into a chatbot that you wouldn't be comfortable shouting in a crowded room. Treat every chat as if it's being recorded for training purposes, because it may well be.
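If you do need a chatbot's help with text that contains personal details, one habit is to scrub obvious identifiers before pasting it in. Below is a minimal Python sketch using the standard `re` module. The patterns are simplistic assumptions that catch only common email, U.S.-style phone, and card-number formats. They will miss names, addresses, and plenty of other formats, so treat the result as a first pass rather than a guarantee.

```python
import re

# Illustrative patterns only; real PII detection needs far more than regexes.
# Longer card numbers are checked before the shorter phone pattern.
PATTERNS = [
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),            # 13 to 16 digits
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")),  # e.g. 303-555-0142
]

def redact(text):
    """Replace each detected identifier with a placeholder like [EMAIL]."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text

message = "I'm Jane, reach me at jane.roe@example.com or 303-555-0142."
safe_message = redact(message)
```

`safe_message` becomes `"I'm Jane, reach me at [EMAIL] or [PHONE]."` Notice that the name "Jane" slips through untouched, which is exactly the kind of gap simple patterns leave.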

Common Questions About AI and Your Privacy

Even after digging into the details, it's completely normal to have lingering questions about how artificial intelligence affects your privacy. It's a complicated topic, and the technology is moving at a breakneck pace. Let's tackle some of the most frequent questions to bring a little more clarity.

Is It Possible to Completely Stop AI From Using My Data?

The short answer is no, not really. Wiping your digital footprint completely is practically impossible these days, but you can significantly shrink it. The goal isn't to become a digital ghost; it's to take back control.

When you actively manage your privacy settings on social media and other apps, you get to dictate what their AI models can learn about you. Think of it this way: turning off location history for a retail app stops it from building a map of your daily life. Combining that with privacy-first browsers and a good VPN makes it much more difficult for AI systems to follow you around the internet. It's all about being a conscious, active manager of your own data.

Are All AI Systems a Threat to My Privacy?

Absolutely not. In fact, a growing number of responsible companies are embracing a concept called "Privacy by Design." This just means they build privacy protections into their technology from the ground up, rather than trying to tack them on as an afterthought.

As Christina Inge put it earlier, your data is already out there; what AI changes is the sophistication with which it's used. So the key question isn't whether your data exists, but how it's handled. A privacy-focused AI, for instance, might use data anonymization to scrub your personal details from the information it analyzes. Another great approach is on-device processing, where the AI computations happen directly on your phone instead of sending your raw data to a company's server. The real trick is learning to tell the difference between companies that genuinely respect your privacy and those that are just paying it lip service.
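One well-studied anonymization technique is k-anonymity. It coarsens the quasi-identifiers (ZIP code, age, sex) until every record is indistinguishable from at least k-1 others, so anyone cross-referencing outside data can only narrow a person down to a group. Here is a minimal Python sketch with hypothetical records and generalization rules. Note that k-anonymity reduces re-identification risk but does not eliminate it, for example when everyone in a group shares the same sensitive attribute.

```python
from collections import Counter

# Hypothetical records with names already removed.
records = [
    {"zip": "02138", "age": 34, "sex": "F"},
    {"zip": "02139", "age": 37, "sex": "F"},
    {"zip": "02141", "age": 52, "sex": "M"},
    {"zip": "02142", "age": 58, "sex": "M"},
]

def exact(record):
    """Quasi-identifiers as stored: every combination here is unique."""
    return (record["zip"], record["age"], record["sex"])

def generalize(record):
    """Keep a 3-digit ZIP prefix and a 10-year age band instead of exact values."""
    low = record["age"] // 10 * 10
    return (record["zip"][:3] + "**", f"{low}-{low + 9}", record["sex"])

def k_anonymity(rows):
    """Size of the smallest group of rows that share identical quasi-identifiers."""
    counts = Counter(rows)
    return min(counts.values()) if counts else 0

k_before = k_anonymity([exact(r) for r in records])
k_after = k_anonymity([generalize(r) for r in records])
```

Here `k_before` is 1 (each person is unique) and `k_after` is 2, so each record now hides among at least one other. The trade-off is lost precision, since an analyst can no longer see exact ages or full ZIP codes.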

What Does the Future of AI and Privacy Look Like?

Looking ahead, we're going to see a continuous push-and-pull between technological innovation and stronger privacy laws. As AI capabilities grow, you can bet that regulations will have to become more specific and powerful just to keep pace.

We can also expect to see more technologies that put you in the driver's seat, giving you direct control over your information—almost like a personal data wallet. The public is getting smarter and louder, and the demand for real transparency and clear ethical rules for AI developers isn't going away.

Staying on top of AI trends is crucial to understanding how they'll shape our lives. At YourAI2Day, we deliver the news and insights you need to navigate the future of artificial intelligence. Explore our resources today at https://www.yourai2day.com.