Welcome, AI explorers! Stepping into the world of artificial intelligence can feel like learning a new language, and the first vocabulary words you'll encounter are "machine learning frameworks." Think of these as your complete workshop—packed with tools, blueprints, and pre-built components that let you create amazing things without having to forge every screw from scratch. Whether you're a curious beginner wondering what all the buzz is about, or a budding developer ready to build your first model, choosing the right framework is your most important first step.

But with so many options, where do you start? From industry giants like TensorFlow and PyTorch to specialized tools for specific jobs, the landscape can be overwhelming. This is where our guide comes in. We’ll cut through the noise to give you a clear, practical breakdown of the top machine learning frameworks and platforms essential for any AI project today.

This isn't just another list. We'll skip the dry, technical jargon and focus on what really matters: what each tool does best, who it's for, and how to get started quickly. We've included insights from experts, practical examples of how these tools are used in the real world, and direct links to get you going. Ready to find the perfect toolkit for your next project? Let's dive in.

1. TensorFlow (Official)

TensorFlow is one of the most foundational machine learning frameworks, and its official website is more than just a place to download the library: it's an educational hub and resource center. Developed by Google, it is a natural starting point for anyone serious about deep learning. Think of it as the heavy-duty machinery of the AI world: powerful, reliable, and capable of handling industrial-scale projects.

The user experience is clean and structured, guiding you through a vast ecosystem that includes TensorBoard for visualization, TensorFlow Extended (TFX) for production pipelines, and seamless Keras integration. For developers, the site provides meticulously curated installation commands for various environments, including CPU, GPU, and WSL2, which is now the recommended path for Windows users needing GPU acceleration with versions beyond 2.10.

Key Features & Offerings

- Comprehensive Guides: The platform hosts extensive tutorials and a rich model zoo. For a practical example, you can grab a pre-trained image classification model like MobileNetV2 and have it identifying cats in your photos in just a few lines of code.

- Installation Support: Detailed compatibility matrices and step-by-step guides ensure you can install the correct version for your specific hardware and software, a common pain point for newcomers.

- Broad Ecosystem: Access to integrated tools like TensorBoard (for visualizing how your model is learning) and TFX (for building robust, automated pipelines that retrain your models on new data) is readily available.

Expert Opinion: Dr. Anya Sharma, an AI consultant, says, "For production-grade models that need to be reliable and scalable, TensorFlow is still my top choice. Its ecosystem is built for deployment. The versioned wheels and detailed installation recipes for CUDA and cuDNN have saved my team countless hours of debugging."

Website: https://www.tensorflow.org

2. PyTorch (Official)

Emerging from Meta AI, PyTorch has rapidly become a favorite among researchers and developers, and its official website is a masterclass in usability for machine learning frameworks. If TensorFlow is the industrial machinery, PyTorch is the agile, modern workshop beloved by artisans and innovators. It's celebrated for its Python-first design, which feels intuitive and makes the process of building and experimenting with new ideas incredibly fast.

The standout feature is its brilliant interactive installation matrix on the homepage. Users can select their OS, package manager (like Pip or Conda), compute platform (CUDA, ROCm, or CPU), and desired stability (stable or nightly), and the site instantly generates the exact command needed for a clean installation. This removes guesswork and significantly lowers the barrier to entry, making it an excellent resource for anyone starting their deep learning journey or managing multiple complex environments.
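Once the generated command has run, a quick sanity check can confirm which compute backend the install actually picked up. The snippet below is a minimal sketch, assuming PyTorch is already installed; it falls back to the CPU when no GPU is visible (ROCm builds are also reported through the torch.cuda API).

```python
import torch

# Report the installed build and whether a GPU backend is visible.
print("PyTorch version:", torch.__version__)
print("GPU available:", torch.cuda.is_available())

# Pick the GPU when present, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Run a small matrix multiply on the chosen device as a smoke test.
a = torch.ones(2, 3, device=device)
b = torch.ones(3, 4, device=device)
product = a @ b
print(product.shape, product.device)
```

If the second line prints False on a machine with a GPU, the usual culprit is a CPU-only build, which is exactly the mismatch the installer matrix is meant to prevent.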

Key Features & Offerings

- Interactive Installer: Generates precise installation commands tailored to your specific system, including OS, package manager, and compute backend (CUDA/ROCm).

- Comprehensive Ecosystem: Provides direct access to a rich ecosystem of libraries like TorchVision, TorchAudio, and TorchText. For example, with just a few imports from TorchVision, you can load a model like ResNet50 that's already been trained on millions of images.

- LibTorch C++ Support: Offers clear documentation and downloads for LibTorch, the C++ distribution for high-performance, low-latency production deployments.

Expert Opinion: "The PyTorch website's interactive installer is the gold standard," notes independent ML engineer Ben Carter. "When I need to spin up a new environment with a specific CUDA version, it gives me the exact pip or conda command I need in seconds. It’s a simple feature that respects the developer's time."

Website: https://pytorch.org

3. Keras (Official)

As one of the most user-friendly machine learning frameworks, Keras.io is the official gateway to a high-level API designed for fast experimentation. Think of Keras as the friendly face of deep learning. It's designed to be approachable and easy to learn, letting you build powerful neural networks without getting bogged down in complex details. Keras now works seamlessly on top of TensorFlow, JAX, or PyTorch, making it incredibly versatile.

The platform is meticulously organized around education, with a clean interface that guides users from fundamental concepts to advanced applications. Its strength lies in its simple design patterns. For instance, building a basic image classifier is as simple as stacking layers together like Lego blocks: model = keras.Sequential([layers.Dense(64, activation='relu'), ...]). This clarity makes it a favorite for teaching and rapid prototyping.
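To make the "stacking layers" idea concrete, here is a minimal sketch of a complete Sequential workflow. It assumes Keras is installed with a working backend, and it uses random toy data (20 features, 3 classes) rather than real images, so the layer sizes and the data are illustrative assumptions, not anything prescribed by the Keras site.

```python
import numpy as np
import keras
from keras import layers

# Toy data: 256 random 20-feature rows with labels from 3 classes (illustrative only).
rng = np.random.default_rng(0)
x_train = rng.normal(size=(256, 20)).astype("float32")
y_train = rng.integers(0, 3, size=(256,))

# Stack layers in order: input -> hidden layer -> class probabilities.
model = keras.Sequential([
    keras.Input(shape=(20,)),
    layers.Dense(64, activation="relu"),
    layers.Dense(3, activation="softmax"),
])

# Integer labels pair with the sparse categorical cross-entropy loss.
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])
model.fit(x_train, y_train, epochs=2, batch_size=32, verbose=0)

# Each output row is a probability distribution over the 3 classes.
probabilities = model.predict(x_train[:5], verbose=0)
print(probabilities.shape)
```

Because the data is random, the accuracy is meaningless here; the point is only the define, compile, fit, predict sequence.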

Key Features & Offerings

- Multi-Backend Support: The site provides clear guides on how to switch between TensorFlow, JAX, and PyTorch backends, offering unprecedented flexibility for deployment and research.

- Rich Educational Content: An extensive collection of tutorials, examples, and API documentation makes the learning curve incredibly gentle for beginners.

- KerasHub Model Repository: Access a growing library of pre-trained models that can be integrated into projects with just a few lines of code, perfect for transfer learning.

Expert Opinion: "For anyone starting in deep learning, I always recommend Keras.io," says AI educator Maria Flores. "The website's tutorials on the Functional API are fantastic for understanding how to build complex, non-linear models without getting lost in boilerplate code. It’s all about building intuition fast."

Website: https://keras.io

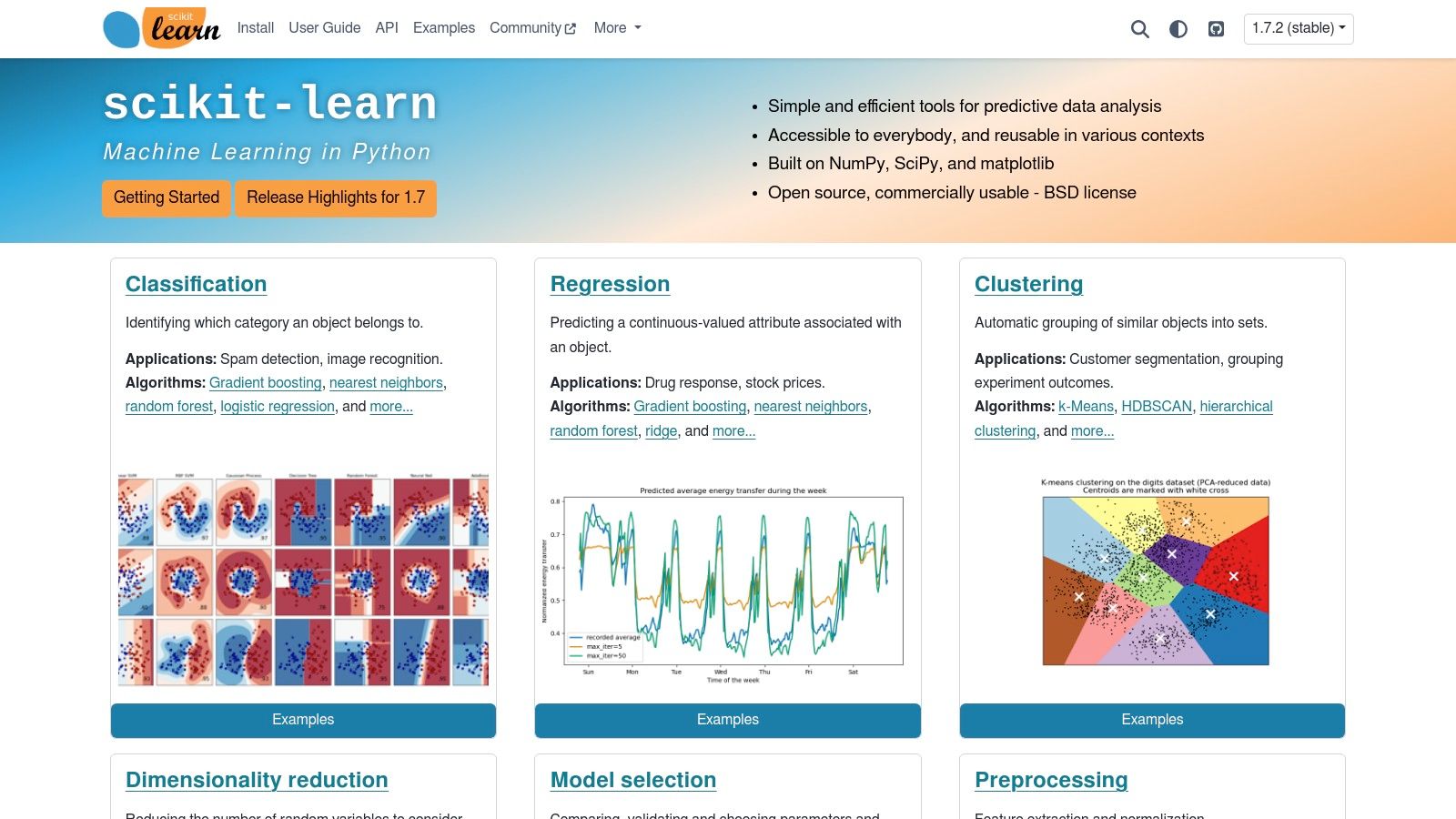

4. scikit-learn (Official)

scikit-learn is the undisputed king of classical machine learning in Python, and its official website is the ultimate encyclopedia for practitioners. While others focus on flashy deep learning, scikit-learn is the reliable workhorse for the everyday data problems that businesses face. It's the go-to resource for fundamental algorithms like regression, classification, and clustering.

The user experience is built around clarity and accessibility. Need to predict customer churn from a spreadsheet? You can quickly find the RandomForestClassifier, see a code example of how to train it (model.fit(X_train, y_train)), and understand its parameters, all on one page. This practical, example-driven approach makes it an indispensable learning tool for students and a reliable reference for data scientists.
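As a rough illustration of that workflow, the sketch below trains a RandomForestClassifier and scores it on held-out rows. A real churn spreadsheet is not available here, so it assumes a synthetic table from make_classification as a stand-in; the sample counts and feature counts are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in for a churn table: 500 customers, 10 numeric features, binary label.
X, y = make_classification(n_samples=500, n_features=10, n_informative=5, random_state=0)

# Hold out 20% of the rows to estimate how the model does on unseen customers.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

predictions = model.predict(X_test)
accuracy = model.score(X_test, y_test)
print(f"Held-out accuracy: {accuracy:.2f}")
```

With real data, the only lines that change are the ones that build X and y, for example by loading the spreadsheet into a table and separating the label column.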

Key Features & Offerings

- Consistent API: The site thoroughly documents its elegant and consistent estimator API (fit/predict/transform), which simplifies the process of swapping algorithms and building complex pipelines.

- Model Selection Tools: It provides extensive guides on using powerful tools like GridSearchCV and RandomizedSearchCV for automatically finding the best settings for your model.

- User Guides and Examples: The platform offers a rich "User Guide" that visually compares algorithms, helping you choose the right tool for your specific problem.
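The consistent estimator API and the model selection tools combine naturally. The following is a small sketch, again on assumed synthetic data, of a Pipeline whose regularization strength is tuned with GridSearchCV; the step names "scale" and "clf" and the grid values are illustrative choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=300, n_features=8, random_state=0)

# A transformer (fit/transform) followed by a classifier (fit/predict).
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Parameters of a pipeline step are addressed as <step name>__<parameter>.
param_grid = {"clf__C": [0.01, 0.1, 1.0, 10.0]}

# 5-fold cross-validation over every value in the grid.
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X, y)

print(search.best_params_, round(search.best_score_, 3))
```

Swapping LogisticRegression for another classifier only requires changing the "clf" step and the grid keys, which is the practical payoff of the shared interface.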

Expert Opinion: "For any tabular data problem, my first stop is the scikit-learn documentation," shares data scientist Leo Kim. "The examples are so practical that I can usually adapt a snippet for a new project in minutes. It's the gold standard for accessible, reliable ML resources."

Website: https://scikit-learn.org

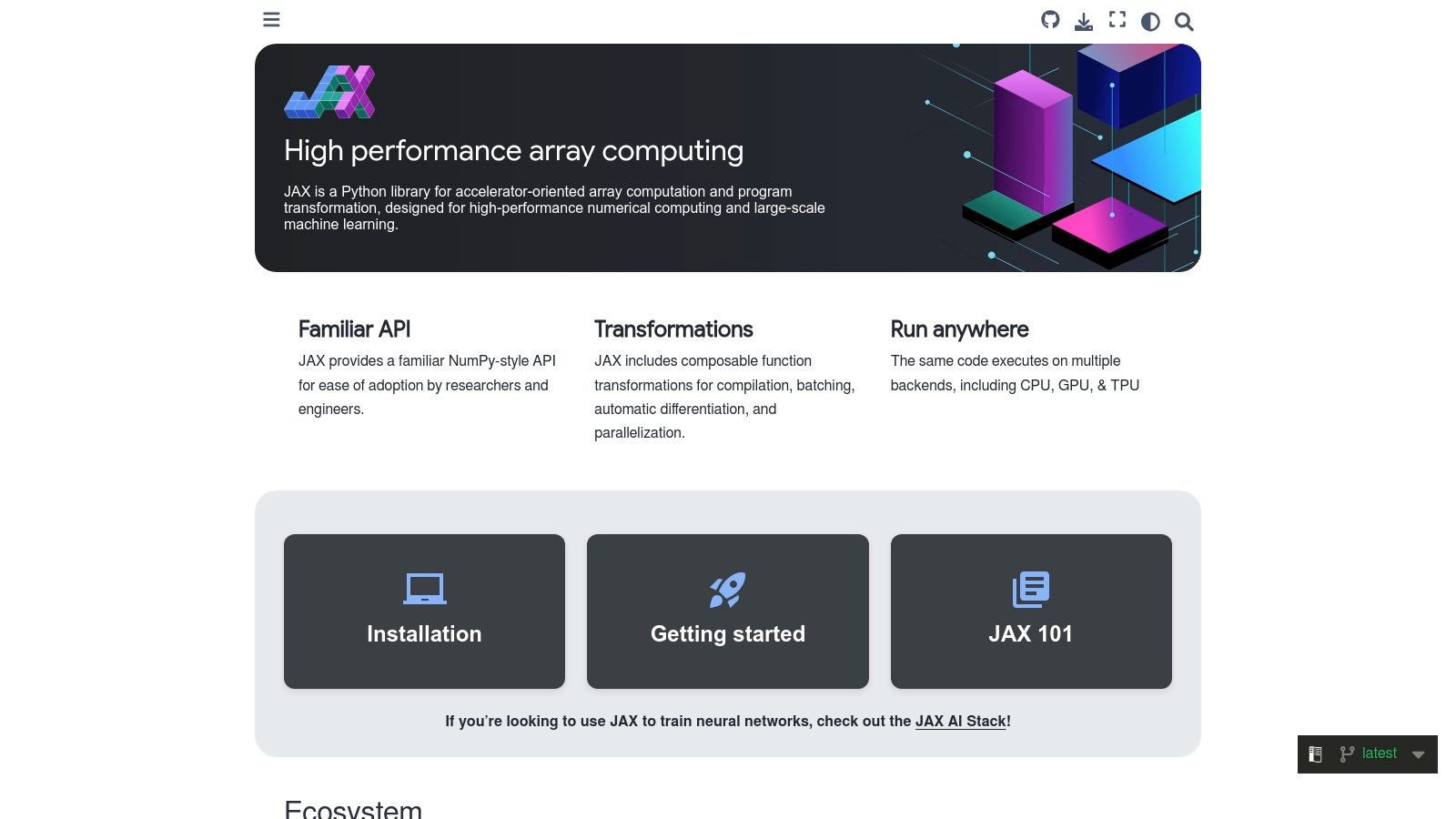

5. JAX (Official Docs)

JAX stands out among machine learning frameworks not as a full-featured ecosystem, but as a high-performance numerical computing library designed for speed and research. Think of it as a high-performance engine for machine learning. Developed by Google, its official documentation site is for those who need to push the limits of performance on GPUs and TPUs. It's a favorite in research labs for its speed and flexibility.

The user experience is direct and to the point. The homepage presents clear installation commands for CPU, NVIDIA CUDA, and Google's TPUs. Rather than a vast model zoo, the focus is on mastering JAX's powerful primitives. For example, you can take a standard Python function and instantly make it run faster on a GPU by just adding a @jax.jit decorator before it. This level of control is what makes JAX so powerful for custom research.
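Here is a minimal sketch of the decorator in use, assuming JAX is installed. The function name and input are hypothetical, and the code runs on whatever default device JAX finds (CPU if no accelerator is present); any speed-up depends on the function and hardware.

```python
import jax
import jax.numpy as jnp

@jax.jit  # compile the function with XLA the first time it is called
def mean_of_squares(x):
    return jnp.sum(x ** 2) / x.shape[0]

x = jnp.arange(4.0)          # [0., 1., 2., 3.]
result = mean_of_squares(x)  # (0 + 1 + 4 + 9) / 4
print(result)                # 3.5
```

The first call pays a one-time compilation cost; later calls with the same input shape and dtype reuse the compiled version.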

Key Features & Offerings

- Targeted Installation Guides: Provides specific pip commands with extras for CUDA 12/13 and TPU installations, which is crucial for matching drivers to the library version.

- Performance-Oriented Primitives: The documentation is built around explaining composable functions like jit (just-in-time compilation), vmap (automatic vectorization), and grad (automatic differentiation).

- In-Depth Tutorials: Offers sharp, concise tutorials on core concepts. Its utility in specialized fields is also growing; for instance, you can explore how JAX is used for differential privacy at scale.
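The other two primitives follow the same pattern of wrapping an ordinary function. The sketch below, which assumes JAX is installed and uses a hypothetical toy loss, shows grad producing a gradient function and vmap mapping the loss over a batch without an explicit loop.

```python
import jax
import jax.numpy as jnp

def loss(w, x):
    # Toy loss: sum of squared element-wise products.
    return jnp.sum((w * x) ** 2)

w = jnp.array([1.0, 2.0])
x = jnp.array([3.0, 4.0])

# grad differentiates with respect to the first argument (w) by default.
grad_loss = jax.grad(loss)
g = grad_loss(w, x)  # analytically 2 * w * x**2 = [18., 64.]

# vmap evaluates the loss for each row of xs while sharing the same w.
batched_loss = jax.vmap(loss, in_axes=(None, 0))
xs = jnp.array([[3.0, 4.0], [1.0, 1.0]])
losses = batched_loss(w, xs)  # [73., 5.]

print(g, losses)
```

These transformations compose, so a gradient function can itself be vectorized with vmap and compiled with jit.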

Expert Opinion: "The JAX docs are my bible for custom research models," says research scientist Dr. Evelyn Reed. "Its 'gotchas' section is incredibly honest and has saved me from common pitfalls, especially around pure functions. It's built for those who love to get their hands dirty with the math."

Website: https://docs.jax.dev

6. ONNX Runtime (Microsoft, Official)

As a pivotal tool in the world of machine learning frameworks, the official ONNX Runtime website is the central hub for a high-performance inference engine. It's not for building models, but for running them anywhere. Think of it as a universal adapter for your AI models. You can train a model in PyTorch, convert it to the ONNX format, and then use ONNX Runtime to run it super-fast on a Windows PC, a Linux server, or even in a web browser.

The website presents a clean, developer-focused experience, providing clear installation commands for its numerous builds. This focus on cross-platform compatibility and hardware acceleration makes it a go-to solution for production environments. For those working with generative AI, specialized GenAI packages are also highlighted. This makes it a key component in a robust machine learning model deployment strategy.

Key Features & Offerings

- Broad Hardware Acceleration: Provides pre-built packages to leverage GPUs and other accelerators on different systems, from cloud servers to edge devices.

- Multi-Language Support: Extensive API bindings for Python, C++, C#, Java, and JavaScript allow integration into nearly any application stack.

- Targeted Builds: Offers specialized runtimes for web (via WebAssembly), mobile, and even training, giving developers flexibility for any use case.

Expert Opinion: MLOps specialist Chloe Davis states, "ONNX Runtime is my secret weapon for deployment. I can train a model in PyTorch, convert it to ONNX, and then run it almost anywhere with near-native performance. The website's clear pip install matrix is a lifesaver for matching the right build to my target hardware."

Website: https://onnxruntime.ai

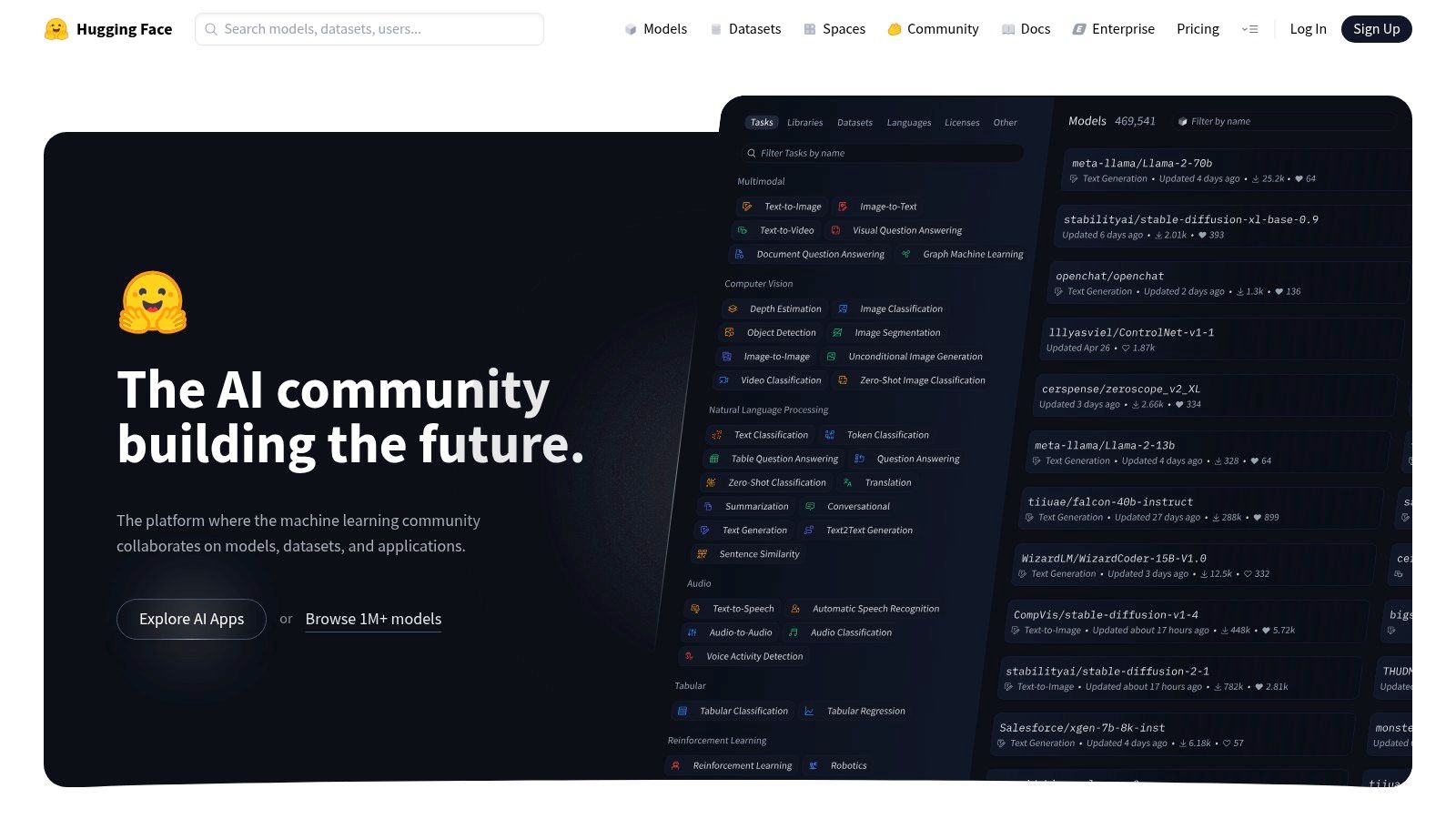

7. Hugging Face (Hub, Libraries, and Services)

While not a traditional framework itself, Hugging Face has become the indispensable central hub for the modern NLP and transformer-based machine learning frameworks ecosystem. It's like a combination of a social network, a library, and an app store for AI. It is the go-to platform for discovering, downloading, and deploying over a million model checkpoints, datasets, and interactive demos.

The user experience is designed for collaboration and discovery. Want a model that can summarize text? You can search for one, test it live in your browser on the website, and then get the exact Python code to use it in your own project—often in less than five lines. This has fundamentally democratized access to state-of-the-art AI. For those looking to take models from experiment to production, the platform offers Spaces for building live demos and managed Inference Endpoints.

Key Features & Offerings

- Massive Model Hub: Access an unparalleled collection of pre-trained models and datasets, complete with "model cards" that explain their use cases and limitations.

- Framework-Agnostic Libraries: The popular Transformers and Diffusers libraries provide a simple way to use cutting-edge models without being locked into a single framework.

- Integrated Deployment Tools: Easily deploy models as interactive web apps using Spaces or as scalable production APIs with Inference Endpoints. You can learn more about fine-tuning these models to adapt them for specific tasks.

Expert Opinion: "Hugging Face is where I start any new NLP project," remarks AI startup founder Alex Rivera. "I can find a state-of-the-art baseline model, fine-tune it on my data, and deploy a demo app in hours, not weeks. It has completely changed the pace of AI development."

Website: https://huggingface.co

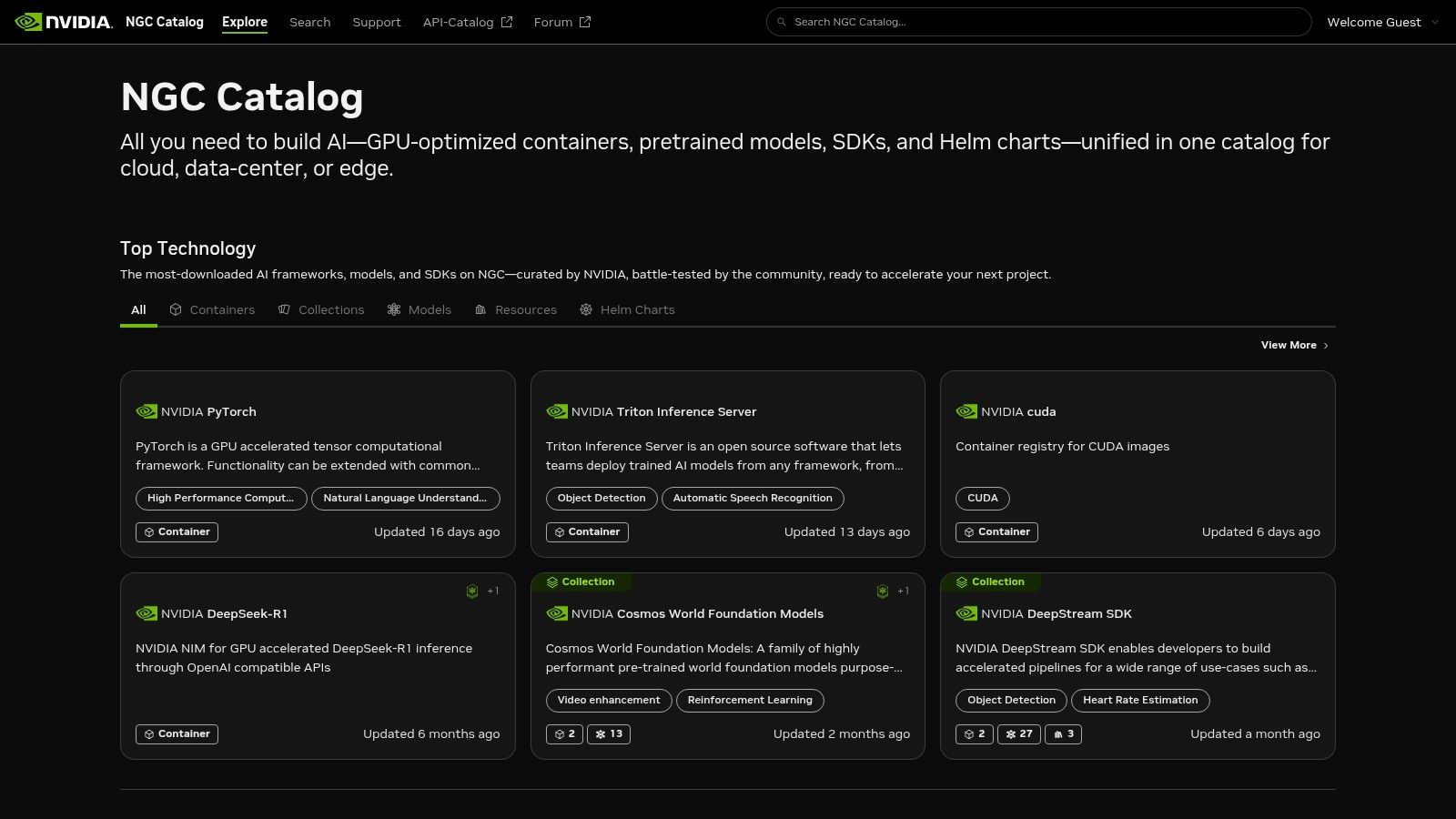

8. NVIDIA NGC Catalog (Official)

For practitioners running workloads on NVIDIA GPUs, the NGC Catalog is an essential resource that solves one of the biggest headaches in ML: environment setup. It's like having a master mechanic prepare your high-performance race car for you. Instead of you having to install and configure all the parts (CUDA libraries, frameworks, and their dependencies), NGC gives you a perfectly tuned, ready-to-race container.

The platform is designed to get you from zero to training in minutes. Instead of manually installing CUDA libraries and frameworks, you simply pull a ready-to-use Docker image that is optimized for NVIDIA hardware. A practical example is running a single command: docker pull nvcr.io/nvidia/pytorch:24.06-py3. This one line gives you a complete environment with PyTorch, CUDA, and cuDNN already matched to each other, saving you from a world of compatibility pain. The host machine still needs a compatible NVIDIA driver and the NVIDIA Container Toolkit, because the GPU driver is not part of the container image.

Key Features & Offerings

- GPU-Optimized Containers: Monthly updated Docker containers with pre-integrated CUDA/cuDNN stacks ensure you have a stable, high-performance environment for any major framework.

- Pre-trained Models & SDKs: A vast collection of models and industry-specific software development kits (SDKs) that can be used as a foundation for new applications.

- Multi-Cloud & On-Prem Support: Images can be deployed seamlessly across major cloud platforms (AWS, Azure, GCP) or on-premises hardware like DGX systems.

Expert Opinion: "NGC is a lifesaver," says cloud architect Sam Jones. "Before, I'd spend a day or two fighting with driver versions. Now, I just run docker pull and I have a perfectly tuned environment ready for training. It's the cleanest way to get started on a new GPU-powered project."

Website: https://ngc.nvidia.com

9. Anaconda (Distribution and Cloud Notebooks)

While not a framework itself, Anaconda is an essential distribution platform that radically simplifies how developers access and manage various machine learning frameworks. It acts as a universal installer and environment manager, solving one of the biggest hurdles for beginners: dependency conflicts. Think of it as a smart toolbox that keeps all your tools separate and organized, so the wrench for one project doesn't get mixed up with the screwdriver from another.

The platform offers a clean user interface through the Anaconda Navigator and a powerful command-line tool. You can create a self-contained "environment" for each project. For example, you might run conda create -n project1 tensorflow and conda create -n project2 pytorch, keeping both projects completely isolated and preventing version conflicts. This is a lifesaver for anyone working on multiple projects at once.

Key Features & Offerings

- Effortless Environment Management: The conda tool allows users to create, save, load, and switch between environments with just a few commands, isolating project dependencies.

- Curated Repositories: Access thousands of data science and ML packages from Anaconda's repositories and conda-forge, all tested for compatibility.

- Anaconda Cloud: Offers hosted notebooks, allowing users to run code and share projects without local setup, with quotas based on subscription plans.

- Clear Licensing: Free for individual use, students, and small businesses, with paid options required for commercial use in organizations with over 200 employees.

Expert Opinion: "For anyone starting in data science, I always recommend Anaconda first," says university professor Dr. Chen. "It eliminates the nightmare of manual package and dependency management, letting you focus on learning the frameworks, not fighting with your setup."

Website: https://www.anaconda.com

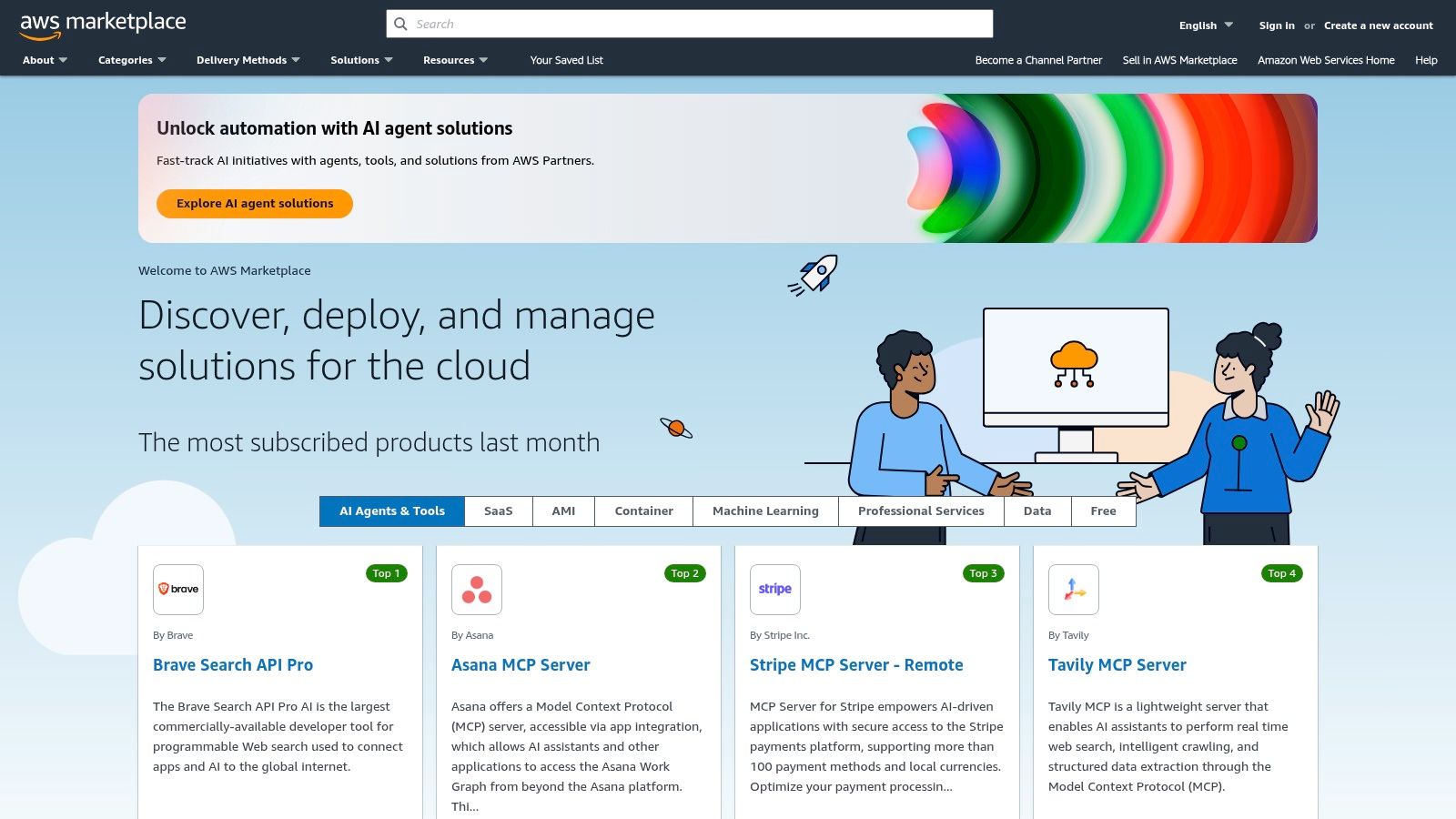

10. AWS Marketplace (Deep Learning AMIs/Containers)

For developers who need to get a GPU-accelerated environment running in the cloud without delay, the AWS Marketplace is the most direct path. It offers pre-configured Deep Learning AMIs (Amazon Machine Images) and containers, essentially providing a one-click launchpad for popular machine learning frameworks. Think of it as renting a fully-equipped, high-performance workshop instead of building one yourself. You can walk in and start working immediately.

The platform is designed for both individual developers and large enterprises. In a few clicks, you can launch a powerful EC2 instance with your chosen framework (like PyTorch or TensorFlow), all NVIDIA drivers, and necessary libraries already installed and optimized. This approach streamlines setup and ensures your environment is fine-tuned for performance from the start, saving hours of manual configuration.

Key Features & Offerings

- Pre-built Environments: Launch fully configured Deep Learning AMIs and Containers with one-click subscriptions, eliminating manual installation and driver management.

- Framework Variety: Access ready-to-use environments for TensorFlow, PyTorch, JAX, ONNX Runtime, and more, all optimized for performance.

- Broad Hardware Support: Deploy on a vast selection of EC2 GPU instance types, giving you the flexibility to choose the right hardware for your model's needs.

- Integrated Billing: Costs are consolidated into your existing AWS bill, simplifying expense management and allowing for enterprise purchasing models.

Expert Opinion: "The AWS Marketplace is my secret weapon for rapid prototyping," shares consultant Aisha Bakare. "When a client needs a proof-of-concept, I can spin up a fully optimized PyTorch environment on a powerful GPU instance in under 15 minutes, skipping hours of setup."

Website: https://aws.amazon.com/marketplace

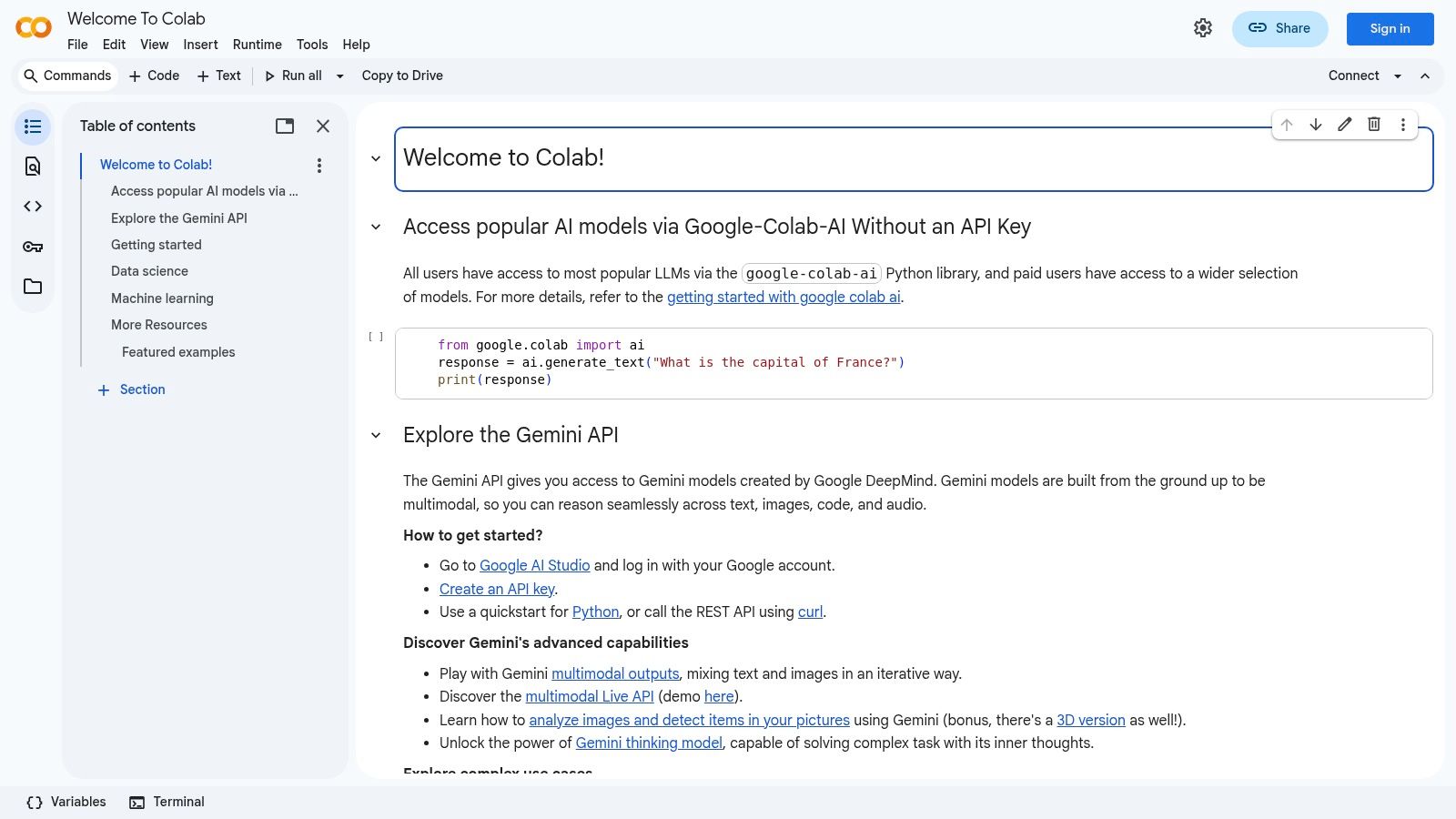

11. Google Colab (Hosted Notebooks with Frameworks Preinstalled)

While not a framework itself, Google Colab is an indispensable platform for anyone working with machine learning frameworks. It provides a free, browser-based Jupyter notebook environment where major libraries like TensorFlow, PyTorch, and scikit-learn come preinstalled. It's like having a free, powerful laptop for AI that you can access from anywhere. This makes it the perfect starting point for beginners, educators, and researchers looking to prototype ideas quickly.

The user experience is seamless. Notebooks are saved directly to Google Drive, which simplifies sharing and collaboration. The killer feature is free access to hardware accelerators. For a student learning to train an image classifier, this means they can use a powerful GPU for free to train their model in minutes instead of hours on their personal computer. Paid tiers like Colab Pro offer longer runtimes and even better hardware.

Key Features & Offerings

- Zero-Setup Environment: Instantly access a fully configured environment with popular ML libraries ready to use, removing the barrier of complex local installations.

- Hardware Acceleration: Offers free (though limited) access to NVIDIA GPUs and Google's own TPUs, which dramatically speeds up model training.

- Google Drive Integration: Notebooks and datasets can be easily mounted and saved to Google Drive, creating a portable and collaborative workflow.

Expert Opinion: "Colab is my go-to for workshops and tutorials," notes data science bootcamp instructor Tim Gorman. "I can share a link, and everyone has the exact same environment with GPU access, regardless of their personal computer's specs. It has democratized machine learning education."

Website: https://colab.research.google.com

12. Apple Core ML (Official Developer Site)

For developers building apps within the Apple ecosystem, the official Core ML developer site is the essential destination for on-device machine learning. Unlike cloud-based machine learning frameworks, Core ML is designed for fast, private, and efficient AI directly on iPhones, iPads, and Macs. This site provides the tools and documentation needed to integrate sophisticated models into applications while leveraging Apple's custom silicon, like the Neural Engine, for amazing performance.

The user experience is clean, well-organized, and developer-centric. A great practical example is how it helps developers build apps with real-time camera effects. You can train a style transfer model in PyTorch, use Apple's coremltools to convert it, and then drop it into your iOS app to apply artistic filters to a live video feed, all happening instantly on the device without sending any data to the cloud.

Key Features & Offerings

- On-Device Performance: Provides comprehensive guides on leveraging device hardware (CPU, GPU, and Apple Neural Engine) for high-speed, low-latency inference that respects user privacy.

- Model Conversion & Integration: Features extensive documentation for coremltools, the Python package for converting models from other frameworks into the Core ML format.

- Performance Analysis: The site explains how to use Xcode Instruments to get detailed performance reports, helping developers optimize model size and speed specifically for Apple hardware.

Expert Opinion: "When deploying a model to an iPhone, Core ML is non-negotiable," says iOS developer Sarah Jenkins. "The official site is my bible for understanding the conversion process. The guidance on model quantization (making models smaller) saved my app from being too large and slow."

Website: https://developer.apple.com/machine-learning/core-ml/

Top 12 ML Frameworks Comparison

| Tool / Platform | Core & Key Features ✨ | Quality / Ease ★ | Pricing / Value 💰 | Target Audience 👥 | Standout 🏆 |
|---|---|---|---|---|---|
| TensorFlow (Official) | ✨ Deep-learning framework; model zoo, Keras, detailed install guides | ★★★★ | 💰 Free OSS; compute costs vary | 👥 Researchers, production engineers | 🏆 Broad ecosystem (TensorBoard, TFX) |
| PyTorch (Official) | ✨ Research-first DL; interactive installer, LibTorch, nightly builds | ★★★★★ | 💰 Free OSS; compute costs vary | 👥 Researchers, prototypers, production | 🏆 Pythonic API for rapid prototyping |
| Keras (Official) | ✨ High-level multi-backend API (TF/JAX/PyTorch); KerasHub presets | ★★★★ | 💰 Free; great prototyping ROI | 👥 Beginners, educators, fast-iterators | 🏆 Gentle learning curve & portability |
| scikit-learn (Official) | ✨ Classical ML library; consistent estimators API, model selection tools | ★★★★ | 💰 Free; industry-standard value | 👥 Data scientists, ML engineers (classical ML) | 🏆 Stable, well-documented standard |
| JAX (Official Docs) | ✨ Autodiff + XLA; jit/vmap/pmap primitives; CUDA/TPU extras | ★★★★ | 💰 Free; research/perf-focused | 👥 Research engineers, performance-focused devs | 🏆 High-performance composable primitives |
| ONNX Runtime (Microsoft) | ✨ Cross-platform inference; CPU/CUDA/ROCm/DirectML + multi-language | ★★★★ | 💰 Free; production-grade inference | 👥 Prod engineers, cross-platform deployers | 🏆 Broad hardware & language support |
| Hugging Face (Hub & Services) | ✨ Model Hub, Transformers/Diffusers, Spaces, managed endpoints | ★★★★★ | 💰 Hub free; paid PRO/Endpoints for scale | 👥 NLP/ML practitioners, teams | 🏆 Fastest path to SOTA models & tooling |
| NVIDIA NGC Catalog | ✨ GPU-optimized containers, pretrained models, monthly updates | ★★★★ | 💰 Catalog free; some enterprise content paid | 👥 GPU users, enterprises, DGX/cloud admins | 🏆 Performance-tuned GPU stacks |
| Anaconda (Distribution) | ✨ Conda envs, curated repos, cloud notebooks, governance tools | ★★★★ | 💰 Free/paid Enterprise plans | 👥 Data scientists, teams needing env mgmt | 🏆 Simplifies dependency & env management |
| AWS Marketplace (DL AMIs) | ✨ Prebuilt Deep Learning AMIs/containers; one-click EC2 launch | ★★★★ | 💰 Paid listings + EC2 compute fees | 👥 Cloud engineers, enterprises | 🏆 Fast cloud deployment & enterprise procurement |
| Google Colab | ✨ Hosted Jupyter with frameworks; free + Pro tiers; GPUs/TPUs | ★★★★ | 💰 Free tier; Pro/Pro+ paid for more resources | 👥 Students, educators, demos, prototyping | 🏆 Zero-setup notebooks with GPU/TPU access |
| Apple Core ML (Developer) | ✨ On-device inference; coremltools conversion, NE/Apple silicon tuning | ★★★★ | 💰 Free SDK; platform-specific | 👥 iOS/macOS app developers | 🏆 Neural Engine acceleration & privacy |

Your Next Step in the AI Adventure

You've successfully navigated the expansive landscape of modern machine learning frameworks. From the industrial-strength capabilities of TensorFlow to the research-friendly flexibility of PyTorch, and the beginner-welcoming simplicity of scikit-learn, it's clear there is no single "best" tool. The ideal framework is not a universal constant; it's a variable that depends entirely on your project's unique requirements, your team's existing skills, and your long-term goals.

This journey has shown us that the ecosystem is rich and varied. We've seen how Hugging Face democratizes access to state-of-the-art models, how ONNX Runtime champions interoperability, and how platforms like Google Colab and Anaconda eliminate the friction of setup, letting you dive straight into coding. The choice you make is a strategic one, shaping your development velocity, deployment strategy, and ability to innovate.

Distilling the Key Takeaways

As you move forward, keep these core principles in mind. They will act as your compass while navigating the ever-evolving world of AI development.

- Project-Centric Selection: The most critical takeaway is to let your project's needs dictate your choice. Are you building a performance-critical mobile application? Apple's Core ML is engineered for that exact purpose. Is your goal to deploy scalable models in the cloud? AWS Marketplace and NVIDIA NGC provide production-ready solutions.

- The Learning Curve Matters: Don't underestimate the "time to first model." Frameworks like Keras and scikit-learn are designed with a gentle learning curve, making them perfect for beginners or rapid prototyping. In contrast, a lower-level tool like JAX offers immense power and performance but demands a steeper initial investment in learning its unique programming model.

- Community and Ecosystem are Your Lifeline: A framework is more than just its code; it's the community, documentation, and pre-built models that surround it. The vast ecosystems of PyTorch and TensorFlow, amplified by resources like Hugging Face, mean you are rarely starting from scratch. When you encounter a bug at 2 a.m., a vibrant community forum is an invaluable asset.

- Deployment is Not an Afterthought: From the very beginning, consider how your model will reach users. Tools like ONNX Runtime are designed to bridge the gap between training and inference across different hardware. Similarly, frameworks with strong support for edge devices, like TensorFlow Lite or Core ML, are essential if your target is not the cloud.

Actionable Guidance: How to Choose Your Framework

Feeling overwhelmed by the options? Let's break down the decision-making process into a practical, step-by-step guide. Choosing the right machine learning frameworks is a foundational step for any AI project.

- Define Your Primary Use Case: Be specific. Are you doing natural language processing, computer vision, or traditional tabular data analysis? This single question will narrow your options significantly. For instance, Hugging Face is the undisputed leader for NLP tasks, while scikit-learn excels with tabular data.

- Assess Your Team's Expertise: What languages and paradigms is your team comfortable with? If your team is full of Python veterans who love a more object-oriented, "Pythonic" feel, PyTorch will be a natural fit. If they are accustomed to building and debugging static computation graphs for production environments, TensorFlow might be the more familiar path.

- Consider Your Deployment Target: Where will your model live? A web server, a mobile phone, a tiny IoT device, or a browser? Each target has its own constraints. Select a framework with a mature and well-documented deployment path for your chosen environment.

- Start Small with a "Trial Project": Don't commit to a framework for a mission-critical project without testing it first. Pick a small, well-defined problem and try to build a solution with your top two or three candidate frameworks. This hands-on experience is more valuable than reading a hundred articles. Using a platform like Google Colab is perfect for this kind of low-stakes experimentation.

The world of AI is not static; it's a dynamic and thrilling field defined by constant progress. The tools we've discussed are your keys to participating in this revolution. Don't just be a spectator. Pick a framework, find a dataset that excites you, and start building. Your journey from enthusiast to creator begins with that first line of code.
