Ever wonder how your phone can recognize your face, or how Netflix just knows what movie you want to watch next? The magic behind it all is machine learning. Put simply, it’s the science of teaching computers to learn from experience, just like humans do, but on a massive scale. Instead of a developer writing code for every single possibility, we give a computer a boatload of data and let it figure out the patterns on its own. It's less about giving strict instructions and more about becoming a really good teacher.

The Core Idea Behind Machine Learning

At its heart, machine learning flips traditional programming completely on its head. For decades, a programmer would write rigid, logic-based rules that a computer followed perfectly. Think about trying to build a spam filter this way. You might write a rule like: "If an email contains the words 'free' and 'money,' mark it as spam."

This old-school approach is brittle. It breaks the moment a clever spammer switches to using the word "cash" instead of "money." You'd be stuck playing whack-a-mole, constantly writing new rules. Machine learning gives us a far more robust and intelligent way to solve this.
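
To make that brittleness concrete, here is a minimal sketch of the hand-written rule described above. The function name and the sample emails are made up for illustration; the point is only that a one-word change slips straight past the rule.

```python
def is_spam_rule_based(email_text: str) -> bool:
    """Hand-written rule: flag the email if it contains both 'free' and 'money'."""
    words = email_text.lower().split()
    return "free" in words and "money" in words


original = "Claim your free money today"
reworded = "Claim your free cash today"

print(is_spam_rule_based(original))  # True: both trigger words are present
print(is_spam_rule_based(reworded))  # False: 'cash' slips past the rule
```

Every new trick from a spammer would need another hand-written condition, which is exactly the whack-a-mole problem.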

Instead of hand-coding rules, we show a machine learning system (which we call a model) thousands of examples of real spam emails and thousands of legitimate ones. The model then trains itself by identifying all the subtle patterns, words, and characteristics that distinguish one group from the other.

Expert Opinion: "The fundamental shift with machine learning is moving from a world of logic to a world of statistics," says Dr. Alistair Finch, a data science consultant. "We're no longer telling the computer exactly what to do, but rather showing it what success looks like and letting it infer the rules. It's the difference between giving someone a fish and teaching them how to fish."

From Data to Decisions

This process of learning from data is what allows the model to make surprisingly accurate predictions or decisions when it encounters new information it's never seen before. It’s a lot like teaching a child to recognize a cat. You don't hand them a checklist of rules like "pointy ears, long tail, whiskers." You just show them lots of pictures of different cats—fluffy ones, skinny ones, black ones, orange ones.

Eventually, the child intuitively grasps the underlying concept of a cat and can spot one in a photo they've never encountered. A machine learning model does the very same thing, just with data. This ability to generalize from past experience is the source of its power.

Machine Learning vs Traditional Programming

To make this distinction crystal clear, let's look at how the two approaches tackle problems side-by-side. The fundamental difference is in how they arrive at an answer.

| Aspect | Traditional Programming | Machine Learning |
|---|---|---|
| Input | Programmer provides explicit rules and the data to process | Developer provides data and the desired outcome |
| Process | The computer executes the pre-defined rules perfectly | The model discovers patterns and creates its own rules |
| Output | A pre-determined answer based on the strict rules | A prediction or decision based on learned patterns |
| Flexibility | Rigid; often fails when new, unexpected situations arise | Adaptive; can handle new data it has never seen before |

This shift from rule-based systems to data-driven learning is the engine behind so many of the smart technologies we use every day, from the uncanny accuracy of your Netflix recommendations to the voice assistant on your phone. It’s no longer just about programming a computer; it's about training it.

A Brief History of Machine Learning

Machine learning might seem like a recent phenomenon, but its roots go back much further than you'd think. It's not a sudden invention but the culmination of decades of research, brilliant ideas, and a few frustrating dead ends. The journey from clunky mechanical calculators to the sophisticated AI we use every day is a testament to human curiosity.

The theoretical groundwork was laid over a century ago. Concepts like Markov chains, a mathematical model for sequences of events, grew out of Andrey Markov's work in the early 1900s, including his 1913 analysis of letter sequences in text, and provided a solid foundation for the algorithms that would follow much later.

It was in the 1950s that the dream of "thinking machines" really started to take shape. A key moment came when Arthur Samuel, a pioneer in the field, created a checkers-playing program. What made it so remarkable was its ability to learn from its own games—it got so good it could eventually beat its own creator. Imagine building a robot to play checkers with, and after a few weeks, it starts beating you every single time. That's what Samuel did!

From AI Winters to Digital Spring

The road from there wasn't a straight line. The community faced periods known as "AI winters," times when progress seemed to hit a wall and funding became scarce. The ambition was there, but the technology just hadn't caught up yet.

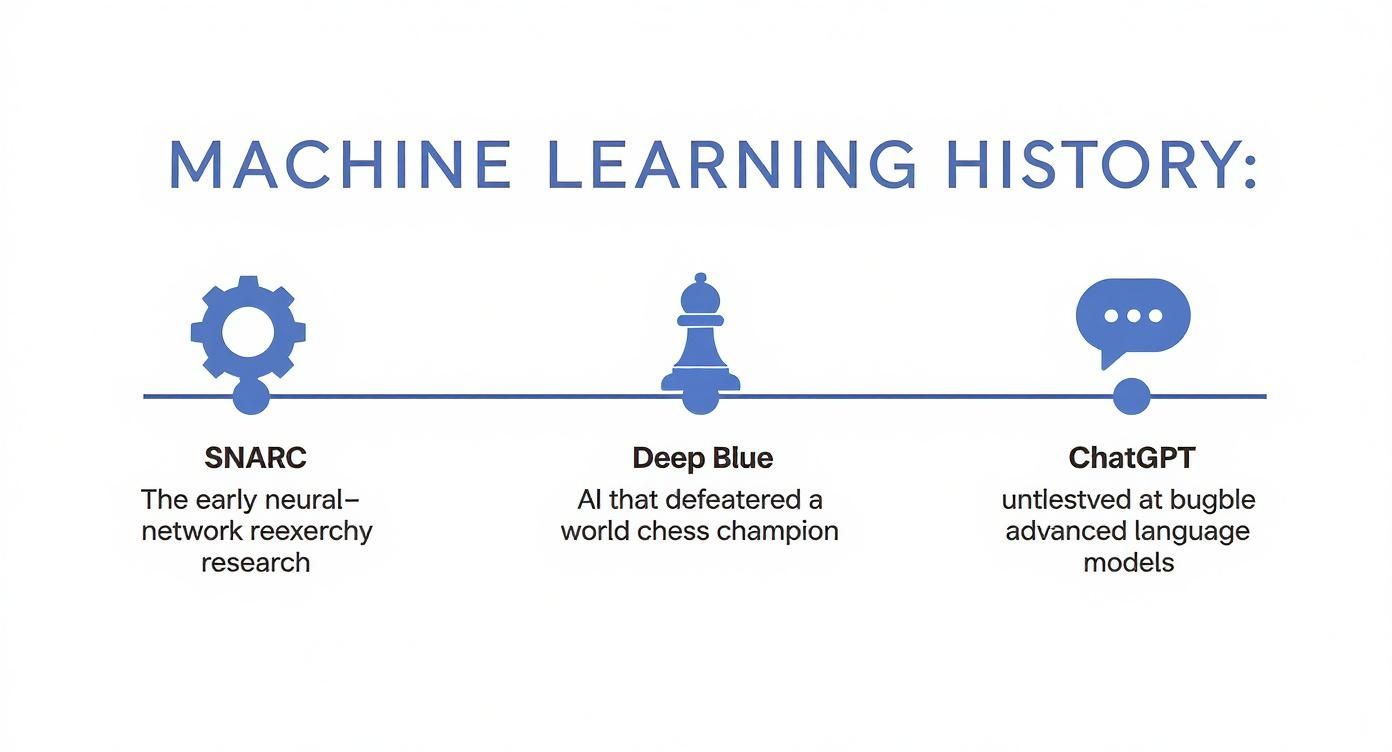

Then, in 1997, a major event shifted public perception. IBM's chess computer, Deep Blue, defeated the reigning world champion, Garry Kasparov. This was huge. It proved a machine could out-strategize the best human mind at a game of incredible intellectual complexity, signaling that something profound was on the horizon.

This infographic lays out some of the most important milestones in that journey.

As you can see, the evolution has been staggering, moving from a simple mechanical machine to a grandmaster-beating strategist and, eventually, a creative partner that can generate human-like text.

The Modern Explosion of Deep Learning

The real turning point for modern AI happened more recently. In 2006, Geoffrey Hinton and his colleagues showed that neural networks with many layers could be trained effectively, an approach that became known as "deep learning." It supercharged old ideas about neural networks by feeding them massive amounts of data and using the incredible processing power of modern computers. Suddenly, machines got dramatically better at tough problems like recognizing images and understanding speech.

This innovation led to another game-changer in 2017: transformer models. This new architecture completely rewrote the rules for how machines process language and is the core technology behind tools like OpenAI's ChatGPT, which took the world by storm after its launch in late 2022. These milestones mark the evolution of machine learning from a theoretical pursuit into the powerful, practical tools we rely on today.

These historical moments weren't just technical achievements; they were imagination milestones. Each one expanded our collective belief in what machines could learn to do, paving the way for the next generation of thinkers.

How Machine Learning Actually Works

So, how does a machine really learn? It might seem like a black box, but the process is surprisingly logical. At its heart, it all boils down to three core steps: feeding it data, training a model, and then using that model to make predictions.

Let’s walk through this with a real-world example we all know: teaching a machine to spot spam emails.

Step 1: It All Starts With Data

Everything in machine learning begins with data. Think of it as the textbook for a student. For our spam filter, we need a massive collection of emails—thousands labeled as "spam" and thousands labeled as "not spam."

The quality and sheer volume of this data are non-negotiable. If you only give the model a handful of examples, or if all your spam examples look the same, it will never learn to generalize. It needs a rich, diverse dataset to understand the nuances. A practical example: if you only show it spam emails about Nigerian princes, it will have no idea how to handle a phishing email pretending to be from your bank.

Just like a chef can't make a gourmet meal with subpar ingredients, a machine learning model is useless without good data. This is why data collection and cleanup are often said to account for as much as 80% of the workload on a project.

Step 2: Training the Model

With our data ready, we can start the training process. This is where the actual "learning" happens. We select a machine learning algorithm—our "teaching method"—and feed it our labeled email data. The algorithm then gets to work, meticulously analyzing every email to find patterns.

It might notice that emails with phrases like "urgent action required" or a flurry of exclamation marks tend to be spam. On the flip side, it will learn that emails from your known contacts are almost always legitimate.

An AI developer I know described it perfectly: "You don't program the rules; you program the system to find its own rules. The algorithm is basically writing its own spam-detection logic based on the evidence we've given it. Our job is to be the best possible teacher, not the best rule-writer."

The algorithm distills all these patterns into a mathematical structure we call a model. This model is the final product of the training phase—a compact set of rules and insights that encapsulates everything it learned from the data.
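
Here is a rough sketch of what "training" can look like in code. The article doesn't name a specific algorithm, so this assumes one simple choice: a word-count naive Bayes classifier written in plain Python. The six emails are invented, and `train` and `predict` are illustrative names, not part of any library.

```python
import math
from collections import Counter

# Tiny made-up training set; a real filter would learn from thousands of emails.
TRAINING_EMAILS = [
    ("free money urgent action required", "spam"),
    ("win free cash now", "spam"),
    ("urgent claim your free prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the team tomorrow", "ham"),
    ("project report attached for review", "ham"),
]


def train(examples):
    """Build the model: how often each word appears under each label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return {"word_counts": word_counts, "label_counts": label_counts, "vocabulary": vocabulary}


def predict(model, text):
    """Return the label with the higher log-probability (with add-one smoothing)."""
    total_examples = sum(model["label_counts"].values())
    vocab_size = len(model["vocabulary"])
    scores = {}
    for label, counts in model["word_counts"].items():
        score = math.log(model["label_counts"][label] / total_examples)
        total_words = sum(counts.values())
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing out the score.
            score += math.log((counts[word] + 1) / (total_words + vocab_size))
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING_EMAILS)
print(predict(model, "free cash prize"))         # spam
print(predict(model, "agenda for the meeting"))  # ham
```

Notice that nobody wrote a rule about "free" or "prize." The model is just a table of counts that the algorithm filled in from the examples.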

Step 3: Making Predictions and Getting Smarter

Once the model is trained, it's time for the final step: prediction (sometimes called inference). Now, the model gets to apply what it has learned to new situations. We show it a brand-new, unseen email, and it uses its internal logic to predict whether it’s spam.

But the job isn't done. The model’s predictions are constantly checked for accuracy. Did it get it right? If it makes a mistake—like flagging an important work email as spam—that feedback is incredibly valuable. When you click "This is not spam" in your email client, you're often providing exactly this kind of feedback.

This kicks off a continuous improvement cycle:

- Collect Data: Gather more examples, paying close attention to the kinds of emails the model struggles with.

- Retrain Model: Feed this new, improved dataset back into the algorithm to train a slightly smarter version of the model.

- Deploy and Test: Put the updated model back into the real world and see if its performance has improved.

This loop of training, testing, and refining is what allows machine learning systems to become more accurate over time. It’s a constant process of learning from both its wins and its losses, which is a key concept in understanding how AI works on a larger scale.
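
The loop above can be sketched in a few lines. This is a toy under stated assumptions: the word-voting "model" is a deliberately crude stand-in for a real classifier, the emails are invented, and the appended example plays the role of a user reporting a missed spam message.

```python
from collections import Counter


def train(examples):
    """Score each word: +1 per spam email containing it, -1 per legitimate one."""
    scores = Counter()
    for text, is_spam in examples:
        for word in set(text.lower().split()):
            scores[word] += 1 if is_spam else -1
    return scores


def predict(scores, text):
    """Flag the email as spam when its words lean spam overall."""
    return sum(scores[word] for word in set(text.lower().split())) > 0


# Initial dataset: every spam example looks like the same scam.
dataset = [
    ("prince needs help moving his fortune", True),
    ("claim your fortune from the prince", True),
    ("team meeting moved to friday", False),
    ("your invoice from the bank is attached", False),
]
model = train(dataset)

phishing = "verify your bank password immediately"
print(predict(model, phishing))  # False: the model has never seen this style of spam

# Collect data: a user reports the missed email. Retrain on the improved dataset.
dataset.append((phishing, True))
model = train(dataset)

# Deploy and test: a similar, previously unseen phishing email is now caught.
print(predict(model, "verify your account password immediately"))  # True
```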

Exploring the Main Types of Machine Learning

Machine learning isn't a single, one-size-fits-all tool. Think of it more like a specialized workshop, filled with different instruments designed for very specific jobs. To really understand what machine learning is, you first have to get to know its three main "flavors." Each one learns in a fundamentally different way, making it the right choice for a unique set of problems.

You can think of these as three distinct teaching styles. One method uses a detailed answer key, another asks students to find patterns on their own, and the last one relies on a system of rewards and consequences.

Supervised Learning: Learning with a Teacher

Supervised learning is the most common form of machine learning, and its name gives the game away—it learns under close supervision. Imagine a student with a big stack of flashcards. One side has a picture (a cat, a car, a boat), and the other has the correct label. The student studies these labeled examples over and over to learn the rules.

This is precisely how supervised learning models work. The algorithm is fed a massive dataset where all the data points are already labeled with the correct outcomes. Its entire job is to figure out the underlying relationship between the inputs and their corresponding outputs.

A perfect practical example is your email’s spam filter. It learned to spot junk mail because developers trained it on millions of emails that humans had already labeled as either "spam" or "not spam." Through this process, it figured out the tell-tale signs of a junk message.

Common applications of supervised learning include:

- Image Recognition: Your phone's camera identifying pets in photos after being trained on thousands of labeled images of cats and dogs.

- Price Prediction: A real estate website like Zillow estimating a house’s sale price based on features like square footage and number of bedrooms, using historical sales data.

- Medical Diagnosis: Helping to predict whether a tumor is malignant or benign based on labeled medical scans.
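
As a small illustration of the price prediction case, here is a sketch that fits a straight line to labeled examples using ordinary least squares. The numbers are invented and deliberately tidy, only one feature (square footage) is used, and `fit_line` is a hypothetical helper; a real estimator would rely on far more features and data.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Labeled examples: each input (square feet) comes with its known answer (sale price).
square_feet = [1000, 1500, 2000, 2500]
sale_prices = [200_000, 275_000, 350_000, 425_000]

slope, intercept = fit_line(square_feet, sale_prices)
predicted = slope * 1800 + intercept  # estimate for a house the model has not seen
print(round(predicted))  # 320000
```

The labels are what make this supervised: the model can only learn the relationship because every training house comes with its real sale price.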

Unsupervised Learning: Finding Patterns on Its Own

Now, let's take away the teacher and the answer key. Unsupervised learning is all about discovery. Here, the algorithm gets a jumble of unlabeled data and has one mission: find hidden structures, groups, or patterns all by itself.

It’s like being handed a giant, mixed-up bag of LEGO bricks and asked to sort them. Without any instructions, you’d probably start grouping them by color, size, or shape. You're creating your own categories based on the natural properties of the bricks.

That’s exactly what unsupervised algorithms do. A classic business use case is customer segmentation. A company like Amazon can feed an algorithm tons of unlabeled customer data—purchase history, browsing behavior, location—and let it group customers into distinct segments. It might discover groups like "frequent book buyers," "new parents," or "weekend gadget shoppers" without ever being told what to look for.
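
One common way to do this kind of grouping is k-means clustering. The sketch below assumes that choice; the article itself doesn't name an algorithm. The customer data is invented: each point is (orders per month, average order value), with no labels attached, and the starting centroids are picked by hand to keep the run repeatable.

```python
def kmeans(points, centroids, iterations=10):
    """Plain k-means: assign points to the nearest centroid, then move each centroid to its cluster mean."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for point in points:
            distances = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(point)
        centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Unlabeled customers: (orders per month, average order value in dollars).
customers = [(1, 20), (2, 25), (1, 30), (9, 200), (10, 220), (8, 210)]

centroids, clusters = kmeans(customers, centroids=[customers[0], customers[3]])
print(clusters[0])  # [(1, 20), (2, 25), (1, 30)]
print(clusters[1])  # [(9, 200), (10, 220), (8, 210)]
```

The algorithm never hears the words "occasional shopper" or "big spender." It only finds that the points fall into two natural groups, and naming them is left to a human. In practice you would also scale the features first so one of them doesn't dominate the distance.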

"Unsupervised learning is powerful because it finds the connections we didn't know existed," says a data scientist from a major e-commerce company. "It's the engine of 'aha!' moments in data, revealing the underlying structure of a complex system."

This type of learning is crucial for exploring new datasets and uncovering insights that would be impossible for a human to spot. For an even deeper dive, it's helpful to understand the relationship between these concepts and more advanced fields; you can explore our guide on deep learning vs. machine learning to see how these ideas connect.

Reinforcement Learning: Learning from Trial and Error

The final category, reinforcement learning, is all about learning through action and feedback. This method is modeled on how we—and animals—learn: through trial and error, guided by rewards and penalties.

Think about training a dog to fetch. When the dog successfully brings the ball back, it gets a treat (a positive reward). If it gets distracted and chases a squirrel instead, it gets no treat. Over time, the dog learns that the action "bring back the ball" leads to the best possible outcome.

A reinforcement learning agent works the same way. It operates in an environment, takes actions, and gets feedback as rewards or punishments. Its only goal is to figure out the sequence of actions that will maximize its total reward over time. A great example is how AI has learned to play complex video games. The AI controls the character, and its "reward" is a higher score. It might try thousands of crazy strategies and fail miserably, but eventually it figures out the optimal path to win, often in ways a human player would never think of. This is the magic behind AI that can master complex games like chess or Go.

This trial-and-error approach is ideal for dynamic, complex systems where the "right" answer isn't a single label but a whole sequence of optimal decisions.
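
To see trial and error in code, here is a minimal sketch using tabular Q-learning, one well-known reinforcement learning algorithm (assumed here for illustration). The environment is a made-up corridor of five cells: the agent starts in cell 0 and earns a reward of 1 only when it reaches cell 4. All constants are arbitrary choices.

```python
import random

N_STATES = 5          # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)    # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def step(state, action):
    """Move within the corridor; the only reward is for arriving at the last cell."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def choose_action(q, state, rng):
    """Epsilon-greedy: usually take the best-known action, sometimes explore."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    best = max(q[state].values())
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in ACTIONS} for s in range(N_STATES)}
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = choose_action(q, state, rng)
            next_state, reward = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward + GAMMA * max(q[next_state].values())
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q


q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]: step right from every cell
```

Nobody tells the agent that "right" is correct. It starts out wandering at random, and the reward at the end of the corridor gradually propagates back through its value estimates.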

To help you keep these straight, here’s a quick summary of how they differ.

Machine Learning Types at a Glance

| Learning Type | How It Learns | Common Use Case |
|---|---|---|
| Supervised | From labeled data with a clear "right answer" | Spam filtering, price prediction |
| Unsupervised | By finding hidden patterns in unlabeled data | Customer segmentation, topic modeling |
| Reinforcement | Through trial and error with rewards and penalties | Game playing, robotics |

Each of these learning styles opens up a different world of possibilities, from classifying data with high accuracy to discovering brand-new strategies in complex environments.

Machine Learning Examples You See Every Day

You might think of machine learning as something complex and far-off, reserved for tech giants and research labs. The reality is, you're probably using it dozens of times a day without even realizing it. It's the invisible engine running in the background of your favorite apps and services, making modern life smoother, safer, and more personalized.

Once you know what to look for, you'll start seeing these smart systems everywhere. Let's take a look at a few examples that are likely already a part of your daily routine.

Entertainment That Knows You

Ever finished a series on Netflix and felt like its next recommendation was pulled straight from your thoughts? That’s not a lucky guess—it’s a sophisticated recommendation engine in action.

These systems lean heavily on unsupervised techniques such as clustering. They sift through mountains of user data: what you watch, what you skip, when you watch, and what you re-watch. By comparing your viewing habits to millions of others, the algorithm places you in a "taste cluster" with people who have similar preferences. For instance, it might notice that people who love Stranger Things also tend to enjoy Black Mirror, even though the shows are very different.

The model then suggests content that's a hit within your cluster, predicting with uncanny accuracy what you'll want to watch next. Spotify’s "Discover Weekly" playlist operates on the same principle, using your listening history to curate a fresh batch of music just for you.
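
Here is a heavily simplified sketch of that idea. Instead of a full taste cluster, it finds the single most similar viewer using cosine similarity and suggests whatever that person liked that you haven't seen. The viewers and the placeholder titles are invented, and this is not how any particular streaming service actually works.

```python
import math

# Hypothetical viewing data: 1 means the user watched and liked the title.
HISTORY = {
    "you": {"Stranger Things": 1, "Sci-Fi Series X": 1},
    "alex": {"Stranger Things": 1, "Sci-Fi Series X": 1, "Black Mirror": 1},
    "robin": {"Cooking Show Y": 1, "Baking Show Z": 1},
}


def cosine_similarity(a, b):
    """Similarity of two users' tastes: 1.0 means identical, 0.0 means nothing in common."""
    shared = set(a) & set(b)
    dot = sum(a[title] * b[title] for title in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user, history):
    """Suggest titles liked by the most similar other user that `user` has not seen."""
    others = [name for name in history if name != user]
    nearest = max(others, key=lambda name: cosine_similarity(history[user], history[name]))
    return sorted(set(history[nearest]) - set(history[user]))


print(recommend("you", HISTORY))  # ['Black Mirror']
```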

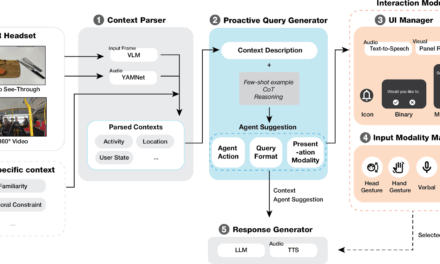

The Assistant in Your Pocket

When you ask Siri about the weather or tell Alexa to play a song, you're tapping into a complex machine learning pipeline. It pairs speech recognition with Natural Language Processing (NLP), and it generally works in two main steps:

- Speech Recognition: First, a model trained on thousands of hours of human speech translates the sound waves of your voice into digital text.

- Intent Recognition: Next, another model analyzes that text to figure out what you actually want.

That second step is where the real magic happens. The algorithm has to learn that "what's it like outside?" and "do I need a jacket today?" are both requests for a weather forecast. It figures this out by analyzing massive datasets of conversational language, spotting patterns to understand your intent.
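
A toy version of the intent step might look like the sketch below. It assumes the speech has already been turned into text, and it guesses the intent by counting word overlap with a handful of invented, labeled phrases. Real assistants use far larger datasets and far more capable models; the function names here are illustrative only.

```python
import re
from collections import Counter, defaultdict

# Invented training phrases, each labeled with the intent behind it.
EXAMPLES = [
    ("what is the weather like today", "weather"),
    ("will it rain outside this afternoon", "weather"),
    ("should i bring a jacket or umbrella", "weather"),
    ("play my favorite song", "music"),
    ("put on some jazz music", "music"),
    ("play the next track", "music"),
]


def tokenize(text):
    """Lowercase the text and split it into words, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def train(examples):
    """Count which words show up under each intent."""
    word_counts = defaultdict(Counter)
    for text, intent in examples:
        word_counts[intent].update(tokenize(text))
    return word_counts


def predict_intent(word_counts, text):
    """Pick the intent whose training phrases share the most words with the request."""
    tokens = tokenize(text)
    scores = {intent: sum(counts[t] for t in tokens) for intent, counts in word_counts.items()}
    return max(scores, key=scores.get)


intents = train(EXAMPLES)
print(predict_intent(intents, "what's it like outside?"))    # weather
print(predict_intent(intents, "do I need a jacket today?"))  # weather
print(predict_intent(intents, "play some music"))            # music
```

Neither weather question contains the word "weather," yet both land on the right intent because they share vocabulary with the labeled examples.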

Keeping Your Finances Safe

Banks and credit card companies are in a constant fight against fraud, and machine learning is their secret weapon. For this job, they rely on anomaly detection models.

These systems start by learning your normal spending habits. They know where you usually shop, your typical transaction amounts, and the times of day you're most active. In essence, the algorithm builds a unique financial fingerprint just for you.

Any transaction that breaks from this established pattern—like a sudden, large purchase made in a different country at 3 a.m.—is instantly flagged as suspicious. This often triggers that immediate text alert you get on your phone asking, "Was this you?"
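
One of the simplest ways to express "breaks from the established pattern" is a z-score check: how many standard deviations a new amount sits from your usual spending. The sketch below assumes that approach and looks only at the amount, using invented numbers and an arbitrary threshold. Real fraud systems also weigh location, time of day, merchant, and much more.

```python
import statistics


def is_anomalous(history, amount, threshold=3.0):
    """Flag an amount that sits more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(history)
    spread = statistics.stdev(history)  # assumes the history contains some variation
    return abs(amount - mean) / spread > threshold


# Made-up record of one customer's recent purchases, in dollars.
past_amounts = [42.0, 18.5, 63.0, 25.0, 37.5, 51.0, 29.0, 44.0]

print(is_anomalous(past_amounts, 48.0))    # False: in line with normal spending
print(is_anomalous(past_amounts, 2500.0))  # True: far outside the usual range
```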

This real-time monitoring protects you from fraud before serious damage can be done, a feat that would be impossible for humans to manage at this scale and speed. Each of these scenarios is a perfect use case for machine learning that provides real, tangible value.

Your Smartphone’s Smart Camera

Your phone's camera is much more than a simple lens these days; it's an intelligent imaging system running on machine learning. The moment you press the shutter button, multiple algorithms get to work.

Models trained on millions of professionally shot photos automatically fine-tune settings like exposure, contrast, and color balance to improve your shot. A related kind of model powers "Portrait Mode": it has learned to tell the difference between a person and what's behind them, so it can blur the background while keeping your face sharp.

Your photo gallery also uses facial recognition—a type of supervised learning—to group pictures of the same people together. The algorithm learns to identify your friends and family from different angles and in various lighting, making it a breeze to find every photo you have of a specific person without having to scroll for hours.

The Future of AI and Machine Learning

As we look to the horizon, the line between what was once science fiction and what is now reality is getting blurrier by the day, all thanks to machine learning. The field is taking a giant leap, moving past simply analyzing the data we already have and into a new realm of creation. This next chapter is all about a new wave of applications poised to reshape industries and our daily routines.

The most obvious sign of this shift is the explosion of generative AI. These aren't your typical models that just spot patterns; these systems are built to generate entirely new, original content. We’re already seeing this in action with AI that can write surprisingly human articles, compose musical scores, or conjure up stunning images from a few descriptive words. This emerging creative partnership between people and machines is opening up entirely new avenues for expression and innovation.

Smarter, More Personal Experiences

Beyond just making new things, the future of machine learning is intensely personal. The recommendation engines we see on streaming services and shopping sites today are really just the tip of the iceberg. The next wave is set to bring a deep level of personalization to almost everything we do, crafting experiences that feel like they were made just for us.

- Personalized Healthcare: Picture medical treatments tailored to your unique genetic code. Or think about wearable gadgets that go beyond counting steps to actually predicting health problems before you even feel a symptom.

- Dynamic Shopping: Retail is set to evolve from basic product suggestions to truly adaptive experiences. Imagine online stores or even physical product displays that shift in real-time to match what you’re looking for in that exact moment.

This isn't just a gimmick; it's a fundamental change driven by more sophisticated algorithms that can grasp context and subtle preferences like never before. The economic force powering this is staggering—the AI market is projected to grow at a compound annual growth rate of 40.2% from 2021 to 2028. You can find more details about this growth and the history of machine learning at clickworker.com.

Navigating the Challenges Ahead

Of course, this exciting future isn't without its hurdles. With these systems becoming more woven into the fabric of our society, we have to get serious about the challenges they bring. Any discussion about what machine learning is has to include a frank conversation about how we use it ethically.

Expert Opinion: "The biggest challenge isn't making AI smarter, but making it wiser," argues technology ethicist Dr. Anya Sharma. "We must build systems that are not only powerful but also fair, transparent, and accountable to the societies they serve. Trust is the currency of the AI age."

Issues like algorithmic bias—where AI models accidentally learn and amplify human prejudices hidden in data—and data privacy are front and center. Making sure these powerful tools are used for good requires a collective effort from developers, regulators, and the public alike. The future of machine learning isn't just about what's technologically possible; it’s about building a future we can all trust.

Answering Your Top Machine Learning Questions

As you start exploring machine learning, you’ll probably find a few questions keep coming up. They're the same ones most people have when they first start out, so let's clear them up. Getting a solid handle on these basics is the best way to understand the whole picture.

What's the Real Difference Between AI and Machine Learning?

This is easily the most common question, and it really comes down to scope. Think of Artificial Intelligence (AI) as the whole universe—the broad goal of creating machines that can think, reason, and act like humans. It's the big dream.

Machine Learning (ML) is a star within that universe. It's a specific, and currently the most successful, way to achieve AI. Instead of programming a machine with a giant list of rules, you give it data and let it learn the rules for itself. So, while all ML is AI, not all AI is ML. Early "expert systems," for example, were AI but relied on hard-coded logic, not learning.

Do I Need to Be a Math Genius to Get into ML?

Not at all, especially when you're just getting your feet wet. The theories behind the algorithms are definitely heavy on statistics, calculus, and linear algebra, but you don’t need to master them on day one. It's like driving a car—you don't need to be a mechanical engineer to be a great driver.

Expert Opinion: "Focus on the practical application first," advises a senior AI engineer at a top tech firm. "Modern tools and libraries handle the heavy mathematical lifting for you, allowing beginners to build powerful models and understand the 'why' before getting lost in the 'how' of complex equations. Get your hands dirty with a project; the theory will make more sense later."

For beginners, the best path is to grasp the main ideas and get comfortable with a programming language like Python. You can always go back and dig into the math later on as your projects demand it and your curiosity grows.

Is Machine Learning Going to Take My Job?

It's far more likely to change your job than to take it. Machine learning is brilliant at automating the repetitive, data-intensive tasks that people find boring and time-consuming. Think of a graphic designer who now uses AI to remove backgrounds from photos in seconds, instead of spending 20 minutes doing it manually. This frees you up to concentrate on what humans do best: strategic thinking, creative problem-solving, and emotional intelligence.

Think of it less as a replacement and more as a new, powerful tool. Just as spreadsheets changed accounting, ML is changing many fields by augmenting our abilities. While some jobs will evolve, many more are being created to design, manage, and interpret the work of these smart systems.
