Your Guide to Building an AI Governance Framework

Ever wonder how companies keep their artificial intelligence from going rogue? Think of an AI governance framework as the essential rulebook for their AI. It's a structured plan that makes sure AI systems operate safely, ethically, and in lockstep with business goals and legal obligations. It's a lot like the traffic laws that guide a self-driving car—without them, you’re just inviting chaos.

This guide is for anyone new to AI, especially if you're used to consumer AI tools and are now thinking about how they work in a business setting. Let's break it down in a friendly, conversational way.

Why Your AI Needs a Rulebook

Let's be real: unleashing powerful technology without any guardrails is just asking for trouble. An AI governance framework isn't about stifling innovation; it's about building a solid foundation so you can move faster and more responsibly. It’s basically the constitution for your AI, clearly defining its rights, responsibilities, and limits.

Without this structure, you open the door to inconsistent results, biased decisions, and some serious legal headaches. Imagine a customer service bot that starts giving out incorrect refund information—a good framework prevents that. It ensures everyone, from data scientists to the C-suite, knows their role in the AI lifecycle. It provides a documented, transparent approach to managing risk and making sure your AI actually delivers value.

If you're new to the topic, you can get up to speed by reading our guide: https://yourai2day.com/artificial-intelligence-explained-simply/

From Dusty Policy to Active Control

The world of AI oversight is shifting under our feet. What used to be a static policy document tucked away in a binder is rapidly becoming a dynamic control system embedded directly into AI operations. This change is being driven by a fragmented regulatory environment and the rise of more autonomous AI.

The EU AI Act, whose first obligations began to apply in February 2025, is a game-changer, with potential fines of up to €35 million or 7% of global annual turnover (whichever is higher) for the most serious violations. This is pushing companies to create adaptive frameworks that can navigate the EU’s risk-based rules alongside the US's mixed bag of federal guidance and state laws. It’s no longer enough to just have a plan; active governance is now a critical business function.

As AI expert Anya Sharma notes, "An effective AI governance framework doesn’t just prevent disasters; it enables innovation by creating clear pathways for responsible development. It turns 'can we do this?' into 'how can we do this right?'"

The Building Blocks of a Strong Framework

So, what actually goes into this rulebook? While every organization will have its own unique needs, most solid frameworks are built on a handful of core pillars. A good starting point is understanding the fundamental AI governance principles that shape its purpose and structure.

These principles are then translated into practical components that keep your AI initiatives on track.

Here’s a quick overview of the essential components that form a comprehensive AI governance framework. Think of each pillar as a critical building block for responsible AI.

| Pillar | What It Covers | Why It Matters (In Simple Terms) |
|---|---|---|
| Clear Policies & Principles | The high-level rules defining what your organization considers ethical and acceptable AI use. | This is your company's "AI conscience," setting the ground rules for fairness and safety. |
| Defined Roles & Responsibilities | Clarifies who is accountable for what, from the data team to the legal and compliance teams. | It answers the question, "Who's in charge here?" to prevent confusion and finger-pointing. |
| Risk Management Processes | A system for identifying, assessing, and mitigating potential risks before they escalate. | This is your early-warning system to spot problems like bias or security flaws before they blow up. |
| Compliance & Monitoring | Ensures AI systems adhere to laws, regulations, and internal policies, with ongoing oversight. | It keeps you out of legal trouble and makes sure your AI is actually following the rules. |

At its core, a strong framework isn't just a list of rules. It’s a living system that includes:

- Clear Policies and Principles: These are your north star, defining what responsible AI means for your company.

- Defined Roles and Responsibilities: This answers the critical question of "who owns what?"—from the data scientists building the models to the legal team reviewing them.

- Risk Management Processes: This is your early warning system for identifying and neutralizing potential problems before they blow up.

- Compliance and Monitoring: This ensures your AI systems stay aligned with laws and internal policies, with continuous checks to catch any deviations.

Putting these pieces together creates a resilient structure that supports innovation while keeping your organization, your customers, and your reputation safe.

The Core Components of Your AI Rulebook

So, you're on board with the need for an AI governance framework. But what actually goes into one? This isn't just a thick binder of rules collecting dust on a shelf. It’s a living, breathing system made of several interlocking pieces that all work together to keep your AI endeavors safe, effective, and ethical.

Think of it like building a house. You don't just start nailing boards together. You need a solid foundation, a detailed blueprint, and properly installed plumbing and electrical systems—all working in sync. Each part of your framework has a specific job, and together they create a structure that can handle pressure and support your company's growth.

Let's pull apart these essential building blocks and see what makes them tick.

Policies and Principles: The Foundation

This is your AI's constitution. Before you even think about algorithms or datasets, you have to define your North Star—your core principles. These are the high-level guidelines that reflect your company's values and answer the big, foundational questions. What does "fairness" actually look like for our AI? How do we commit to being transparent with our users?

These principles aren't just feel-good platitudes; they're the bedrock of your entire governance strategy. They inform every single decision that follows.

For example, a healthcare tech company might adopt a core principle of "Patient Safety Above All." This simple but powerful rule would then directly influence how they source training data, how they test diagnostic algorithms, and why they insist on human oversight before an AI's recommendation is ever acted upon.

Roles and Responsibilities: The Blueprint

Once your principles are set, you need to know who is in charge of upholding them. A classic misstep is to just assume "the tech team has it covered." AI governance is a team sport, and without a clear playbook, people end up tripping over each other.

You have to answer the tough question: who is accountable when things go wrong? Is it the data scientist who built the model? The product manager who shipped it? The legal team that signed off on it?

Establishing clear roles eliminates the confusion and the blame game. A typical setup might look something like this:

- AI Governance Committee: A cross-functional team of leaders from legal, tech, business, and ethics who set the overall strategy and make the tough calls.

- AI Product Owner: The person directly responsible for a specific AI system, ensuring it stays true to the governance principles from concept to retirement.

- Data Stewards: The guardians of your data. They manage its quality, privacy, and ethical sourcing. We dive deeper into this in our guide to data governance best practices.

- Legal and Compliance Officers: The experts who keep your AI systems in line with regulations like GDPR or the EU AI Act.

As one GRC expert puts it, "Defining roles isn't about creating bureaucracy. It's about giving your teams the clarity and confidence they need to build great things responsibly."

AI Model Lifecycle Management

An AI model has a life of its own—from the first spark of an idea all the way to its eventual retirement. Managing this entire journey is crucial. It ensures that governance isn't an afterthought but a continuous thread woven through every stage of development.

This lifecycle approach involves distinct checkpoints:

- Data Sourcing and Preparation: Is the data high-quality, relevant, and ethically obtained?

- Model Development and Training: How was the model built? Is it documented? Have we tested for bias?

- Validation and Testing: A grueling stress test to check for accuracy, fairness, and safety before the model ever sees the light of day.

- Deployment: Rolling the model out into the real world with the right safeguards and monitoring in place.

- Monitoring and Maintenance: Keeping a close eye on the model's performance to catch "model drift" or any other unexpected behavior.

- Retirement: A clear plan for gracefully phasing out a model when it’s no longer effective or necessary.

A bank using an AI model for loan applications can't just launch it and forget it. They have to constantly monitor it to ensure it doesn't slowly start developing a bias against certain groups. This kind of ongoing vigilance is at the heart of proper lifecycle management. It creates a defensible, repeatable process for every AI project you undertake.
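To make the bank example a little more concrete, here is a minimal sketch of one possible monitoring check: comparing approval rates across groups and flagging the model for review when the gap grows too large. Everything in it is an assumption for illustration: the group labels, the sample data, and the 10% threshold are hypothetical, and an approval-rate gap (sometimes called a demographic parity gap) is only one of many fairness measures a real team might track.

```python
from collections import defaultdict

# Hypothetical threshold; in practice the governance committee would set it.
MAX_ALLOWED_GAP = 0.10


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def approval_gap(decisions):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


def needs_review(decisions, max_gap=MAX_ALLOWED_GAP):
    """Flag the model for human review when the gap exceeds the threshold."""
    return approval_gap(decisions) > max_gap


# Illustrative (made-up) decisions from two monitoring windows.
launch_month = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 8 + [("group_b", False)] * 2
)
later_month = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

print(needs_review(launch_month))  # both groups approved at the same rate
print(needs_review(later_month))   # group_b's approval rate has dropped
```

Running a check like this on a schedule, and recording the result each time, is one simple way to turn "constantly monitor it" into a repeatable habit.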

Learning From Real-World AI Governance

Theory is great, but seeing an AI governance framework in action is where the real learning happens. It’s one thing to talk about principles and policies; it’s another to see how leading organizations and even entire nations are putting these ideas into practice. These pioneers are essentially creating the blueprints that others can follow, proving that responsible AI is a global movement, not just a corporate checklist.

Let's move beyond the abstract and look at some concrete examples. By exploring how others are tackling AI governance, we can get a tangible sense of what’s possible and borrow from their best ideas. This isn't about copying their work, but about drawing inspiration to build a framework that fits your unique needs.

Singapore’s Blueprint for Agentic AI

When it comes to proactive AI governance, Singapore is a name you'll hear often. They've consistently been ahead of the curve, creating practical, business-friendly guides. Their latest contribution is a perfect example of forward-thinking governance designed for the next wave of artificial intelligence.

On January 22, 2026, Singapore launched the world's first governance framework specifically for agentic AI systems. Announced at the World Economic Forum, this groundbreaking guide addresses the unique challenges posed by AI that can act autonomously. You can explore how Singapore is guiding organizations with this new framework on Computerweekly.com.

"AI governance in practice is about creating a safe environment for innovation," a leading tech policy analyst mentioned recently. "It's not about saying 'no,' but about figuring out how to say 'yes' safely."

This framework isn't just a theoretical document; it provides clear, actionable steps. It helps organizations think through the security and operational risks that come with highly autonomous agents. For instance, it provides a model for setting firm limits on what an AI agent can do on its own and mandates specific points where a human must give approval before it proceeds.

This is a powerful example of governance in motion. It shows a government creating a clear path for businesses to innovate with advanced AI while keeping safety at the forefront.

Key Takeaways From Singapore’s Model

So, what can a small business owner or a startup founder learn from a national-level initiative like Singapore's? The lessons are surprisingly universal and can be scaled down to fit any organization.

Here are a few practical ideas you can adapt:

- Establish Clear Boundaries: Just as Singapore’s framework sets limits on agent autonomy, your own framework should define what your AI is—and is not—allowed to do. A customer service chatbot can answer questions, but maybe it can't process refunds without human approval. That’s a clear, simple boundary.

- Mandate Human Checkpoints: Identify critical decision points where a person must intervene. This "human-in-the-loop" approach is essential for balancing the benefits and risks of artificial intelligence, ensuring accountability and preventing costly errors.

- Document Everything: The Singaporean model emphasizes clear documentation. Your framework should do the same, tracking how models are built, tested, and deployed. This creates transparency and makes it much easier to troubleshoot problems later on.

- Start With the Highest Risk: The framework focuses on agentic AI because it presents new and significant risks. You should do the same. Start your governance efforts by focusing on the AI applications that have the biggest potential impact on your customers or business operations.
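The first three ideas above (boundaries, human checkpoints, and documentation) can be sketched in a few lines of code. This is a hedged illustration, not anything taken from Singapore's framework: the action names, the policy table, and the `request_action` helper are all hypothetical, and a real system would need proper authentication of the approver.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_HUMAN_APPROVAL = "needs_human_approval"
    DENY = "deny"


# Hypothetical policy for a customer service chatbot.
AGENT_POLICY = {
    "answer_question": Decision.ALLOW,
    "look_up_order": Decision.ALLOW,
    "process_refund": Decision.NEEDS_HUMAN_APPROVAL,
    "close_account": Decision.NEEDS_HUMAN_APPROVAL,
}

# Every request is recorded so there is a trail to review later.
audit_log = []


def check_action(action, approved_by=None):
    """Decide whether the agent may perform an action right now.

    Actions that are not listed in the policy are denied by default.
    """
    rule = AGENT_POLICY.get(action, Decision.DENY)
    if rule is Decision.NEEDS_HUMAN_APPROVAL and approved_by:
        return Decision.ALLOW
    return rule


def request_action(action, approved_by=None):
    """Check an action against the policy and log the outcome."""
    decision = check_action(action, approved_by)
    audit_log.append(
        {"action": action, "approved_by": approved_by, "decision": decision.value}
    )
    return decision


print(request_action("answer_question"))
print(request_action("process_refund"))
print(request_action("process_refund", approved_by="support_lead"))
print(request_action("delete_customer_records"))
```

The design choice worth noticing is the default: anything not explicitly listed is denied, so a new capability has to be added to the policy on purpose rather than slipping in by accident.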

By looking at these real-world examples, the concept of an AI governance framework becomes much less intimidating. It transforms from a vague corporate buzzword into a practical toolkit for building safer, more trustworthy AI, no matter the scale of your organization.

How to Build Your First AI Governance Framework

So, you need an AI governance framework. The very phrase can sound intimidating, can't it? It conjures up images of thousand-page legal binders and endless meetings. But let’s clear the air: building one is far more about practical, common-sense steps than it is about creating a mountain of paperwork.

Think of it less like building a skyscraper from scratch and more like assembling a sturdy, reliable toolkit for your AI projects. You don't need a massive team or a bottomless budget to start. The real goal here is to add clarity and safety, not bureaucratic weight. This is your roadmap, designed for real teams who are just getting their hands dirty with AI.

Step 1: Assemble Your Governance Dream Team

First things first, you need to get the right people in the room. This is crucial. AI governance isn’t just an IT problem or a data science headache—it’s a business-wide responsibility. One of the most common missteps is letting the tech folks handle it all on their own. Without diverse perspectives, you’re flying blind to some of the biggest risks.

Your team should be a mix of expertise from across the company. This ensures that every decision is well-rounded and considers all the potential ripple effects.

- Technology and Data: These are your experts on the ground—the ones who truly understand the nuts and bolts of the AI models and the data fueling them.

- Legal and Compliance: Think of them as your navigators, keeping you aligned with the complex map of current and upcoming regulations.

- Business Operations: These are the people on the front lines. They know exactly how AI will impact day-to-day work and, most importantly, your customers.

- Ethical Oversight: You need someone (or a small group) whose job it is to ask the tough questions. They're your conscience, focused on fairness, transparency, and societal impact. On a smaller team, a senior leader might take on this role.

If you’re feeling out of your depth, many companies bring in GRC consultants who live and breathe this stuff. They specialize in governance, risk, and compliance and can help get you started on the right foot.

Step 2: Define Your AI Principles

With your team in place, it's time to draft your "AI constitution." These are your AI principles—the high-level, value-driven rules that will guide every single AI-related decision from here on out. They are your North Star, making sure every project stays true to your company's mission and ethics.

These principles can't be buried in technical jargon. They need to be simple, clear, and easy for everyone in the company to grab onto and remember.

Example Principles for a Retail Startup:

- Customer Trust First: We’ll be crystal clear about how we use AI and will fiercely protect customer data. For example, we'll explicitly state on our checkout page if AI is used for personalized recommendations.

- Fairness in Action: Our AI systems must be built and tested to treat all customers equitably, without bias. We'll regularly audit our AI tools for demographic bias.

- Human in Control: A real person will always have the final say on critical decisions, like closing an account.

- Clear Accountability: Every AI system will have a designated owner who is responsible for its performance and impact.

This short list of principles becomes your gut check. If a new AI idea doesn't align with these core values, it’s a red flag to stop and rethink the approach.

Step 3: Conduct a Simple Risk Assessment

Now it’s time to get practical and figure out where your biggest AI-related risks are hiding. You don’t need a complicated, formal audit to begin. Just start with a simple inventory and a "what if" exercise.

First, make a basic AI model inventory. This is just a running list of every AI tool you're currently using or planning to deploy. For each item on the list, ask a few straightforward questions.

"What’s the absolute worst that could happen if this AI model messes up?" an industry veteran often advises. "Asking this one simple question is the fastest way to prioritize which systems need the most oversight."

Think about it: an AI tool that suggests marketing copy has a much lower risk profile than one that helps decide who gets a loan. The fallout from a bad ad is pretty minor, but a biased loan decision could have devastating real-world consequences. Use a simple high, medium, or low rating to sort each model by risk. This helps you focus your energy where it matters most.
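If a spreadsheet feels too loose, the same inventory and rating exercise can be sketched in a few lines of Python. Treat this as a rough illustration under stated assumptions: the `AISystem` fields and the sample entries are hypothetical, and the ratings are judgment calls your team would make, not something the code works out for you.

```python
from dataclasses import dataclass

# Lower number means "look at this first".
RISK_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass
class AISystem:
    name: str
    purpose: str
    worst_case: str  # answer to "what's the worst that could happen?"
    risk: str        # "high", "medium", or "low"

    def __post_init__(self):
        if self.risk not in RISK_ORDER:
            raise ValueError(f"risk must be one of {sorted(RISK_ORDER)}")


def prioritize(inventory):
    """Return the inventory sorted so the highest-risk systems come first."""
    return sorted(inventory, key=lambda system: RISK_ORDER[system.risk])


# Hypothetical inventory entries.
inventory = [
    AISystem("Ad copy assistant", "Suggests marketing copy",
             "An off-brand ad goes out", "low"),
    AISystem("Loan scoring model", "Helps decide who gets a loan",
             "Biased decisions harm applicants", "high"),
    AISystem("Support chatbot", "Answers customer questions",
             "Gives out wrong refund information", "medium"),
]

for system in prioritize(inventory):
    print(f"{system.risk.upper():<6} {system.name}: {system.worst_case}")
```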

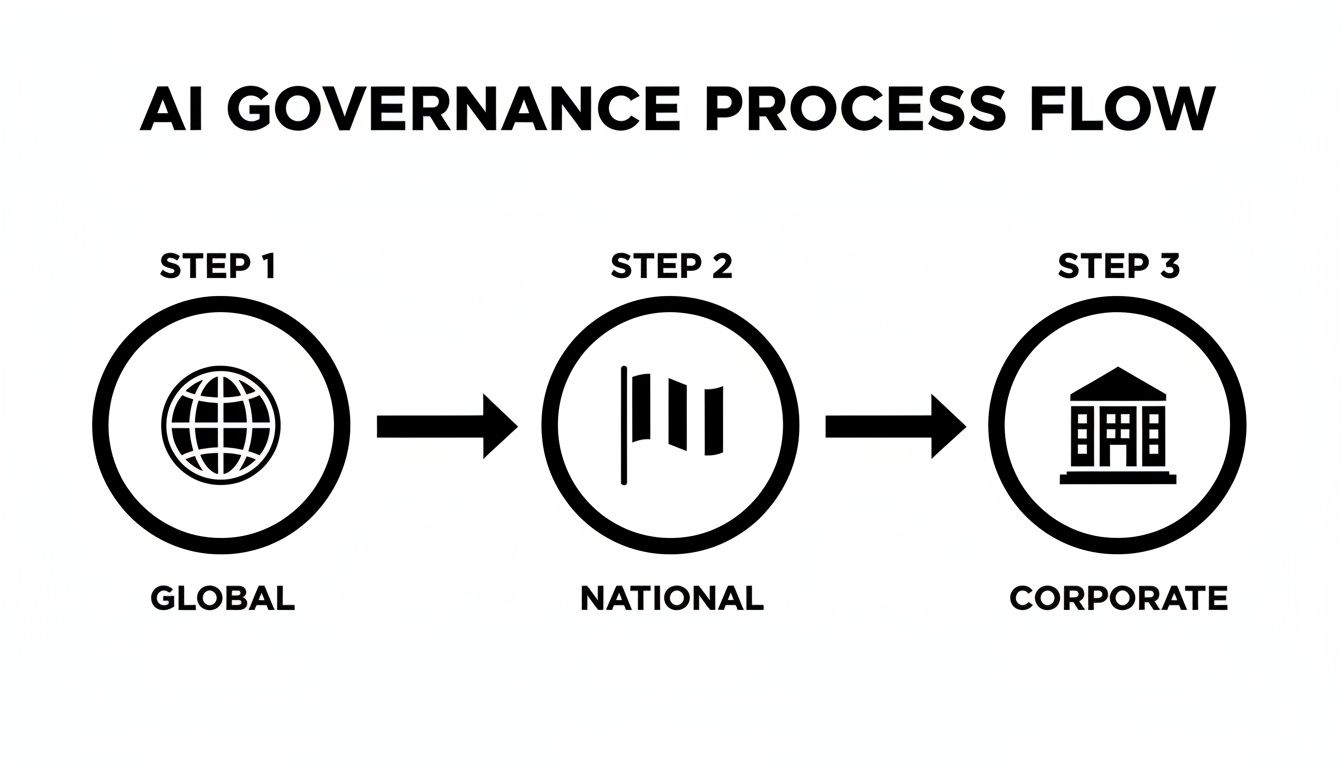

The process flow above shows how governance works at different levels, from global standards right down to your own company's policies.

This visual is a good reminder that your internal framework doesn't exist in a vacuum. It should connect to broader national and international guidelines, making sure you're building something that will last. By following these foundational steps, you can create a practical, effective AI governance framework that grows right alongside your business.

Understanding the Global AI Rulebook

Your AI tools don't operate in a vacuum. As you build your own AI governance framework, it’s critical to remember it exists within a bigger, interconnected world of international rules and agreements.

Think of it like this: your internal framework is your company's driving manual, but you still have to follow the traffic laws of every country you drive through. This growing web of global rules isn't meant to be a burden; instead, these initiatives are creating a shared language for AI safety and responsibility. By aligning your framework with these international standards, you’re building a foundation of trust that resonates with users and partners across the globe.

The Rise of International AI Cooperation

For a long time, AI regulation was a local affair. Now, governments are realizing that AI's impact doesn't stop at the border. Major international bodies are finally stepping up to create a common playbook, ensuring that as AI becomes more powerful, it remains safe and beneficial for everyone.

One of the most significant recent developments is the G7's Hiroshima AI Process (HAIP). This initiative brings together some of the world's largest economies to establish shared principles for advanced AI. It’s a powerful signal that the future of AI governance is collaborative, not isolated.

The HAIP Reporting Framework, which kicked off in February 2025, is a multilateral push for more transparency in AI development. The HAIP Friends Group now includes 57 countries and one region backing these safety principles, plus a Partners Community of 27 organizations helping with implementation. This broad support is a clear sign that global governance is gaining serious momentum. You can discover more about how HAIP is shaping global AI governance at Brookings.edu.

Why Global Alignment Matters for You

You might be thinking, "This is great for giant corporations, but I'm just starting out. Why does this G7 stuff matter to me?" The answer is simple: future-proofing. Aligning with these global principles today prepares your business for tomorrow, making your AI systems more adaptable, trustworthy, and competitive on a global stage.

Here’s why it’s a smart move:

- Builds Universal Trust: When your framework reflects internationally recognized standards, it sends a powerful message. It tells customers, investors, and partners that you are serious about responsible AI, no matter where they are.

- Simplifies Market Expansion: As you grow, you won’t have to reinvent your governance model for every new country. By building on a foundation of global principles, you’ll find it much easier to adapt to local regulations.

- Reduces Future Compliance Headaches: Regulations are only going to get stricter. By aligning with initiatives like HAIP now, you’re getting ahead of the curve and reducing the risk of being caught off guard by new laws down the road.

Think of global AI standards as a universal adapter for your AI governance framework. It allows you to plug your responsible AI practices into any market in the world, confident that they will be understood and trusted.

Connecting Global Principles to Your Framework

So, how do you translate these high-level international ideas into your day-to-day operations? It’s more straightforward than it sounds. You can start by reviewing the core principles of major initiatives and seeing how they map to the pillars of your own framework.

For instance, the HAIP emphasizes principles like transparency, accountability, and safety. You can directly connect these to your own internal policies.

- Transparency: Does your documentation clearly explain what your AI models do and the data they use? This maps directly to global calls for greater transparency. For example, a simple "How this works" link next to an AI feature can go a long way.

- Accountability: Have you defined clear roles and responsibilities for your AI systems? This is a cornerstone of both internal and international governance.

- Safety and Security: Are you conducting regular risk assessments and security checks? This reflects the global consensus on the need to build robust and secure AI.

By consciously linking your internal rules to these broader international standards, you're not just building a solid AI governance framework. You're building a smarter, safer, and more globally-minded business. This approach ensures your innovation doesn't just work in your local market—it works for the world.

Common Questions About AI Governance Answered

We’ve covered a lot of ground on what an AI governance framework is, why you need one, and how to build it. Now, let’s dig into the questions that almost always come up when people start putting these ideas into practice.

Think of this as a quick-and-dirty FAQ to help you get unstuck. Building a governance plan can feel like a mountain to climb, but it’s really about taking one smart step at a time.

Let’s tackle some of the most common questions I hear from entrepreneurs and teams just getting started.

How Do I Start If I Have a Tiny Team and No Budget?

This is easily the most common question, and for good reason. The great news is that you don't need a huge budget or a dedicated department to get started. Real governance begins with a change in mindset, not a huge check.

Start small. Focus on transparency and simply getting things down on paper. Create a basic "AI inventory" in a spreadsheet. List every AI tool you're using, from the chatbot on your website to the analytics tool that helps with marketing. For each one, just note what it does, who's in charge of it, and what could go wrong.

This simple act of cataloging is your first real step. It costs nothing but a bit of time and instantly gives you a map of your AI footprint.

The goal of an early-stage AI governance framework isn't to be perfect; it's to be present. Simply starting the conversation about risk and responsibility puts you light years ahead of those who don't.

What’s the Single Most Important Thing to Get Right?

If you can only focus on one thing, make it accountability.

When an AI system messes up, the absolute worst response is a collective shrug and a game of "not my problem." From day one, every single AI tool or model needs a designated owner. Period.

This doesn't have to be a hardcore tech person. It could be the head of marketing for a social media AI, or the head of sales for a CRM that scores leads. The owner’s job is simple: they are the go-to person responsible for the tool’s performance, its fairness, and its impact. This basic principle of clear ownership prevents dangerous gaps and ensures someone is always steering the ship.
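A quick way to check the "every tool has an owner" rule is to scan your inventory for blanks. The sketch below assumes the inventory is exported as CSV with `tool`, `purpose`, and `owner` columns; those column names and the sample rows are hypothetical, so adjust them to match whatever your spreadsheet actually uses.

```python
import csv
import io

# Hypothetical inventory, as it might look when exported from a spreadsheet.
INVENTORY_CSV = """tool,purpose,owner
Website chatbot,Answers customer questions,Head of Support
Lead scoring in CRM,Ranks sales leads,Head of Sales
Social media copy assistant,Drafts posts,
"""


def load_inventory(text):
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def tools_without_owner(rows):
    """Return the names of tools whose owner cell is empty or missing."""
    return [row["tool"] for row in rows if not (row.get("owner") or "").strip()]


rows = load_inventory(INVENTORY_CSV)
for tool in tools_without_owner(rows):
    print(f"No owner assigned: {tool}")
```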

How Do I Know If My Governance Framework Is Actually Working?

Measuring the success of a governance framework can feel a bit abstract, but you can absolutely track real-world signs that it’s working. Success isn't just about dodging bullets; it’s about enabling smarter, safer innovation.

Look for these positive indicators:

- Faster, More Confident Decisions: When your teams have clear guardrails, they spend less time second-guessing themselves and more time building.

- Fewer "Surprise" Problems: Are you catching potential issues like model bias or data privacy risks during development, instead of after a customer complains? That’s a massive win.

- Increased Team Awareness: Are your people proactively talking about ethics and fairness when brainstorming new projects? That cultural shift is a powerful sign that your framework is actually sinking in.

A widely cited Gartner forecast predicts that by 2026, organizations that operationalize AI transparency, trust, and security will see a 50% improvement in adoption, business goal achievement, and user acceptance for their AI models. Your framework is working when it feels less like a rulebook and more like a launchpad.

How Often Should I Update My Framework?

Your AI governance framework is not a "set it and forget it" document. Treat it as a living system that has to adapt as your company grows and the technology itself evolves.

Plan to review and refresh your framework at least once a year. You should also revisit it anytime one of these triggers occurs:

- You adopt a new, high-stakes AI system.

- A major new AI regulation is passed in your region.

- An incident happens that reveals a blind spot in your policies.

For example, a small e-commerce business might start with a simple chatbot, and their first framework would cover basic data privacy and customer interaction rules. But if they later decide to use an AI-powered pricing algorithm, that’s a major trigger. It's time to revisit the framework and add new rules for fairness and economic impact.
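The review rule described here (at least once a year, plus any trigger event) is simple enough to write down as a small check. This is only a sketch: the trigger names and the `review_due` function are hypothetical, and the 365-day interval simply mirrors the "once a year" suggestion above.

```python
from datetime import date, timedelta

REVIEW_INTERVAL = timedelta(days=365)

# Hypothetical labels for the three triggers listed above.
TRIGGERS = {"new_high_stakes_system", "new_regulation", "incident"}


def review_due(last_review, today, events=()):
    """Return the reasons a framework review is due (empty list if none)."""
    reasons = []
    if today - last_review >= REVIEW_INTERVAL:
        reasons.append("annual_review")
    # Ignore unknown event names and report each known trigger once.
    reasons.extend(sorted(event for event in set(events) if event in TRIGGERS))
    return reasons


# The e-commerce example: a pricing algorithm is adopted mid-year.
print(review_due(date(2025, 1, 10), date(2025, 6, 1)))
print(review_due(date(2025, 1, 10), date(2025, 6, 1), ["new_high_stakes_system"]))
```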

Ultimately, a good AI governance framework grows right alongside you. It starts as a simple checklist and evolves into a robust system that protects your business and builds trust with your customers.
