Convolutional Neural Networks Explained Simply for Beginners

Ever wonder how your phone instantly tags friends in photos, or how a self-driving car can spot a pedestrian on a busy street? That's the work of a Convolutional Neural Network, or CNN. It might sound super technical, but the core idea is surprisingly simple.

Think of it like teaching a child to recognize a cat. You don't show them one static picture and tell them to memorize it. Instead, they learn to spot the key features—pointy ears, whiskers, a long, swishy tail. A CNN does the same with digital images, breaking them down into basic patterns and features to figure out what it's looking at. It's one of the coolest and most powerful tools in the world of artificial intelligence today.

What Are CNNs and Why Do They Matter

At its heart, a convolutional neural network is a specific kind of artificial intelligence built to process and make sense of visual information. Traditional neural networks treat data like a simple, flat list of numbers. CNNs, however, are designed to see the spatial hierarchy within an image—they understand that pixels are arranged next to each other to form lines, shapes, and textures.

This unique structure gives them a massive advantage in tasks that we humans find second nature but have always been a huge challenge for machines. A CNN doesn't just see random pixels; it learns to identify the gentle curve of a smile or the sharp corner of a building. This is exactly why they've become a cornerstone of modern deep learning, the field dedicated to building these powerful, multi-layered networks.

If you're still getting your bearings, a handle on the differences between deep learning and machine learning will give you some great foundational context.

The Shift From Manual to Automatic Feature Finding

Before CNNs hit the mainstream, computer vision was a much more manual, tedious affair. Engineers and data scientists had to hand-craft "feature detectors" to tell the computer exactly what to look for—things like horizontal edges, specific color gradients, or simple shapes. This approach was incredibly brittle; it was slow, rigid, and fell apart when faced with the natural variations of the real world.

Expert Opinion: “CNNs flipped the script entirely by learning these features on their own. Instead of being told what matters, a CNN analyzes thousands, or even millions, of example images and automatically discovers which patterns are most important for a given task. This self-learning ability is what makes them so powerful and adaptable.”

Where Did CNNs Come From?

While they feel like a recent breakthrough, the core ideas behind CNNs have been percolating for decades. The story really starts back in 1980 with a Japanese researcher named Kunihiko Fukushima. He introduced the Neocognitron, a groundbreaking model directly inspired by the workings of the human visual cortex.

This early work laid the foundation for everything that came after, introducing the crucial concepts that allow a network to recognize a pattern regardless of where it appears in an image. It's a fascinating history that shows how these ideas grew from biological inspiration into the powerful technology we use today.

The Building Blocks of a CNN

Before we dive deep into the mechanics, let's get a high-level view of the key components that make a CNN tick. Each part has a specific job, and together, they form a powerful system for understanding images.

Key CNN Concepts at a Glance

| Component | Simple Analogy | Main Purpose |
|---|---|---|
| Convolution Layer | A magnifying glass with a specific pattern filter | To scan the image and detect features like edges, corners, and textures. |
| Filter (Kernel) | The pattern on the magnifying glass | A small matrix of numbers that defines the feature to be detected. |
| Activation Function | An "interestingness" meter | To introduce non-linearity, helping the network learn more complex patterns. |
| Pooling Layer | Shrinking the image to get the gist | To reduce the image size (downsample) and make the detected features more robust. |
| Fully Connected Layer | A final committee vote | To take all the detected features and make a final classification or prediction. |

These are the fundamental pieces we'll be exploring. Understanding how they work together is the key to unlocking the power of convolutional neural networks.

Understanding the Core Building Blocks of a CNN

To really get what makes a convolutional neural network tick, we have to look under the hood at its essential parts. Think of it like a highly specialized assembly line built just for understanding images. Each station has a specific job, and by the end, a complex visual puzzle gets solved. The whole process moves logically, starting with simple features and ending with a final, intelligent decision.

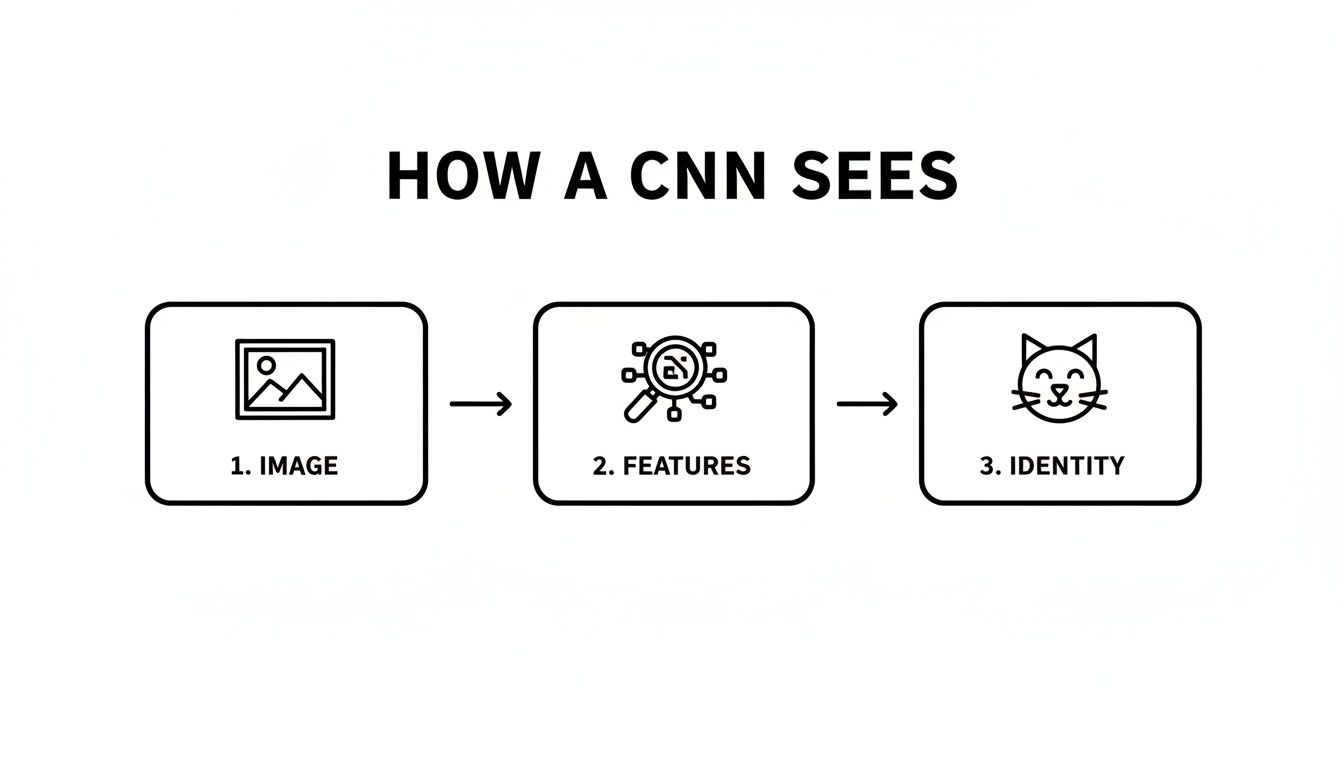

This quick overview shows the basic flow: the network takes an input image, uses filters to find features, and finally identifies what the image contains.

This visual really simplifies the journey from raw pixels to a confident prediction, which is the core strength of a CNN. Let's break down each step in this process.

The Convolutional Layer: The Feature Detective

The very first and most important part of the assembly line is the Convolutional Layer. This is where the real magic of feature detection begins. Imagine you're a detective scanning a photograph with a special magnifying glass, one designed to find only a single type of clue—let's say, vertical lines.

You'd slide this magnifying glass across the entire image, from left to right and top to bottom. Every time you find a strong vertical line, you make a note on a separate map. In a CNN, this magnifying glass is called a filter (or kernel), which is just a small matrix of numbers. The network doesn't have just one filter; it has dozens or even hundreds, each one looking for a different simple feature:

- Horizontal or vertical edges

- Specific colors or gradients

- Curves or corners

By applying all these filters, the convolutional layer transforms the original image into a set of feature maps. Each map highlights exactly where a specific feature was found, breaking down a complex picture into simple, understandable parts.
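To make the sliding-filter idea concrete, here is a minimal pure-Python sketch. The toy image, the hand-written vertical-edge filter, and the function name `convolve2d` are all illustrative assumptions; a real CNN learns its filter values during training and relies on an optimized library rather than nested loops.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Note: like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the filter with the patch under it and add everything up.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 5x5 grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-written filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

edge_map = convolve2d(image, vertical_edge)
for row in edge_map:
    print(row)  # each row is [3, 3, 0]: strong response at the edge, none in the flat area
```

The high values mark where the filter sits on the dark-to-bright boundary, and the zeros mark the uniform region where there is nothing to detect. That grid of responses is one feature map.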

The Activation Function: The Importance Filter

After a feature is detected, the network has to decide if it's significant enough to pass along. This is the job of the Activation Function. Think of it as an "interestingness" meter. If a feature is strong and clear (like a sharp edge), the activation function lets it pass through with a high score. If it's weak or irrelevant, it gets a low score or is ignored completely.

A very popular choice here is the Rectified Linear Unit (ReLU). Its rule is simple: if the input value is positive, let it through. If it's zero or negative, block it by changing it to zero. This straightforward step helps the network focus only on the important clues, making the whole system more efficient.
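The ReLU rule fits in a single line of code. The sketch below applies it to a handful of made-up feature values (the numbers are purely illustrative):

```python
def relu(x):
    """Rectified Linear Unit: keep positive values, turn everything else into zero."""
    return max(0.0, x)


# Hypothetical raw responses from a convolution filter.
feature_values = [-2.0, -0.5, 0.0, 1.5, 3.0]
activated = [relu(v) for v in feature_values]
print(activated)  # [0.0, 0.0, 0.0, 1.5, 3.0]
```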

Expert Opinion: "The beauty of ReLU is its simplicity. By introducing non-linearity—essentially, the ability to turn signals on or off—it allows the network to learn much more complex patterns than it could with simple linear functions alone. It was a major breakthrough for training deeper, more powerful networks."

The Pooling Layer: The Summary Artist

At this point, the network has a lot of detailed feature maps. To make things more manageable and faster, the Pooling Layer (sometimes called a subsampling layer) steps in. Its job is to create a condensed summary of each feature map while keeping the most important information.

Imagine taking a detailed drawing and creating a quick, small sketch that captures only the most essential outlines. That's basically what pooling does. The most common type is Max Pooling, which looks at a small window of pixels on a feature map and picks only the pixel with the highest value to carry forward.

This clever step achieves two things:

- It shrinks the size of the data, which drastically cuts down on the amount of computation needed in later stages.

- It makes the feature detection more robust. By focusing on the strongest signal in a neighborhood, the network becomes less sensitive to the exact position of a feature.
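Here is a small sketch of Max Pooling with a 2×2 window that moves two pixels at a time. The window size, the stride, and the example feature map are assumptions chosen to keep the numbers easy to follow.

```python
def max_pool2d(feature_map, size=2):
    """Keep the largest value from each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4x4 feature map.
pool_input = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 0, 3, 7],
]

pooled = max_pool2d(pool_input)
print(pooled)  # [[6, 2], [2, 9]]
```

The 4×4 map shrinks to 2×2, a quarter of the original data, while the strongest response in each neighborhood survives.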

The Fully Connected Layer: The Final Decision Maker

Finally, after passing through multiple cycles of convolution and pooling, the processed feature maps arrive at the Fully Connected Layer. This is the head detective's office. All the summarized clues—"found a pointy ear here," "detected whiskers there," "saw a furry texture"—are gathered together.

This layer works much like a traditional neural network, connecting every input to every output. If you'd like to dive deeper into that, our detailed guide on what a neural network is covers it. Its role is to look at the complete set of activated features and make the final classification. Based on the combination of features it sees, it calculates the probabilities for each possible label and makes its final call: "With 95% confidence, this is a cat."
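As a rough sketch of that final step, the code below feeds three made-up feature scores through one fully connected layer and then converts the results into probabilities with softmax, a common choice for this job. The feature names, weights, and labels are invented for illustration; a trained network would have learned its own weights.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: every input contributes to every output score."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Turn raw scores into probabilities that add up to 1."""
    shift = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical summarized clues: [pointy ears, whiskers, wheels]
features = [0.9, 0.8, 0.1]
labels = ["cat", "dog", "car"]

# Made-up weights: one row per label, one column per feature.
weights = [
    [2.0, 2.0, -1.0],    # cat
    [1.0, 0.5, -1.0],    # dog
    [-1.0, -1.0, 3.0],   # car
]
biases = [0.0, 0.0, 0.0]

scores = dense(features, weights, biases)
probabilities = softmax(scores)
prediction = labels[probabilities.index(max(probabilities))]
print(prediction, [round(p, 3) for p in probabilities])
```

With these particular numbers the "cat" score comes out highest, so the layer's final call is "cat".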

Exploring Famous CNN Architectures and Their Impact

Understanding the theory behind convolutional neural networks is one thing, but seeing how they evolved in the real world is where it all clicks. The history of AI isn't a straight line; it's a series of landmark models, each one a breakthrough that fundamentally changed what machines could see and understand.

These architectures aren't just academic footnotes. They are the giants whose shoulders modern computer vision stands upon. Each model you're about to meet solved a critical puzzle, paving the way for the sophisticated visual systems we now use in our phones, cars, and hospitals. Let’s take a walk through the hall of fame.

LeNet-5: The Original Pioneer

Long before AI was a household term, there was LeNet-5. Developed by the legendary Yann LeCun back in the 1990s, it was one of the very first CNNs to see practical use. Its mission was narrow but incredibly challenging for its time: reading handwritten digits on bank checks.

The design was elegant in its simplicity. LeNet-5 established the foundational pattern we still follow today: convolutional layers alternating with pooling layers to condense the information, and finally, a set of fully connected layers to make a decision. It was one of the first to show that a network could learn to spot visual patterns directly from raw pixels.

While it might look basic by today's standards, LeNet-5 was the crucial proof-of-concept that started it all. It showed the world this layered approach to learning features was the right path forward, laying the groundwork for every major CNN to come.

AlexNet: The Game Changer

After LeNet-5, progress in the field was steady but not spectacular. Then, in 2012, everything changed. A model named AlexNet stormed the prestigious ImageNet Large Scale Visual Recognition Challenge (ILSVRC), and it didn't just win—it completely blew the competition away.

AlexNet was bigger and deeper than the CNNs that came before it. It stacked several convolutional layers directly on top of one another and made clever use of the ReLU activation function, which helped it train much faster. Crucially, it was built to run on graphics processing units (GPUs), which could handle the immense computational load.

The results were jaw-dropping. AlexNet's victory in 2012 was the "big bang" moment for modern deep learning, achieving a top-5 accuracy of 84.7% (a top-5 error rate of 15.3%). This wasn't just a small improvement; it was nearly 11 percentage points ahead of the runner-up, whose top-5 error rate was 26.2%. This singular event ignited the deep learning boom we see today. You can get a deeper perspective on how these historical models shaped modern AI to understand the full story.

Expert Opinion: "AlexNet's success wasn't just about winning a competition; it was a profound shift in mindset. It showed the entire research community that deep, data-hungry neural networks, trained on powerful hardware, were the future of artificial intelligence."

VGGNet: The Architect of Simplicity

Riding the wave of AlexNet's success, researchers started asking, "How can we go even deeper?" One of the most elegant answers came in 2014 with VGGNet. Its core philosophy was beautiful: what if we just used small, uniform 3×3 filters and stacked them on top of each other, over and over again?

This simple, repeating structure made VGGNet incredibly easy to understand and build upon. Instead of wrestling with complex, bespoke layer designs, developers could create very deep networks (like VGG-16 or VGG-19) using a consistent, modular block. This combination of depth and simplicity was a home run.
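One way to see why stacking small filters pays off is to count what each option covers and costs. The short calculation below is a sketch that assumes stride-1 convolutions and counts weights for a single input/output channel pair; the helper names are made up for this example.

```python
def receptive_field(num_layers, kernel_size=3):
    """Width of the input patch one output value can 'see' after stacking
    `num_layers` stride-1 convolutions with the given kernel size."""
    return 1 + num_layers * (kernel_size - 1)


def weights_per_channel_pair(num_layers, kernel_size=3):
    """Number of filter weights for one input channel and one output channel."""
    return num_layers * kernel_size * kernel_size


two_stacked_3x3 = (receptive_field(2), weights_per_channel_pair(2))
single_5x5 = (receptive_field(1, 5), weights_per_channel_pair(1, 5))
three_stacked_3x3 = (receptive_field(3), weights_per_channel_pair(3))
single_7x7 = (receptive_field(1, 7), weights_per_channel_pair(1, 7))

print(two_stacked_3x3, single_5x5)    # (5, 18) (5, 25)
print(three_stacked_3x3, single_7x7)  # (7, 27) (7, 49)
```

Two stacked 3×3 layers see the same 5×5 patch as one 5×5 filter but with fewer weights, and they get an extra activation function in between.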

VGGNet quickly became a favorite in the AI community. Its straightforward design made it a perfect "backbone" for more complex tasks like object detection, where its learned features could be repurposed. The principles it championed are still fundamental to how we design networks today.

ResNet: The Deep Thinker

As networks like VGG got deeper, a strange and frustrating problem appeared. Logic suggests that adding more layers should make a network more powerful. But in practice, engineers found that after a certain depth, performance would actually get worse, even on the training data. The models were getting too deep to train effectively, a roadblock the ResNet authors called the degradation problem and one that is often discussed alongside the vanishing gradient problem.

Enter ResNet (Residual Network) in 2015 with a truly ingenious solution: "skip connections." These are little shortcuts that allow information to bypass a few layers and jump ahead. Think of it like giving the network a direct line to its earlier notes, so it doesn't forget crucial information as it processes data through dozens of layers.

This simple trick was a game-changer. It allowed researchers to build and train networks that were previously unimaginable—some with over 150 layers—without a drop in performance. ResNet shattered records at the ImageNet competition and completely redefined how we build deep models. It proved that, with the right architecture, we could push the boundaries of depth to unlock incredible new levels of accuracy.
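The skip connection described above boils down to one addition: the block's output is its input plus whatever the layers inside have learned. The sketch below uses plain lists and a stand-in `transform` function in place of real convolutional layers, so treat it as an illustration of the idea rather than ResNet's actual code.

```python
def relu(x):
    return max(0.0, x)


def residual_block(x, transform):
    """Return relu(transform(x) + x): the '+ x' is the skip connection."""
    fx = transform(x)
    return [relu(f + xi) for f, xi in zip(fx, x)]


def zero_transform(x):
    """Stand-in for layers that have learned nothing useful yet."""
    return [0.0 for _ in x]


def small_correction(x):
    """Stand-in for layers that have learned a small adjustment."""
    return [0.5 for _ in x]


signal = [0.5, 1.0, 2.0]
passed_through = residual_block(signal, zero_transform)
adjusted = residual_block(signal, small_correction)
print(passed_through)  # [0.5, 1.0, 2.0]
print(adjusted)        # [1.0, 1.5, 2.5]
```

Even when the inner layers contribute nothing, the input still reaches the next block intact, which is why adding more blocks does not have to make things worse.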

Comparing Famous CNN Architectures

To see this evolution at a glance, it helps to compare these landmark models side by side. Each one built upon the last, introducing a key idea that pushed the entire field of computer vision forward.

| Architecture | Year of Impact | Key Innovation | Primary Use Case |
|---|---|---|---|
| LeNet-5 | 1998 | The first practical CNN, using the classic conv-pool-fc structure. | Handwritten digit recognition (e.g., checks). |
| AlexNet | 2012 | Deep architecture, used ReLU and GPUs to win ImageNet. | Kickstarted the deep learning revolution in image classification. |
| VGGNet | 2014 | Showcased the power of very deep networks using simple, small (3×3) filters. | Popular as a feature-extraction backbone for other vision tasks. |
| ResNet | 2015 | Introduced "skip connections" to solve the vanishing gradient problem. | Enabled training of extremely deep networks (100+ layers). |

From the humble beginnings of LeNet-5 to the incredible depth of ResNet, this journey shows a clear pattern of innovation. Each architecture wasn't just an incremental improvement; it was a conceptual leap that unlocked new possibilities for what machines could learn to see.

How a Convolutional Neural Network Actually Learns

So, we've covered the building blocks and the big-name architectures. But how does a CNN go from seeing a meaningless jumble of pixels to confidently identifying a cat in a photo? An intelligent model isn't born smart—it has to be trained.

Think of it like a student cramming for a huge exam. The process is a cycle of guessing, checking the answer key, and correcting mistakes until the concepts finally click.

Let's say you show the brand-new network an image of a cat and ask, "What is this?" At the start, all its internal parameters (the weights) are set to random values. Its first guess might be completely off the mark, like "a car." This is where the learning journey begins.

The network then compares its prediction ("car") to the correct label ("cat"). It needs a way to measure just how wrong it was, and for that, it uses a mathematical formula called a loss function. A massive error produces a high loss score, while a near-perfect guess results in a low one. The ultimate goal? Get that loss score as close to zero as possible.
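The article doesn't name a specific loss function, so the sketch below assumes cross-entropy, a common choice for classification. It compares a confident wrong guess with a confident right one; the probability values are invented for the example.

```python
import math


def cross_entropy(probabilities, true_index):
    """Loss is the negative log of the probability given to the correct label."""
    return -math.log(probabilities[true_index])


labels = ["cat", "dog", "car"]
correct = labels.index("cat")

# Hypothetical network outputs for the same cat photo.
bad_guess = [0.05, 0.05, 0.90]    # confidently says "car"
good_guess = [0.95, 0.03, 0.02]   # confidently says "cat"

loss_bad = cross_entropy(bad_guess, correct)
loss_good = cross_entropy(good_guess, correct)
print(round(loss_bad, 3), round(loss_good, 3))  # 2.996 0.051
```

The wrong guess is penalized with a loss near 3, while the right one scores close to zero, which is exactly the signal training needs.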

Correcting Mistakes with Backpropagation

This is where the magic happens. The network uses a powerful algorithm called backpropagation to work backward from its mistake. It traces the error all the way back through its layers, figuring out precisely which weights were most responsible for the incorrect guess.

It’s like that student realizing they bombed a practice question. They’d go back through their notes to see where their logic went wrong and adjust it. "Okay, that connection I made was faulty. Let me tweak my understanding." Backpropagation does the same thing, making tiny adjustments to thousands or even millions of weights, nudging them in a direction that would have produced a better answer. This cycle repeats over and over—sometimes for millions of images—and with each pass, the network gets a little bit smarter.
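To show the "work backward from the mistake" idea in miniature, here is a sketch of a two-weight network (one weight per layer, no activation function) trained with backpropagation and gradient descent. The input, target, starting weights, and learning rate are arbitrary assumptions; real networks repeat the same chain-rule steps across millions of weights.

```python
def train_tiny_network(x, target, w1=0.5, w2=0.5, learning_rate=0.1, steps=50):
    """Train output = w2 * (w1 * x) to match `target` using squared error."""
    losses = []
    for _ in range(steps):
        # Forward pass: compute the guess.
        hidden = w1 * x
        output = w2 * hidden
        loss = (output - target) ** 2
        losses.append(loss)

        # Backward pass: trace the error back through each layer (chain rule).
        d_output = 2 * (output - target)   # how the loss changes with the output
        grad_w2 = d_output * hidden        # blame assigned to the second weight
        d_hidden = d_output * w2           # error passed back to the hidden value
        grad_w1 = d_hidden * x             # blame assigned to the first weight

        # Nudge each weight a little in the direction that lowers the loss.
        w1 -= learning_rate * grad_w1
        w2 -= learning_rate * grad_w2
    return w1, w2, losses


w1, w2, losses = train_tiny_network(x=1.0, target=2.0)
print(round(w1 * w2, 4))       # product of the weights ends up very close to 2.0
print(losses[0], losses[-1])   # the loss starts at 3.0625 and shrinks toward zero
```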

If you want to dig deeper into this feedback loop, our guide on how to train a neural network breaks it down even further.

Avoiding the Pitfall of Memorization

One of the biggest hurdles in training is a problem called overfitting. This is the classic case of a student who just memorizes the answers to the practice test. They'll ace that specific test, but they haven't actually learned the material. So, when the real exam comes with slightly different questions, they completely fall apart.

An overfit CNN is the same. It performs flawlessly on the images it was trained on but fails miserably when shown new, unseen data. To prevent this, we have a few clever tricks up our sleeves.

Expert Insight: "Overfitting is the enemy of generalization. A model that only memorizes has learned nothing. The key is to teach it the underlying patterns, not just the specific examples you show it. Techniques like data augmentation are essential for building robust, real-world models."

Smart Training Strategies for Better Learning

To build a truly effective CNN, you don't just dump data into it and hope for the best. Experts rely on proven strategies to make the training process more efficient and the final model more robust. Two of the most important are Data Augmentation and Transfer Learning.

Here’s how they work:

- Data Augmentation: This is like giving our student-model slightly different versions of the same practice problem. We take our training images and create modified copies—rotating them, flipping them horizontally, zooming in, or subtly changing the colors. This teaches the network that a cat is still a cat, even if it's viewed from a different angle or under different lighting. It forces the model to learn the real features of a "cat" and makes it far more resilient.

- Transfer Learning: Why start from scratch when you can stand on the shoulders of giants? Transfer learning lets us take a powerful, pre-trained model (like a ResNet that has already learned to recognize features from millions of images) and then fine-tune it for our specific task. It’s like starting with a graduate-level student instead of a kindergartener. This approach dramatically cuts down on training time and often produces better results, especially when you don't have a massive dataset of your own.
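Two of the data augmentation tricks mentioned above, flipping and rotating, can be sketched in a few lines of plain Python. The tiny 2×3 "image" is a made-up example; in practice a framework's augmentation utilities would apply these transformations on the fly during training.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]


# Hypothetical 2x3 grayscale image.
original = [
    [1, 2, 3],
    [4, 5, 6],
]

flipped = flip_horizontal(original)
rotated = rotate_90_clockwise(original)
training_examples = [original, flipped, rotated]  # three samples from one image

print(flipped)  # [[3, 2, 1], [6, 5, 4]]
print(rotated)  # [[4, 1], [5, 2], [6, 3]]
```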

Real-World CNN Applications You Use Every Day

It’s easy to think of a convolutional neural network as some abstract concept locked away in a research lab. But the truth is, this technology is already quietly powering the devices and apps you interact with every single day.

You probably used one this morning. That facial recognition feature that unlocked your phone? That’s a CNN, analyzing the unique contours of your face in a split second to confirm it's you. It’s become such a common feature that we barely even notice it anymore.

This kind of magic happens all over the place, especially on social media. When you upload a group photo and the platform instantly suggests tagging your friends, you're seeing a CNN at work. It has learned to recognize specific faces from your past photos and can now pick them out of a new image with surprising accuracy.

From Social Feeds to Saving Lives

But the applications go much deeper than convenience. CNNs are becoming indispensable in modern medicine. In medical imaging, they serve as a second set of expert eyes, scanning X-rays, CTs, and MRIs to catch tiny anomalies that might indicate disease—subtle patterns a human radiologist could potentially overlook after a long day.

This isn't about replacing doctors; it's about giving them better tools. The result is earlier detection and better patient outcomes.

Expert Opinion: “In diagnostics, CNNs provide a level of consistency and speed that is difficult for humans to match. They can analyze thousands of images without fatigue, flagging potential anomalies for a doctor's review. This partnership between human intuition and machine precision is the future of medical analysis.”

The progress here is staggering, at least going by the figures often quoted. Hardware like NVIDIA's A100 GPUs is said to train these models up to 10,000x faster than what was possible back in 2012, and the computer vision market is projected to hit $20 billion by 2025. CNNs are reported to drive as much as 80% of medical imaging analysis, and some 2023 studies describe models capable of detecting 94% of lung cancers in CT scans. You can dive deeper into how CNNs are revolutionizing industries on Wikipedia.

Powering the Future of Transportation and Commerce

Look no further than the automotive industry to see another massive shift. Self-driving cars rely on CNNs as their digital eyes, processing a constant flood of visual information from cameras to make sense of the world.

In real time, these networks are handling critical tasks:

- Identifying pedestrians and cyclists to keep them safe.

- Reading traffic signs and reacting to speed limits.

- Detecting lane markings to stay on course.

This ability to interpret a chaotic, fast-moving environment is the bedrock of autonomous driving.

CNNs are also changing the way we shop. Have you ever used a visual search feature on an e-commerce app, where you snap a picture of an item to find something similar online? That’s a CNN breaking down the image and matching it against a massive product catalog. It's the same technology behind the automated checkout systems in some stores, where cameras identify everything in your cart without a single barcode scan.

Getting Started with Your First CNN

Ready to roll up your sleeves and build your first CNN? Let's get you started. This isn't about throwing a wall of code at you; it's about giving you a solid game plan to begin experimenting, which is the most critical step on your deep learning journey.

The best part? You don't need a massive server rack in your basement. Thanks to some incredible tools, building your first neural network is more accessible than it's ever been, and many of the best resources won't cost you a thing.

Choosing Your Toolkit

First things first, you need a deep learning framework. Think of it as a professional-grade workshop full of pre-built parts—like layers, optimizers, and activation functions—that you can snap together to assemble your network. For both newcomers and seasoned pros, the conversation almost always comes down to two big names: TensorFlow and PyTorch.

- TensorFlow (with Keras): This is usually the go-to recommendation for beginners, and for good reason. Its Keras API is beautifully simple. You can literally stack layers one on top of the other with a clear, intuitive syntax, making it the perfect way to build an understanding of how these models are put together.

- PyTorch: A favorite in the research world, PyTorch is known for feeling more like regular Python. It offers a ton of flexibility and fine-grained control, which is fantastic once you want to start tinkering with custom parts of your model. The learning curve is a bit steeper, but many find the control it offers is well worth it.

Expert Opinion: "Honestly, don't get stuck on the TensorFlow vs. PyTorch debate for your first project. Just pick one and go. I usually tell people to start with TensorFlow and Keras because its high-level, straightforward approach helps you grasp the architecture without getting bogged down in boilerplate code. You can always pick up PyTorch later."

Your First Training Grounds: Datasets and Platforms

A model is useless without data to learn from. Luckily, the machine learning community has a few classic "starter" datasets that are perfect for getting your feet wet. They’re clean, well-structured, and small enough that you won't be waiting days for your model to train.

- MNIST: This is the quintessential "hello, world" of computer vision. It's a collection of 70,000 small grayscale images of handwritten digits (0 through 9) that makes for a perfect first image classification task.

- CIFAR-10: A nice step up in complexity. This dataset has 60,000 tiny color images sorted into 10 classes of common objects, like airplanes, cars, birds, and frogs.

You also need a place to actually run your code. This is where free, cloud-based notebooks like Google Colab are a game-changer. They give you a coding environment that runs right in your browser, and crucially, they offer free access to GPUs. These are the specialized processors that make training a CNN go from taking hours to just minutes. It means you can start building powerful models without spending a penny on hardware.

A Few Lingering Questions About CNNs

As we wrap up our deep dive into convolutional neural networks, you might still have a few questions buzzing around. That’s perfectly normal. Let's tackle some of the most common ones to help tie everything together.

Think of this as a quick chat to clear up any final cloudy spots.

Why Not Just Use a Regular Neural Network for Images?

The short answer? Efficiency. A standard neural network, sometimes called a fully connected network, would be a computational nightmare for image processing.

Imagine connecting every single pixel of a 1080p color image to every neuron in the first hidden layer. You'd end up with billions of connections, or parameters, to train. It would be incredibly slow and almost guaranteed to overfit by just memorizing the training images instead of learning actual features.

Convolutions are the elegant solution. By using small filters with shared weights that slide across the image, CNNs drastically cut down on the number of parameters. That little 3×3 filter looking for a vertical edge is the same filter used everywhere on the image. This clever design makes them faster, more efficient, and better at generalizing what they learn.
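A quick back-of-the-envelope calculation shows the size of the gap. The layer sizes below (1,000 hidden neurons and 64 filters) are assumptions picked for illustration, and bias terms are left out to keep the arithmetic simple.

```python
# A 1080p color image: width x height x 3 color channels.
width, height, channels = 1920, 1080, 3
input_values = width * height * channels

# Fully connected: every input value connects to every hidden neuron.
hidden_neurons = 1000  # assumed layer size
dense_weights = input_values * hidden_neurons

# Convolutional: each 3x3 filter is reused at every position in the image.
num_filters = 64  # assumed number of filters
conv_weights = 3 * 3 * channels * num_filters

print(f"{input_values:,}")   # 6,220,800
print(f"{dense_weights:,}")  # 6,220,800,000
print(f"{conv_weights:,}")   # 1,728
```

Under these assumptions the fully connected layer needs over six billion weights, while the convolutional layer needs fewer than two thousand.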

Expert Opinion: “Parameter sharing is the secret sauce of CNNs. It’s built on a very intuitive idea: if a feature like a curve or an edge is important in one corner of an image, it’s probably important elsewhere too. This assumption is what allows them to scale so effectively for visual recognition.”

Are CNNs Only Good for Pictures?

Not at all! While computer vision is where they made their name, the core idea—detecting local patterns in grid-like data—is surprisingly versatile. As long as the data has some kind of spatial structure where proximity matters, a CNN can probably find a use.

Here are a few places you’ll find CNNs working their magic outside of image recognition:

- Audio Processing: When sound is converted into a spectrogram (a visual map of frequencies over time), it can be treated much like a 2D image. CNNs can scan these spectrograms to pick out the patterns of human speech or identify musical genres.

- Text Analysis: Sentences can be transformed into matrices of word embeddings. A CNN can then slide across these matrices, detecting key phrases or word combinations that signal positive or negative sentiment.

- Time-Series Data: Think of stock market data or readings from an industrial sensor. A 1D CNN can analyze these sequences to spot recurring patterns, predict future values, or flag anomalies.
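The same sliding-filter trick works on a plain sequence of numbers. In this sketch, a hand-written three-value filter scans some invented sensor readings and reacts strongly to a sudden spike; a real 1D CNN would learn its filter values from data instead.

```python
def convolve1d(signal, kernel):
    """Slide `kernel` along `signal` (no padding, stride 1)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


# Hypothetical sensor readings with one anomaly in the middle.
readings = [1, 1, 1, 5, 1, 1, 1]

# Hand-written filter that responds when the middle value towers over its neighbors.
spike_detector = [-1, 2, -1]

response = convolve1d(readings, spike_detector)
print(response)  # [0, -4, 8, -4, 0]

# The strongest response lines up with the window centered on the spike.
spike_position = response.index(max(response)) + 1  # +1 shifts to the window's center
print(spike_position)  # 3
```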

This adaptability is a huge part of what makes the convolutional neural network such a powerful tool.

Here at YourAI2Day, our mission is to break down complex AI topics and make them easy to understand. Whether you're exploring neural networks or catching up on AI news, we're here to help you learn. Continue your journey by exploring more resources on our platform at https://www.yourai2day.com.