Mastering Feature Engineering for Machine Learning

Feature engineering is where you take raw, often messy data and carefully shape it into clean, insightful "features" that a machine learning model can actually work with. Think of it less as data cleaning and more as the creative art of selecting, transforming, and even inventing the variables that will give your model the best shot at success. It's truly where human smarts meet machine power.

What Is Feature Engineering And Why It Matters

Imagine you're a detective handed a box full of jumbled evidence for a big case. Inside, you find blurry photos, random receipts, and notes scribbled on napkins. As they are, these items are just a pile of confusing junk. But a good detective knows how to piece it all together.

That's precisely what you're doing with feature engineering for machine learning. The messy evidence is your raw data. Your detective work is the process of creating clear, meaningful clues. You’re not altering the facts; you’re just presenting them in a way that makes the underlying story jump out. Maybe you group those receipts by location to map out a suspect's movements or enhance the blurry photos to create a clear timeline of events.

An AI model, no matter how sophisticated, is lost without this kind of prep work. It needs you to create features that highlight the important patterns. As we cover in our guide on what machine learning is, models learn from the data you give them, so the quality of that data is everything.

The True Engine Of Model Performance

A common mistake for beginners is obsessing over the algorithm. They'll spend ages debating whether to use a random forest or a complex neural network, assuming the model choice is the key to a great result.

But talk to any data scientist who’s been in the trenches, and they’ll tell you where the real magic happens.

"Feature engineering is the secret sauce. A simple model with excellent features will almost always outperform a complex model with poor ones. It's where domain knowledge and creativity have the biggest impact." – Dr. Andrew Ng, AI pioneer and Coursera co-founder.

This isn’t just a nice sentiment; it’s a fundamental truth in machine learning. Better features directly translate into better outcomes.

Before we dive deeper, let's look at exactly how this process pays off.

How Feature Engineering Boosts Your AI Model

| Benefit | What It Means | Real-World Example |
|---|---|---|
| Improved Accuracy | Well-crafted features make patterns in the data more obvious, allowing the model to make more precise predictions. | Instead of a raw timestamp, create features like "day of the week" or "is_holiday" to predict retail sales. |
| Faster Training Times | With cleaner, more potent data, models don't have to work as hard to find the underlying signals, which significantly speeds up training. | Scaling numerical features (like income and age) to a similar range helps gradient-based models converge much faster. |
| Enhanced Interpretability | Features that reflect real-world concepts make it easier for humans to understand why a model made a specific decision. | Creating an "age_group" category (e.g., 18-25, 26-35) from a birthdate is far more intuitive than a raw date. |

Ultimately, good feature engineering makes your entire project more robust, efficient, and understandable.

Why It's Still Crucial Today

You might hear that modern deep learning models can handle feature extraction automatically, and to some extent, they can. But for the vast majority of business problems that rely on structured data (think spreadsheets and databases), manual feature engineering remains absolutely vital.

Time and again, experts find that teams get a bigger return on their investment by focusing on creating better features rather than just building more complex models. The global machine learning market is set to grow with a CAGR of about 31.7% from 2025–2031, and this growth is fueled by effective, foundational data practices.

As emphasized in Coursera's expert blog, this is the critical step that transforms raw data's potential into real-world performance.

The Core Techniques for Any Machine Learning Project

Alright, now that we understand why feature engineering is so critical, let's get our hands dirty with the how. Think of these next few techniques as your foundational toolkit. You'll find yourself reaching for them on pretty much every single machine learning project you tackle.

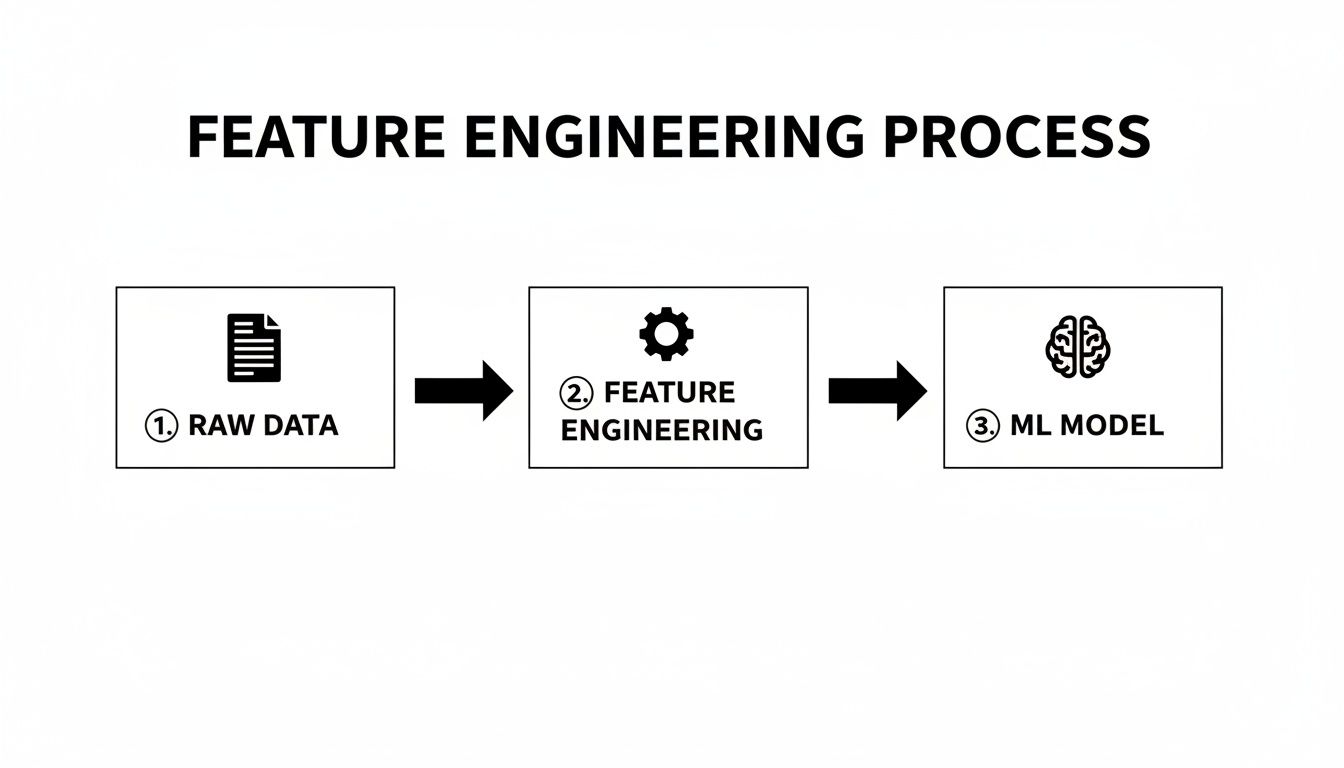

We'll start with the basics, breaking down how to handle the messy, real-world data you're bound to encounter. The process looks something like this:

As you can see, feature engineering is that crucial step that turns raw information into something a model can actually learn from.

Taming the Wild West of Missing Data

Let’s be honest: real-world data is never clean. You'll almost always open a dataset to find gaps and blank cells where information should be. Just ignoring these gaps can crash your model or, even worse, send its predictions spiraling into inaccuracy. So, what's the game plan?

Your first instinct might be to just delete any rows with missing values. While simple, this is often a terrible idea. You risk throwing out a ton of valuable information, which is especially damaging if you don't have a massive dataset to begin with.

A much smarter approach is imputation—a fancy term for strategically filling in those blanks.

Here are a few go-to strategies:

- Mean/Median Imputation: For numerical data like 'age' or 'income', you can fill the gaps with the column's average (mean) or its middle value (median). The median is often the safer bet because it isn't skewed by a few unusually high or low numbers.

- Mode Imputation: When you're dealing with categories like 'city' or 'product type', the best move is often to fill missing values with the most common entry (the mode). If 'New York' is the most frequent city in your customer data, you'd fill the blank city fields with 'New York'.

- Creating an Indicator Column: Here's a clever trick: sometimes, the very fact that data is missing is a signal in itself. You can create a new binary feature (e.g., 'is_age_missing') that is set to 1 if the age was missing and 0 if it wasn't. This lets the model know that those specific gaps might be important.

"Don't just delete missing data on autopilot. The absence of a value can be just as informative as its presence. Always ask why the data is missing before deciding how to handle it." – Cassie Kozyrkov, Chief Decision Scientist at Google.

Ultimately, the right method depends on your data and the problem you're trying to solve. Don't be afraid to experiment to see what works best.
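To make the three strategies above concrete, here is a minimal pandas sketch. The DataFrame and its column names (age, city, is_age_missing) are made up for illustration; treat it as one reasonable way to do this, not the only one.

```python
import numpy as np
import pandas as pd

# Hypothetical customer data with gaps; values are invented for illustration.
df = pd.DataFrame({
    "age": [25, np.nan, 40, 31, np.nan, 90],
    "city": ["New York", "Boston", None, "New York", "New York", "Boston"],
})

# Indicator column first, so the "was missing" signal is recorded before filling.
df["is_age_missing"] = df["age"].isna().astype(int)

# Median imputation for the numeric column (less sensitive to the outlier 90).
age_median = df["age"].median()
df["age"] = df["age"].fillna(age_median)

# Mode imputation for the categorical column (most frequent value).
city_mode = df["city"].mode()[0]
df["city"] = df["city"].fillna(city_mode)

print(df)
```

Note the order: the indicator is built before the blanks are filled, otherwise there is nothing left to flag.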

Turning Words into Numbers with Encoding

Machine learning models are mathematical powerhouses, but they have a major limitation: they don't understand text. A column full of words like 'USA', 'Canada', or 'Mexico' is completely meaningless to them. We have to translate that text into a numerical format through a process called encoding.

Imagine you're trying to predict which customers might cancel their subscriptions. One of your data points is the customer's plan: 'Basic', 'Pro', or 'Enterprise'. An algorithm can't do anything with those words.

This is where a technique called One-Hot Encoding shines. It takes that single 'Subscription Plan' column and cleverly converts it into three new binary columns:

- is_Basic

- is_Pro

- is_Enterprise

So, for a customer on the 'Pro' plan, the new columns would look like this: is_Basic: 0, is_Pro: 1, and is_Enterprise: 0. This approach makes the categories crystal clear to the model without accidentally suggesting that one plan is "greater" or "less" than another. To see what kinds of models use these features, check out our guide explaining a variety of machine learning algorithms.
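Here is a small sketch of the subscription-plan example using pandas. The column name plan and the sample rows are assumptions made for the demo; pd.get_dummies is just one of several ways to one-hot encode.

```python
import pandas as pd

# Hypothetical subscription data; "plan" is an illustrative column name.
df = pd.DataFrame({"plan": ["Basic", "Pro", "Enterprise", "Pro"]})

# prefix="is" produces is_Basic, is_Enterprise, is_Pro (alphabetical order);
# dtype=int gives 0/1 values instead of booleans.
encoded = pd.get_dummies(df["plan"], prefix="is", dtype=int)

# Reorder the columns to match the order used in the text.
encoded = encoded[["is_Basic", "is_Pro", "is_Enterprise"]]

print(encoded)
```

The second row (a 'Pro' customer) comes out as 0, 1, 0, matching the example above.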

Putting Features on an Even Playing Field

Picture a dataset with two features: 'customer age' (ranging from 18 to 80) and 'number of purchases' (ranging from 1 to 5). Because the numbers for age are so much larger, an algorithm that relies on distances or gradient descent can end up treating age as the more important feature simply because of its scale. This kind of bias can completely throw off your model.

Feature scaling prevents this by resizing all numerical features to a common scale, ensuring a level playing field.

The two most popular ways to do this are:

- Normalization (Min-Max Scaling): This method squishes or stretches all values to fit neatly within a specific range, usually 0 to 1. It’s perfect for algorithms that don’t rely on data following a specific distribution.

- Standardization (Z-score Scaling): This approach transforms the data so that it has a mean of 0 and a standard deviation of 1. It's the default choice for many, especially for models that expect features to be centered and on comparable scales. Keep in mind that it shifts and rescales the values but doesn't change the shape of their distribution.

By scaling your features, you make sure no single variable bullies the others just because its numbers are bigger. This almost always leads to faster model training and more reliable results.
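Both scaling methods boil down to a one-line formula. The sketch below applies them by hand with pandas to a toy version of the age and purchases example; the column names and values are invented for illustration.

```python
import pandas as pd

# Toy data mirroring the example: age spans 18-80, purchases span 1-5.
df = pd.DataFrame({
    "customer_age": [18, 35, 52, 80],
    "num_purchases": [1, 3, 2, 5],
})

# Normalization (min-max): (x - min) / (max - min), so every column lands in [0, 1].
normalized = (df - df.min()) / (df.max() - df.min())

# Standardization (z-score): (x - mean) / std, giving mean 0 and std 1 per column.
# ddof=0 uses the population standard deviation.
standardized = (df - df.mean()) / df.std(ddof=0)

print(normalized)
print(standardized)
```

In a real project, the min, max, mean, and standard deviation should be computed on the training data only and then reused for any new data.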

Creating Powerful Features From Your Data

So far, we’ve built a solid foundation by cleaning up missing data and getting our features on the same scale. Now comes the really fun part—where your creativity and deep understanding of the problem truly shine. We're shifting gears from simply cleaning the data to actively inventing new features that tell a much richer, more insightful story.

Think of it like being a chef. Your raw dataset is a pile of ingredients: flour, sugar, eggs. The steps we've covered so far were like measuring everything correctly and making sure it's all ready to go. Now, you get to be the one who combines them to bake a cake. That cake—a brand-new creation—is way more valuable than just the sum of its parts.

This process is often called feature creation or generating derived features, and it's a cornerstone of any serious machine learning project.

Unlocking Insights With Feature Creation

At its heart, feature creation is about combining or transforming the variables you already have to produce new ones that make more sense to your model. Your raw data is full of hidden relationships and patterns that aren't obvious at first glance. By creating new features, you drag those relationships out into the open.

Let's take a classic e-commerce example. Imagine you have a dataset with order_date and delivery_date columns. By themselves, they’re just timestamps. But with a bit of ingenuity, you can craft a far more powerful feature.

If you subtract the order date from the delivery date, you create shipping_duration. Suddenly, you have a single, potent feature that could be a massive predictor of customer satisfaction. A model can easily learn that a long shipping_duration often leads to unhappy customers—a pattern that would be much harder for it to figure out from the raw timestamps alone.

That’s the whole idea behind feature creation. You take what you have and make something better. Here are a few common ways to do it:

- Combining variables: Add, subtract, multiply, or divide columns to create meaningful ratios or sums. For example, in real estate, creating a price_per_square_foot feature by dividing house_price by square_footage is incredibly powerful.

- Extracting date components: Pull out the day of the week, the month, or the season from a timestamp.

- Binning continuous numbers: Group ages into practical categories like 'Young Adult', 'Middle-Aged', and 'Senior'.
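Here is a rough pandas sketch of these ideas, including the shipping_duration example from earlier. For brevity it lumps unrelated example columns into one toy DataFrame, and the age bin edges (35 and 60) are arbitrary assumptions, not a standard.

```python
import pandas as pd

# Toy data; one frame holds columns from several examples purely for brevity.
df = pd.DataFrame({
    "order_date": pd.to_datetime(["2024-03-01", "2024-03-09"]),
    "delivery_date": pd.to_datetime(["2024-03-04", "2024-03-19"]),
    "house_price": [300_000, 450_000],
    "square_footage": [1_500, 1_800],
    "age": [23, 67],
})

# Combining variables: a difference and a ratio.
df["shipping_duration"] = (df["delivery_date"] - df["order_date"]).dt.days
df["price_per_square_foot"] = df["house_price"] / df["square_footage"]

# Extracting date components from a timestamp.
df["order_day_of_week"] = df["order_date"].dt.day_name()
df["order_month"] = df["order_date"].dt.month

# Binning a continuous number into labeled groups (bin edges are an assumption).
df["age_group"] = pd.cut(
    df["age"],
    bins=[0, 35, 60, 120],
    labels=["Young Adult", "Middle-Aged", "Senior"],
)

print(df[["shipping_duration", "price_per_square_foot",
          "order_day_of_week", "order_month", "age_group"]])
```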

"The goal of feature creation isn't just to add more columns. It's to distill domain knowledge into a format the algorithm can understand. A single well-crafted feature can be more powerful than a dozen raw data points." – Experienced Data Scientist

This creative step is what often separates a decent model from a fantastic one. It’s where your human intuition gives the machine a massive head start.

Basic vs. Advanced Feature Engineering Techniques

To give you a clearer picture, let's compare some basic techniques with more advanced ones. This comparison helps you understand when to apply different methods based on your project's needs and the type of data you're working with.

| Technique Type | Examples | Best Used For |
|---|---|---|
| Basic | One-Hot Encoding, Label Encoding | Handling simple categorical data, creating baseline models. |
| Basic | Min-Max Scaling, Standardization | Preparing numerical features for distance-based algorithms like SVMs. |
| Basic | Polynomial Features (e.g., squaring a feature) | Capturing simple non-linear relationships in the data. |
| Advanced | Target Encoding, Feature Hashing | Handling high-cardinality categorical features without creating too many columns. |
| Advanced | TF-IDF, Word Embeddings (Word2Vec) | Extracting nuanced meaning and context from unstructured text data. |
| Advanced | Fourier Transforms, Wavelet Transforms | Analyzing cyclical patterns and signals in time-series data. |

Starting with the basics is always a good strategy, but knowing when to reach for more advanced tools can dramatically improve your model's performance, especially with complex datasets.

Understanding How Features Work Together

Sometimes, the real story isn't in one feature or another, but in how they interact. For instance, in a marketing campaign, spending more on ads usually leads to more sales. But what if the effectiveness of that ad spend skyrockets during the holiday season?

This is where interaction features come into play. An interaction feature is created by multiplying two or more features together to capture their combined effect.

Imagine a model for predicting hotel booking prices. Two of your key features might be is_weekend (a binary 1 or 0) and is_summer (also binary). Individually, both probably push the price up. However, a summer weekend is likely far more expensive than just the added cost of a weekend plus the added cost of a summer day.

To capture this synergy, you can create a new feature: weekend_summer_peak = is_weekend * is_summer. This feature will only be 1 when both conditions are true, giving the model a powerful, explicit signal to recognize this peak pricing period. Without this interaction term, the model might struggle to learn this complex relationship on its own.
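In code, the interaction term is a single multiplication. This sketch uses a made-up bookings table covering all four weekend/summer combinations.

```python
import pandas as pd

# Hypothetical bookings: every combination of the two binary flags.
bookings = pd.DataFrame({
    "is_weekend": [0, 1, 0, 1],
    "is_summer": [0, 0, 1, 1],
})

# The product is 1 only when both flags are 1.
bookings["weekend_summer_peak"] = bookings["is_weekend"] * bookings["is_summer"]

print(bookings)
```

Only the last row, a summer weekend, gets a 1 in the new column.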

Turning Text Into Meaningful Numbers

As we've mentioned, machine learning models speak the language of numbers, not words. We’ve covered One-Hot Encoding for simple categories, but what do you do with more complex text, like customer reviews or product descriptions? This is a massive field within feature engineering.

A simple yet surprisingly effective starting point is the Bag-of-Words (BoW) model. Imagine you have two short customer reviews:

- "Great service, fast delivery."

- "Slow delivery, bad service."

The BoW approach completely ignores grammar and word order and just counts how many times each word appears. First, you build a vocabulary of all the unique words ('great', 'service', 'fast', 'delivery', 'slow', 'bad'). Then, you count their occurrences in each review.

This method turns messy, unstructured text into a clean table of numbers that a model can easily process. While much more advanced techniques exist, this is a fantastic first step for pulling signals out of text. It lets you start quantifying things like sentiment or identifying key topics without needing to jump straight to complex language models.
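To see what that table of numbers looks like, here is a bare-bones Bag-of-Words sketch in plain Python for the two reviews above. The tokenizer (lowercase, letters only) is a simplifying assumption; real projects usually lean on a library vectorizer instead.

```python
import re
from collections import Counter

reviews = ["Great service, fast delivery.", "Slow delivery, bad service."]

def tokenize(text):
    """Lowercase the text and keep only runs of letters (a deliberately crude tokenizer)."""
    return re.findall(r"[a-z]+", text.lower())

tokenized = [tokenize(review) for review in reviews]

# Vocabulary of unique words, in order of first appearance.
vocabulary = list(dict.fromkeys(word for tokens in tokenized for word in tokens))

# One row per review, one column per vocabulary word, holding the word count.
bow_matrix = []
for tokens in tokenized:
    counts = Counter(tokens)
    bow_matrix.append([counts[word] for word in vocabulary])

print(vocabulary)
for row in bow_matrix:
    print(row)
```

Each review becomes a row of six counts, one per vocabulary word, which is exactly the kind of numeric input a model can work with.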

Automating Feature Engineering for Faster Results

As we've seen, crafting powerful features is part art, part science. It's a creative process that leans heavily on domain knowledge and a whole lot of trial and error. But what if you had an AI partner to handle the most tedious parts of the job?

This is where Automated Feature Engineering (AutoFE) comes into play. Modern tools can now take on the heavy lifting, automatically digging through your data to discover and build hundreds—sometimes thousands—of potentially valuable features.

You can think of it as a supercharged brainstorming session with your dataset. These tools are designed to combine columns, apply mathematical transformations, and uncover complex patterns that a human analyst might easily miss or never even consider.

The Power of Automation in Machine Learning

The biggest win with AutoFE is the huge amount of time and effort it saves. A data scientist might spend weeks manually creating and testing new features. By handing this repetitive work over to a machine, they can focus on what really matters: high-level strategy and making sense of the model's output.

This also opens the door to more people. A business analyst, for instance, who isn't a Python guru can use an AutoFE tool to generate a solid set of features. It bridges the gap between raw data and a working predictive model, without the need to write tons of custom code. Our guide on automating machine learning explores this industry-wide shift in more detail.

Here's a quick look at what most AutoFE tools are doing under the hood:

- Generate Transformations: They'll automatically try out functions like logarithms, square roots, or reciprocals on your numerical columns to see if it helps the model find a clearer signal.

- Create Interaction Features: The tool will systematically multiply or divide different features, searching for those synergistic effects we talked about earlier.

- Extract Date and Time Components: Give it a timestamp, and it can instantly break it down into dozens of useful pieces of information, like 'day of the week,' 'is_holiday,' or 'time until the next marketing campaign.'
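The first two steps are essentially a brute-force loop. The sketch below is a hand-rolled illustration of that idea, not how any particular AutoFE tool is implemented; the column names are invented, and it assumes strictly positive values so the logarithm and reciprocal are defined.

```python
from itertools import combinations

import numpy as np
import pandas as pd

# Hypothetical numeric columns; all values are positive so log and 1/x are safe.
df = pd.DataFrame({
    "income": [20_000.0, 45_000.0, 120_000.0],
    "visits": [1.0, 4.0, 9.0],
})

# A tiny library of candidate transformations.
transforms = {
    "log": np.log,
    "sqrt": np.sqrt,
    "reciprocal": lambda s: 1.0 / s,
}

candidates = pd.DataFrame(index=df.index)

# Generate transformations: apply every function to every column.
for col in df.columns:
    for name, fn in transforms.items():
        candidates[f"{name}_{col}"] = fn(df[col])

# Create interaction features: multiply every pair of columns.
for a, b in combinations(df.columns, 2):
    candidates[f"{a}_x_{b}"] = df[a] * df[b]

print(candidates.columns.tolist())
```

Even this toy version turns two columns into seven candidates, which is why these tools pair generation with a selection step that keeps only the features that actually help.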

How Automated Feature Engineering Works

The core idea behind most AutoFE platforms is a clever algorithm called Deep Feature Synthesis. It starts with your basic "entities"—like customers, products, or transactions—and then systematically applies a library of "primitives," which are just simple operations like sum, mean, count, or max.

The magic happens when the algorithm applies these primitives across the different relationships in your data. It might automatically figure out the mean_purchase_value_per_customer or the time_since_last_session. By stacking these simple building blocks, it can construct incredibly complex and predictive features, all without a human guiding every single step.

"Automated feature engineering doesn't replace the data scientist; it supercharges them. It handles the brute-force search for good features, allowing the human expert to guide the process and validate the results." – Max Kuhn, creator of the popular caret R package.
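To give a feel for "primitives applied across relationships," here is a hand-written pandas sketch of one aggregation level between a customers table and a transactions table. It illustrates the idea only; it is not the Deep Feature Synthesis algorithm itself, and the table and column names are assumptions.

```python
import pandas as pd

# Two hypothetical entities linked by customer_id.
customers = pd.DataFrame({"customer_id": [1, 2]})
transactions = pd.DataFrame({
    "customer_id": [1, 1, 2, 2, 2],
    "amount": [10.0, 30.0, 5.0, 5.0, 20.0],
})

# The "primitives": simple aggregations applied across the relationship.
primitives = ["sum", "mean", "count", "max"]

agg = transactions.groupby("customer_id")["amount"].agg(primitives)
agg.columns = [f"{p}_amount_per_customer" for p in primitives]

# Attach the generated features back to the parent entity.
customer_features = customers.merge(agg.reset_index(), on="customer_id", how="left")

print(customer_features)
```

A real tool repeats this across every relationship and then stacks primitives on top of the results, which is where the very large feature counts come from.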

The results speak for themselves. Feature engineering is still one of the most reliable ways to boost a model's performance, and automation is just making it more powerful. Recent studies on advanced AutoFE methods have shown they can improve prediction accuracy by a median range of 29–68% over baseline models on typical business datasets. These are huge gains, often achieved with just a small extra investment in training time.

This technology is helping to make data science more accessible, allowing smaller teams to build models that were once the exclusive domain of large corporations. By letting the machine handle the exhaustive search for signals, you can build better models, faster.

Best Practices and Common Pitfalls to Avoid

You’ve done the hard work of creating a set of powerful features, and it feels like a huge win. But hold on. Before you celebrate, it's time to adopt the professional habits that ensure your model actually works in the real world, not just on your computer.

The path of feature engineering for machine learning is riddled with subtle traps. These pitfalls can easily trick you into thinking you've built a world-class model when, in reality, it's set up to fail. Let's walk through the essential practices that separate a robust, reliable AI system from a fragile one.

The Silent Model Killer: Data Leakage

Here’s a classic scenario: you're building a model to predict which stocks will rally tomorrow. While engineering features, you accidentally include tomorrow's closing price. Unsurprisingly, your model hits 100% accuracy during training. The problem? It's completely useless in the real world because you can't know tomorrow's price today.

This is the very definition of data leakage. It's a sneaky, all-too-common mistake where information from the future—or any data that won't be available at prediction time—creeps into your training set.

Data leakage creates a model that is wildly optimistic and completely unprepared for reality. It's like giving a student the answer key before a test—they'll ace it, but they haven't learned a thing.

To stop data leakage in its tracks, always ask yourself this simple question: "Would I have this exact piece of information at the very moment I need to make a prediction?" If the answer is no, it has no business being a feature. A common slip-up is calculating a global average from your entire dataset before splitting it into training and testing sets. Don't do it.
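Here is a minimal sketch of the safe version of that last point: split first, then compute the fill value from the training rows only. The data is synthetic, and the simple 80/20 positional split is an assumption made to keep the example short.

```python
import numpy as np
import pandas as pd

# Synthetic income column with every tenth value missing.
rng = np.random.default_rng(0)
df = pd.DataFrame({"income": rng.normal(50_000, 10_000, size=100)})
df.loc[::10, "income"] = np.nan

# 1. Split BEFORE computing any statistics.
train = df.iloc[:80].copy()
test = df.iloc[80:].copy()

# 2. Learn the fill value from the training rows only.
train_mean = train["income"].mean()

# 3. Apply that same training-derived value to both sets.
train["income"] = train["income"].fillna(train_mean)
test["income"] = test["income"].fillna(train_mean)

print(f"fill value learned from train: {train_mean:.2f}")
```

The leaky version would call df["income"].mean() before the split, letting the test rows influence a number the model trains on.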

Treat Your Data Pipeline Like Code

It's so easy to treat feature engineering as a messy, one-off experiment. You might tinker in a notebook, find something that seems to work, and then just move on. This kind of improvised approach is a recipe for failure on any serious project. Your feature engineering steps are just as vital as your model's code, and they deserve the same rigorous treatment.

This means your entire process must be repeatable and consistent. Every single transformation—from how you fill missing values to the way you scale numbers—needs to be scripted and saved.

Make these practices non-negotiable:

- Version Control Everything: Use a tool like Git to track changes to your data processing scripts, not just your model code.

- Document Your Decisions: Why did you choose median imputation over the mean? What was the logic behind creating a specific interaction feature? Leave notes and comments for your future self and your teammates.

- Keep It Consistent: The exact same feature engineering steps you use on your training data must be applied to any new data your model sees in production. Any deviation can lead to silent, hard-to-diagnose failures.
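One common way to get that consistency is to bundle the steps into a single object that is fitted once on training data and then reused as-is. The sketch below uses scikit-learn's Pipeline and ColumnTransformer; the column names and sample rows are invented, and this is one possible setup rather than a prescribed one.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical training data and "production" data with the same columns.
train = pd.DataFrame({
    "age": [25.0, np.nan, 40.0, 31.0],
    "plan": ["Basic", "Pro", "Enterprise", "Pro"],
})
new_data = pd.DataFrame({"age": [np.nan, 52.0], "plan": ["Pro", "Basic"]})

# Numeric columns: median imputation, then standardization.
numeric_steps = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# sparse_threshold=0 forces a dense array so the output is easy to inspect.
preprocess = ColumnTransformer(
    [
        ("num", numeric_steps, ["age"]),
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["plan"]),
    ],
    sparse_threshold=0,
)

# Fit on training data only; reuse the fitted object for anything that comes later.
X_train = preprocess.fit_transform(train)
X_new = preprocess.transform(new_data)

print(X_train.shape, X_new.shape)
```

Because the median, the scaling statistics, and the category list all live inside the fitted preprocess object, saving that object alongside the model keeps training and production in step.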

The Shift to Governed Feature Pipelines

The industry is finally moving away from messy, individual scripts and toward structured, automated solutions. We're seeing a clear trend toward governed, production-ready pipelines that manage features systematically. This is why tools like feature stores are becoming so popular—they solve the consistency problem right at the source.

Adopting a professional workflow like this pays off. According to various MLOps surveys, teams using feature stores and automated pipelines can slash model deployment time by 20–50%. They also significantly cut down on production errors caused by mismatched data processing. As highlighted in recent machine learning trend analyses, this structured approach is key to building more reliable AI systems, faster.

Your Feature Engineering Questions, Answered

As you start rolling up your sleeves and working with real data, a few key questions always seem to surface. Feature engineering is part art, part science, so it's only natural to have these "what ifs" and "how tos." Think of this as a quick chat with a mentor who's been there before.

Let's cut through the noise and get straight to the practical answers for the questions that come up most often when you're moving from theory to actually building models.

How Much Time Should I Spend on Feature Engineering?

This is probably the most common question I hear, and for good reason. The honest answer? It depends, but it's almost certainly more than you think.

There's no golden rule, but it’s not uncommon for seasoned data scientists to spend 60% to 80% of a project's timeline just on feature engineering. It sounds like a lot, but this is where the magic really happens. This phase is an iterative loop of exploring the data, brainstorming ideas, building features, and testing them out.

Spending a few focused hours creating one or two really insightful features can give you a bigger performance jump than spending days fine-tuning an algorithm.

A good rule of thumb is to block out a significant chunk of your initial project time just for data exploration and feature creation. Don't treat it as a box to tick off quickly; it's the foundation your entire model stands on.

Over time, you'll build an intuition for how much effort a given problem needs.

Is Feature Engineering Still Relevant with Deep Learning?

A fantastic question, especially with the rise of deep learning models that seem to learn features all by themselves. While it's true that deep neural networks are incredible at extracting features from raw, unstructured data (like pixels in an image or words in a document), that's not the whole story.

The reality is that the vast majority of business problems are built on structured, tabular data—the kind you find in a database or a CSV file. For this kind of data, manual feature engineering for machine learning isn't just relevant; it's often the single most powerful thing you can do to improve your model.

Here’s why it still matters so much:

- Data Scarcity: Deep learning models need massive amounts of data to work their magic. For the smaller datasets typical in many business settings, handcrafted features provide strong, clear signals that help a model learn without needing millions of examples.

- Domain Knowledge: You understand the business context better than any algorithm. Creating features like a debt-to-income-ratio for a loan application or customer_lifetime_value for a marketing model injects your expertise directly into the process. A neural network can't invent that kind of context on its own.

- Interpretability: Hand-built features are usually easy to understand. Knowing that time_since_last_purchase is a top predictor gives you a clear, actionable insight. Trying to explain why a specific neuron fired in a deep learning model? Not so much.

So, while deep learning is a powerful tool in the right context, classic feature engineering remains an absolutely essential skill for anyone working with structured data.

How Do I Know if I Have Created a Good Feature?

Ah, the million-dollar question. You've had a brilliant idea, coded up a new feature, and added it to your dataset. Is it a game-changer or just noise? Thankfully, you don't have to guess.

The best way to find out is to run an experiment. The whole idea is to see if your new feature actually makes your model better at its job.

Here’s a simple, reliable workflow to follow:

- Establish a Baseline: First, train your model using only your existing features. Record its performance score (accuracy, F1-score, whatever metric you're using). This is the number to beat.

- Add Your New Feature: Now, retrain the exact same model on the same data, but with your new feature included.

- Compare Performance: Check the score on your validation set. Did it go up? If so, congratulations—you've likely created a useful feature. If it stayed flat or even dropped, your feature might be redundant or, worse, confusing the model.

- Check Feature Importance: Most machine learning libraries can show you which features the model relied on most. After training, look at these importance scores. If your new feature is near the top of the list, that's a great sign it's capturing a valuable signal.
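The first three steps of this workflow fit in a few lines. The sketch below uses synthetic data where the target secretly depends on a ratio, then compares cross-validated R² with and without that ratio as a feature. The data, the model choice (linear regression), and the metric are all assumptions made for the demo, and it does not cover the feature-importance step.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

# Synthetic data: the target is driven by the ratio a / b plus a little noise.
rng = np.random.default_rng(42)
n = 500
a = rng.uniform(1, 10, n)
b = rng.uniform(1, 10, n)
y = 3.0 * (a / b) + rng.normal(0, 0.1, n)

# Baseline: raw features only.
X_base = np.column_stack([a, b])
# Candidate: the same features plus the engineered ratio.
X_new = np.column_stack([a, b, a / b])

model = LinearRegression()

# Same model, same data, same folds; only the feature set changes.
baseline_score = cross_val_score(model, X_base, y, cv=5, scoring="r2").mean()
new_score = cross_val_score(model, X_new, y, cv=5, scoring="r2").mean()

print(f"baseline R^2:     {baseline_score:.3f}")
print(f"with new feature: {new_score:.3f}")
```

Using cross-validation instead of a single split makes the comparison less sensitive to one lucky or unlucky partition of the data.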

By making this disciplined, iterative cycle part of your process, you can confidently build a powerful set of features backed by evidence, not just hope.
