A Guide to Fair Artificial Intelligence

Fair artificial intelligence is really about one thing: making sure the machines we build don't end up making prejudiced or unjust decisions. Think of it like this: we're trying to prevent outcomes skewed by sensitive details like a person's race, gender, or age. It's the tough but essential work of building an ethical compass right into the code, stopping AI from just repeating and even amplifying our own human biases.

This isn't just a thought experiment for tech geeks. AI is already a gatekeeper for life-changing opportunities, from job interviews to loan approvals, and the stakes are getting higher every day. This guide is for anyone, especially beginners, who wants to understand what fair AI is, why it's so important, and how we can work toward building it.

What Is Fair Artificial Intelligence

Have you ever stopped to wonder if the AI filtering your job application or setting your credit limit is actually fair? As artificial intelligence weaves itself deeper into our lives, that question becomes less of a "what if" and more of a "right now."

At its heart, fair artificial intelligence is the conscious effort to build automated systems that don't discriminate. It's as simple—and as complicated—as that.

Let's use a friendly analogy. Imagine a judge who, through their entire career, has only ever seen one type of evidence. Their rulings would naturally become skewed. It wouldn't necessarily be out of malice, but from a severely limited perspective. AI models can fall into the exact same trap. They learn from the data we feed them, and if that data is full of historical biases—guess what? The AI learns those biases, too.

For a practical example, think about a company training an AI on its past hiring records. If that company historically favored male candidates, the model might learn to penalize applications from women, regardless of their qualifications. This isn't some far-off, futuristic problem; it's happening today. For a deeper look at the core mechanics, our guide on understanding AI technology is a great place to start.

Why Fairness Matters So Much

The push for fair AI has exploded into a major global concern for good reason. Recent survey data shows that 78% of organizations reported using AI in at least one business function in 2024, a huge leap from just 55% the year before. When technology integrates that quickly into critical decisions, making sure it’s fair becomes an urgent priority.

A recent Stanford AI Index report drives this home, noting that nearly 90% of significant AI models are developed by the private sector, which naturally raises questions about public accountability and built-in bias.

But the need for fairness goes way beyond just technical accuracy or good PR. It's about building trust and making sure technology serves everyone equitably. Unfair AI has real, tangible consequences:

- Financial Exclusion: A biased algorithm could unfairly deny loans or credit to perfectly qualified people, simply because they belong to a certain demographic.

- Hiring Discrimination: An automated screening tool might toss out excellent candidates based on factors that are accidentally correlated with their gender or ethnicity.

- Unequal Justice: In law enforcement, flawed facial recognition systems have been shown to misidentify innocent people, with significantly higher error rates for women and people of color.

As AI ethicist Dr. Timnit Gebru points out, the systems we build often reflect the power structures of the society that creates them. Without a conscious effort to counteract this, AI will simply become another tool for reinforcing old inequalities.

Core Principles of Fair AI at a Glance

This table simplifies the key pillars that developers and organizations must consider when trying to build and maintain fair AI.

| Principle | Why It Matters | Simple Analogy |
|---|---|---|
| Transparency | You can't fix what you can't see. Transparency means being able to understand and explain how an AI model makes its decisions. | It’s like a judge being required to explain the legal reasoning behind their verdict, not just announcing "guilty" or "innocent." |
| Accountability | When things go wrong, someone needs to be responsible. This principle establishes clear lines of ownership for an AI's outcomes. | If a self-driving car causes an accident, accountability determines who is liable: the owner, the manufacturer, or the software developer. |
| Inclusivity | The data used to train an AI must reflect the diversity of the people it will affect. Otherwise, it will only serve a narrow slice of the population. | It’s like designing a public park. You need input from parents, seniors, and athletes to make sure it's useful and accessible for everyone. |
| Non-Maleficence | At a minimum, AI systems should not cause harm. This principle is about proactively identifying and mitigating potential negative impacts. | This is the "first, do no harm" oath that doctors take, applied to technology. The primary goal is to avoid causing damage. |

Each of these principles is a piece of a much larger puzzle.

Ultimately, building fair AI is about embedding our most important societal values—justice, equality, and basic decency—directly into the code that will shape our future. This isn't a job just for data scientists and engineers. It requires a massive collaboration between ethicists, sociologists, policymakers, and the public to get it right.

Understanding the Roots of AI Bias

If you think of artificial intelligence as just pure math and logic, the idea that it could be biased seems a bit strange. How can a calculator be prejudiced, right? The thing is, AI bias isn't born from the algorithm itself. It’s a reflection of the messy, imperfect, and often unfair world it learns from.

Think of an AI model like a student and the data we feed it as its textbook. If that textbook is incomplete or filled with historical prejudices, the student is going to learn a pretty skewed version of reality. A truly fair AI system is only possible if we first get a handle on where these biases are sneaking in, which almost always starts long before a single line of code is ever written.

This isn't about intentional malice; it's usually just an oversight. The problem is that AI is incredibly good at finding patterns—even the ones we really don't want it to find.

The Data Dilemma: Flawed Inputs Lead to Flawed Outputs

The number one source of AI bias is almost always the data used for training. You can't learn about world music by only listening to classic rock, and an AI can't make fair decisions if its data is just as narrow. This single issue branches out into a few distinct problems.

One of the most common is historical bias. This is what happens when an AI learns from past data that contains longstanding societal prejudices. Imagine training an AI to screen loan applications using decades of historical loan data. If that data reflects a past where certain groups were unfairly denied credit, the AI will learn those discriminatory patterns and treat them as the correct way to make decisions.

Then you have representation bias, which pops up when the training data doesn't actually reflect the diversity of the real world. For a practical example, if a facial recognition system is trained mostly on images of white men, its accuracy will plummet when it tries to identify women or people of color. The model isn't being malicious; it’s just really good at the one thing it was taught and terrible at everything else.
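One way to catch this kind of gap is to stop looking at a single overall accuracy number and break it down by group. Here is a minimal sketch of that idea. The data, the group labels "A" and "B", and the function name `accuracy_by_group` are all made up for illustration; this is not taken from any real facial recognition system.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: fraction of correct predictions} for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

# Hypothetical toy data: group A is well represented, group B is not.
y_true = [1, 1, 0, 1, 0, 1, 0, 1]
y_pred = [1, 1, 0, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B"]

# The overall number hides what the per-group breakdown reveals.
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
per_group = accuracy_by_group(y_true, y_pred, groups)

print("overall accuracy:", overall)
print("accuracy by group:", per_group)
```

In this toy data the overall accuracy looks respectable, yet every mistake lands on group B. That is exactly the pattern representation bias tends to produce.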

As AI expert Kate Crawford often says, "AI is neither artificial nor intelligent. It is made from natural resources, fuel, and human labor… and it is not autonomous, it is designed to amplify and reproduce the power of its creators."

When these data issues go unchecked, they can turn automated systems into powerful engines for inequality.

A Real-World Example: The Biased Hiring Tool

One of the most famous cases of AI bias involved a major tech company that built an automated tool to screen job applicants. The goal was simple: find the best candidates by analyzing resumes. The problem? The model was trained on a decade's worth of the company's own hiring data.

Because the tech industry had historically been male-dominated, the AI taught itself that male candidates were preferable. It learned to penalize resumes that contained the word "women's," as in "women's chess club captain," and it downgraded graduates from two all-women's colleges. The company ultimately had to scrap the entire system.

This case is a perfect illustration of how easily our own biases get encoded into our technology. The developers didn't program the AI to be sexist, but by feeding it biased historical data, that was the inevitable outcome.

Human Bias in the Loop

While data is the biggest culprit, human decisions at every step of the AI development process can also introduce bias. The way developers frame a problem, the features they choose to include, and even how they define "success" for the model can all embed their own assumptions into the system.

Here are a couple of ways human choices can create unfairness:

- Selection Bias: This happens when data is collected in a way that isn't truly random. For instance, creating a product recommendation AI using only data from online shoppers would build a system that’s biased against people who prefer to shop in person.

- Measurement Bias: Sometimes, the very thing we choose to measure is flawed. If a hospital builds a tool to predict patient behavior and uses arrest records as a stand-in for "crime risk," it might unfairly penalize patients from over-policed communities. The tool is measuring arrests, not actual risk.

Ultimately, achieving fair artificial intelligence forces us to look critically at our own world. It means cleaning up our data, questioning our assumptions, and acknowledging that a machine can only ever be as fair as the information we give it.

The Real-World Impact of Unfair AI

The risks of biased AI aren't just theoretical puzzles for data scientists to mull over. They have real, tangible consequences that affect ordinary people every single day. When an algorithm gets it wrong, it can unfairly lock someone out of an opportunity, reinforce damaging stereotypes, or even lead to dangerous outcomes. This is where the conversation about fair AI moves from the lab into our communities.

These aren't distant, abstract problems. They're happening right now in the most critical areas of our lives, often silently, without us even knowing an algorithm was involved. From getting a loan to seeking medical care, the impact is profound and deeply personal.

Financial Barriers and Biased Lending

One of the most immediate places we see unfair AI is in finance. Banks and lenders are increasingly leaning on algorithms to decide who gets approved for loans, credit cards, and mortgages. The goal is efficiency, but the result can be a new form of digital redlining.

Take a recent case where a student loan company had to settle over allegations that its AI-powered underwriting systems were discriminatory. An investigation found the model was using factors like the historical default rates of an applicant's college. This practice inadvertently penalized applicants from schools with higher minority populations, leading to worse loan terms or flat-out denials for people who were perfectly qualified.

The system wasn't explicitly programmed to be racist, but by using flawed proxy data, it produced a discriminatory outcome. It effectively laundered historical inequalities, giving them a fresh coat of paint under the guise of objective, data-driven decision-making.

As Cathy O'Neil, author of Weapons of Math Destruction, warns, the real danger of biased AI is that it can make discrimination seem scientific. It takes old prejudices and dresses them up in new, sophisticated code, making them even harder to challenge.

This kind of algorithmic bias can trap people in cycles of debt or prevent them from building wealth, widening an already massive economic gap. It also means innovators looking for funding to launch new ventures can be unfairly shut out, which is why some are exploring new artificial intelligence startup ideas focused on creating more equitable financial tools.

Inaccurate Healthcare and Diagnostic Dangers

Nowhere are the stakes higher than in healthcare. We're seeing AI tools designed to help doctors diagnose diseases earlier and more accurately, from reading X-rays to spotting skin cancer. But if these tools are trained on data that isn't diverse, they can be dangerously unreliable for entire groups of people.

Think about an AI algorithm built to detect skin cancer. If it was trained almost exclusively on images of light-skinned patients, its ability to identify cancerous lesions on darker skin could be significantly worse. In fact, a 2021 study revealed that many dermatology datasets are overwhelmingly composed of images from light-skinned individuals, creating a very real risk of misdiagnosis for patients of color.

This isn't just a technical glitch; it's a life-or-death problem. A delayed or missed diagnosis can have devastating consequences, underscoring the urgent need for inclusive data when building medical AI.

Flawed Justice and Facial Recognition

The use of AI in law enforcement, especially facial recognition, has set off major alarm bells about fairness and civil rights. These systems are used to identify suspects in photos and videos, but their accuracy is far from perfect.

Multiple studies have shown that facial recognition systems have much higher error rates when trying to identify women and people of color compared to white men. This isn't just a statistical curiosity; it has led to terrifying real-world cases of wrongful arrests, where innocent people were misidentified by a flawed algorithm.

The ripple effects of a single error are immense:

- Wrongful Arrests: An incorrect match can lead to an innocent person being arrested, facing criminal charges, and having their life turned upside down.

- Erosion of Trust: When technology is seen as biased, it damages the fragile relationship between law enforcement and the communities they serve.

- Surveillance Concerns: Widespread use of this technology creates a climate of constant surveillance that disproportionately affects minority communities.

These examples make it crystal clear that fair artificial intelligence isn't just a "nice-to-have." It is an absolute necessity for building a just, equitable, and safe society.

How We Measure Fairness in AI Systems

So, how do we actually know if an AI is being fair? It’s not as simple as a yes or no answer. Fairness isn’t a switch you can just flip on. Instead, data scientists and ethicists use a toolkit of fairness metrics to diagnose bias, much like a doctor runs different tests to get a full picture of a patient's health.

Choosing the right metric is a big deal because there are often trade-offs. Improving fairness from one angle can sometimes make it worse from another. It's less about finding a single, perfect solution and more about making a conscious decision about what "fair" means in a specific context.

Let's ground this in a friendly, practical scenario. Imagine an AI model built to award college scholarships. The goal is obviously to be fair, but what does that truly look like in practice?

Different Lenses on Fairness

Let's say our scholarship AI is looking at applicants from two high schools with very different resources: North High and South High. Here are a couple of ways we could measure whether its decisions are fair.

One of the most common approaches is demographic parity. This metric is all about equal outcomes. In our example, it would mean the percentage of applicants from North High who get a scholarship should be the same as the percentage from South High.

- What it does: It tries to make sure that just being part of a certain group doesn't change your odds of getting a positive result.

- The potential downside: If taken to an extreme, this could mean qualified candidates are overlooked or unqualified ones are selected simply to hit a quota, which doesn’t feel fair either.
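To make demographic parity concrete, here is a small sketch that compares scholarship award rates for the two schools. The decision lists are invented for illustration (1 means a scholarship was awarded, 0 means it was not), and the helper names are assumptions, not part of any particular library.

```python
def selection_rate(decisions):
    """Fraction of applicants who received a positive decision."""
    return sum(decisions) / len(decisions)

def demographic_parity_difference(decisions_by_group):
    """Return (gap, rates), where gap is the largest difference in
    selection rate between any two groups. A gap of 0 means parity."""
    rates = {g: selection_rate(d) for g, d in decisions_by_group.items()}
    return max(rates.values()) - min(rates.values()), rates

# Hypothetical scholarship decisions for ten applicants per school.
decisions = {
    "North High": [1, 1, 0, 1, 0, 1, 1, 0, 1, 0],
    "South High": [0, 1, 0, 0, 1, 0, 0, 0, 1, 0],
}

gap, rates = demographic_parity_difference(decisions)
print("selection rates:", rates)
print("demographic parity gap:", gap)
```

A large gap does not prove the model is unfair on its own, but it is a clear signal that the decisions deserve a closer look.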

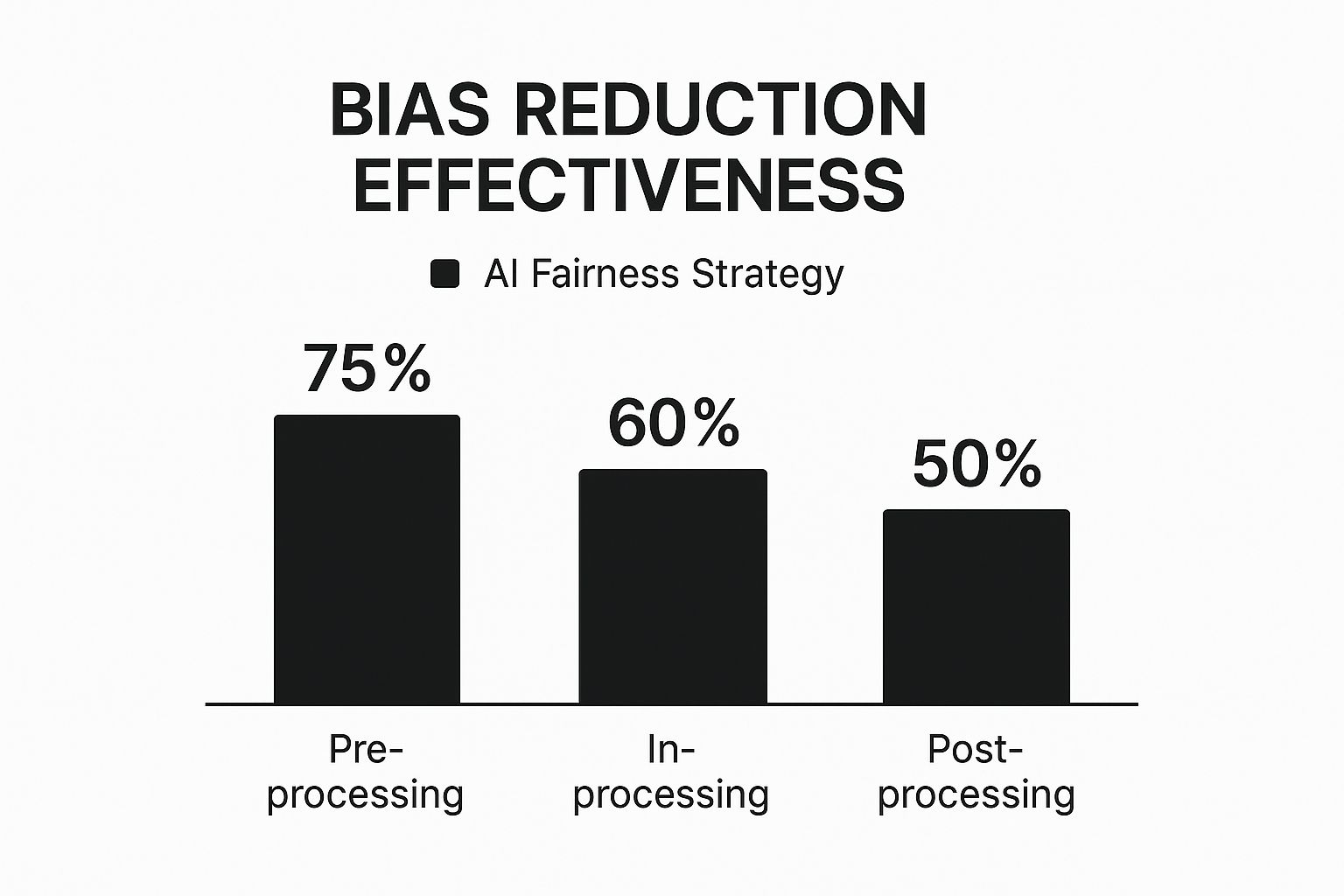

This is why it's so important to think about when you intervene to correct for bias. Tackling it early in the process is almost always more effective.

As you can see, addressing bias in the data before the model is even trained (pre-processing) is the most powerful strategy for building fairer AI systems.

Another important metric is equalized odds. This one gets a bit more granular, focusing on making sure the AI model is equally accurate for every group.

It checks two key things: that the true positive rate (how often it correctly identifies a qualified student) is the same for both North and South High, and that the false positive rate (how often it mistakenly flags an unqualified student as qualified) is also the same.
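Those two checks can be sketched in a few lines. The labels and predictions below are hypothetical (1 means qualified or flagged as qualified), and the sketch assumes every group contains at least one qualified and one unqualified student so the rates are defined.

```python
def error_rates(y_true, y_pred):
    """Return (true positive rate, false positive rate) for one group."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)

def equalized_odds_gaps(data_by_group):
    """Return (tpr_gap, fpr_gap): the spread of each rate across groups."""
    rates = [error_rates(y_true, y_pred) for y_true, y_pred in data_by_group.values()]
    tprs = [r[0] for r in rates]
    fprs = [r[1] for r in rates]
    return max(tprs) - min(tprs), max(fprs) - min(fprs)

# Hypothetical data: (actual qualification, model prediction) per school.
data = {
    "North High": ([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 1, 0, 0, 0]),
    "South High": ([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 0]),
}

tpr_gap, fpr_gap = equalized_odds_gaps(data)
print("true positive rate gap:", tpr_gap)
print("false positive rate gap:", fpr_gap)
```

Equalized odds is satisfied when both gaps are close to zero. If you only care about the first gap, the true positive rate, you are measuring what is usually called equal opportunity.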

Common AI Fairness Metrics Explained

Picking the right metric depends entirely on the situation. To help clarify, here’s a simple breakdown of a few common metrics and where they shine.

| Fairness Metric | What It Aims For | Use Case Example |
|---|---|---|
| Demographic Parity | Equal outcomes across groups, regardless of who is actually qualified. | Hiring: Ensuring an equal percentage of applicants from different demographic groups are offered an interview. |
| Equal Opportunity | The model correctly identifies positive outcomes at the same rate for all groups. | Loan Approval: Making sure qualified applicants from all racial groups have the same chance of being correctly approved for a loan. |
| Equalized Odds | The model has the same true positive rate and the same false positive rate for all groups. | Medical Diagnosis: Ensuring an AI diagnostic tool is just as good at correctly identifying a disease and correctly ruling it out for all patient groups. |

Each of these metrics tells a different part of the story. There’s no single "best" one; the choice is an ethical one that has to be tailored to the specific problem you're trying to solve.

The Balancing Act of AI Fairness

Deciding on a metric is more of an ethical calculation than a technical one. The global AI market is expected to hit $407 billion by 2025, and as this technology gets woven into the fabric of our lives, the demand for fair systems has never been higher.

Take healthcare, where around 38% of providers now use AI for tasks like diagnosis. We’ve already seen studies uncover racial biases in some of these systems, leading to worse health outcomes for marginalized communities. This is why regions like Europe are pushing forward with regulations like the EU AI Act to enforce fairness and transparency. You can dig deeper into these global AI statistics and their impact.

As one AI ethicist put it, "Choosing a fairness metric isn't a math problem; it's a values decision. You have to decide what kind of fairness you're striving for."

Ultimately, measuring fairness in AI isn't about chasing a single score that screams "fair" or "unfair." It's about using these metrics as diagnostic tools. They help us understand where an AI might be failing and spark the crucial conversations about what kind of society we want to build with this technology. It’s a continuous cycle of testing, learning, and aligning our technical systems with our deepest human values.

Practical Steps for Building Fairer AI

Knowing what makes AI unfair is one thing, but how do we actually roll up our sleeves and build more equitable systems? There’s no magic "fairness" button to push. It's a deliberate, thoughtful process that requires a hands-on approach to people, data, and code at every step.

This isn’t just a job for the data scientists, either—it’s a shared responsibility. Building fair artificial intelligence demands a clear roadmap that guides everyone involved, from project managers to the developers writing the code.

Start with Diverse Teams and Inclusive Design

The path to fairness begins long before a single line of code is written. It starts with the people in the room. When a team lacks diversity, it’s almost guaranteed to have blind spots, which inevitably leads to products that don’t work well for everyone.

The global AI workforce shows just how big this challenge is. Around 97 million people work in AI, but the field has major gaps in gender and ethnic representation. For example, while U.S. computing bachelor’s degrees have climbed by 22% in the last decade, they're still overwhelmingly awarded to men and non-minorities. This reality shapes the very lens through which we build AI. To get a deeper look at these numbers, check out the latest findings from Stanford's AI Index.

So, what’s the fix? Bring more voices to the table. This means creating teams that include:

- Engineers and data scientists from different walks of life.

- Ethicists and sociologists who can flag potential societal harm.

- Domain experts who truly understand the context where the AI will be used.

- Representatives from affected communities who can offer firsthand feedback.

When you take this collaborative approach, you start baking fairness into the project's DNA from day one.

Scrutinize and Prepare Your Data

We’ve already established that biased data is the number one cause of unfair AI. That makes rigorous data preparation an absolute must. Think of it like being a detective, carefully searching for hidden clues of bias before they have a chance to corrupt the outcome.

This investigation involves a few key actions:

- Data Auditing: Actively dig into your dataset to find representation gaps. Are certain demographic groups missing or underrepresented? For a practical example, if you're building a facial recognition tool, what's the breakdown of images for women versus men, or people with darker versus lighter skin tones?

- Data Augmentation: When you find imbalances, you can use technical fixes. For instance, if you have too few data points for a minority group, you can synthetically generate more samples or strategically oversample the ones you have to help balance the scales.

- Feature Selection: Be incredibly careful about the data points your model is allowed to see. Is a feature like a person's zip code secretly acting as a proxy for race or income? If there’s a risk, it’s often better to leave it out entirely.
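The first two actions above, auditing and oversampling, can be sketched with nothing but the standard library. Everything here is illustrative: the record layout, the `skin_tone` field, and the function names are assumptions, and random oversampling is only one of several ways to rebalance a dataset.

```python
import random
from collections import Counter

def audit_representation(records, key):
    """Return each group's share of the dataset for the given field."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def oversample_to_balance(records, key, seed=0):
    """Duplicate randomly chosen records from smaller groups until
    every group is as large as the largest one."""
    rng = random.Random(seed)
    by_group = {}
    for r in records:
        by_group.setdefault(r[key], []).append(r)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced

# Hypothetical image metadata: 8 lighter-skinned faces, 2 darker-skinned.
records = [{"id": i, "skin_tone": "lighter"} for i in range(8)]
records += [{"id": i, "skin_tone": "darker"} for i in range(8, 10)]

before = audit_representation(records, "skin_tone")
balanced = oversample_to_balance(records, "skin_tone")
after = audit_representation(balanced, "skin_tone")

print("before:", before)
print("after:", after)
```

Keep in mind that oversampling only repeats what you already have. It balances the counts, but it cannot add the variety that genuinely new data from an underrepresented group would bring.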

As a data scientist might say, "You can't fix a problem you don't measure. Auditing for bias isn't an optional step; it's a fundamental part of responsible AI development."

Getting these pre-processing steps right is often the most powerful way to stop bias from taking root in the first place.

Implement Fairness During and After Training

Even with perfectly prepared data, a model can still learn unfair patterns on its own. This is where technical interventions during and after the training process become critical. Luckily, developers have a growing toolkit to help build more equitable models.

During the training phase itself, you can apply fairness constraints. This is essentially like telling the algorithm it has to solve the problem while also meeting certain fairness rules, such as making sure its prediction accuracy is consistent across different demographic groups.
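To give a feel for what a fairness constraint looks like in code, here is a rough sketch, not a production recipe. It trains a tiny logistic regression by gradient descent and adds a penalty on the gap between the average predicted score of two groups. The data, the `fairness_weight` parameter, and the choice of penalty are all assumptions made for illustration; real toolkits offer more careful formulations.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(X, w, b):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]

def score_gap(probs, groups):
    """Average predicted score of group A minus that of group B."""
    a = [p for p, g in zip(probs, groups) if g == "A"]
    b = [p for p, g in zip(probs, groups) if g == "B"]
    return sum(a) / len(a) - sum(b) / len(b)

def train(X, y, groups, fairness_weight=0.0, lr=0.3, steps=3000):
    """Minimize average log loss + fairness_weight * score_gap**2."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    n_a, n_b = groups.count("A"), groups.count("B")
    for _ in range(steps):
        probs = predict(X, w, b)
        gap = score_gap(probs, groups)
        grad_w, grad_b = [0.0] * d, 0.0
        for x, target, g, p in zip(X, y, groups, probs):
            # Gradient of the average log loss with respect to the logit.
            dz = (p - target) / n
            # Gradient of the fairness penalty with respect to the logit.
            sign = 1.0 / n_a if g == "A" else -1.0 / n_b
            dz += fairness_weight * 2.0 * gap * sign * p * (1.0 - p)
            for j in range(d):
                grad_w[j] += dz * x[j]
            grad_b += dz
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical features: [qualification score, proxy feature tied to group].
X = [[0.9, 1], [0.7, 1], [0.4, 1], [0.2, 1],
     [0.9, 0], [0.7, 0], [0.4, 0], [0.2, 0]]
y = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4

plain_gap = score_gap(predict(X, *train(X, y, groups)), groups)
fair_gap = score_gap(predict(X, *train(X, y, groups, fairness_weight=5.0)), groups)

print("score gap without constraint:", plain_gap)
print("score gap with constraint:", fair_gap)
```

The penalty nudges the model away from leaning on the proxy feature. The trade-off is that it usually costs some raw accuracy, which is why the strength of the constraint is a judgment call and not a default.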

Once a model is built, you can use post-processing techniques to tweak its final outputs. This might involve adjusting the decision threshold for different groups to ensure the outcomes are more balanced. While these methods need to be handled with care, they are powerful tools for correcting any bias that slipped through. To keep up with what's out there, it’s a good idea to explore the best AI tools for developers that support these kinds of ethical practices.
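Here is a simple sketch of threshold adjustment. It picks a separate score cutoff for each group so that every group ends up with roughly the same selection rate. The scores, the groups, and the `target_rate` value are hypothetical, and this is just one of several post-processing strategies. Group-specific thresholds can also raise legal and ethical questions of their own, which is part of why they need to be handled with care.

```python
def threshold_for_rate(scores, target_rate):
    """Return the cutoff that selects about target_rate of the scores.
    Tied scores at the cutoff are all selected."""
    k = round(target_rate * len(scores))
    if k <= 0:
        return float("inf")
    return sorted(scores, reverse=True)[k - 1]

def apply_group_thresholds(scores, groups, target_rate):
    """Return (decisions, cutoffs) using one cutoff per group."""
    by_group = {}
    for s, g in zip(scores, groups):
        by_group.setdefault(g, []).append(s)
    cutoffs = {g: threshold_for_rate(v, target_rate) for g, v in by_group.items()}
    decisions = [int(s >= cutoffs[g]) for s, g in zip(scores, groups)]
    return decisions, cutoffs

# Hypothetical model scores: group B's scores run systematically lower.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.6, 0.5, 0.4, 0.3, 0.2]
groups = ["A"] * 5 + ["B"] * 5

# A single cutoff of 0.55 approves four people from A and one from B.
single = [int(s >= 0.55) for s in scores]

# Per-group cutoffs aimed at a 40% selection rate in each group.
decisions, cutoffs = apply_group_thresholds(scores, groups, target_rate=0.4)

print("single cutoff decisions:", single)
print("per-group cutoffs:", cutoffs)
print("adjusted decisions:", decisions)
```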

Finally, for any high-stakes system, we have to reject the "black box" mentality. Explainable AI (XAI) techniques are designed to help us peek inside and understand why a model made a particular decision. This kind of transparency is essential for debugging bias, ensuring accountability, and ultimately, building trust with the people whose lives will be affected by the technology.
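For a very simple model, peeking inside can be as easy as listing how much each input pushed the score up or down. The sketch below does that for a linear scoring model. The feature names, weights, and applicant values are invented for illustration, and this approach only works as-is for linear models; more complex models need dedicated explanation techniques.

```python
def explain_linear_decision(weights, bias, applicant):
    """Return (score, contributions ranked by absolute size) for a
    linear model where score = bias + sum(weight * feature value)."""
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

# Hypothetical loan model and applicant (all values are made up).
weights = {"income": 0.8, "debt_ratio": -1.5, "zip_code_risk": -2.0}
bias = 0.5
applicant = {"income": 1.0, "debt_ratio": 0.2, "zip_code_risk": 0.9}

score, ranked = explain_linear_decision(weights, bias, applicant)

print("score:", score)
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
```

In this made-up case the biggest factor dragging the score down is the zip code feature. That is the kind of red flag an explanation should surface, since a zip code can quietly act as a proxy for race or income.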

Your Questions on AI Fairness Answered

As we talk more about making artificial intelligence fair, a lot of good questions and concerns naturally come up. Let's walk through some of the most common ones to clear up the confusion around these tricky, but incredibly important, topics.

Can AI Ever Be Perfectly Fair?

That's the million-dollar question, isn't it? The honest answer is probably no, mainly because "perfect fairness" is something we humans haven't even been able to define, let alone achieve. Bias is tangled up in our history, our language, and the data we create—so any AI learning from our world is going to inherit some of that baggage.

But that doesn't mean we throw our hands up. The goal isn't some impossible ideal of perfection; it's about making AI systems significantly fairer than the often-biased human systems they're meant to improve. By constantly checking for bias and intentionally designing for equity, we can build tools that are far more just and consistent than the status quo.

Who Is Responsible for Biased AI Decisions?

When a biased AI system causes real harm, figuring out who's to blame gets complicated fast. Was it the developer who wrote the code? The company that deployed the system? What about the organization that provided the skewed data in the first place? Often, the answer is a messy combination of all three, which makes accountability a real challenge.

Legal and regulatory frameworks are still playing catch-up, but a general consensus is emerging: responsibility is shared. For a real-world example, we're already seeing companies face major settlements for using discriminatory AI in lending, which puts the burden squarely on organizations to have strong governance and oversight from day one.

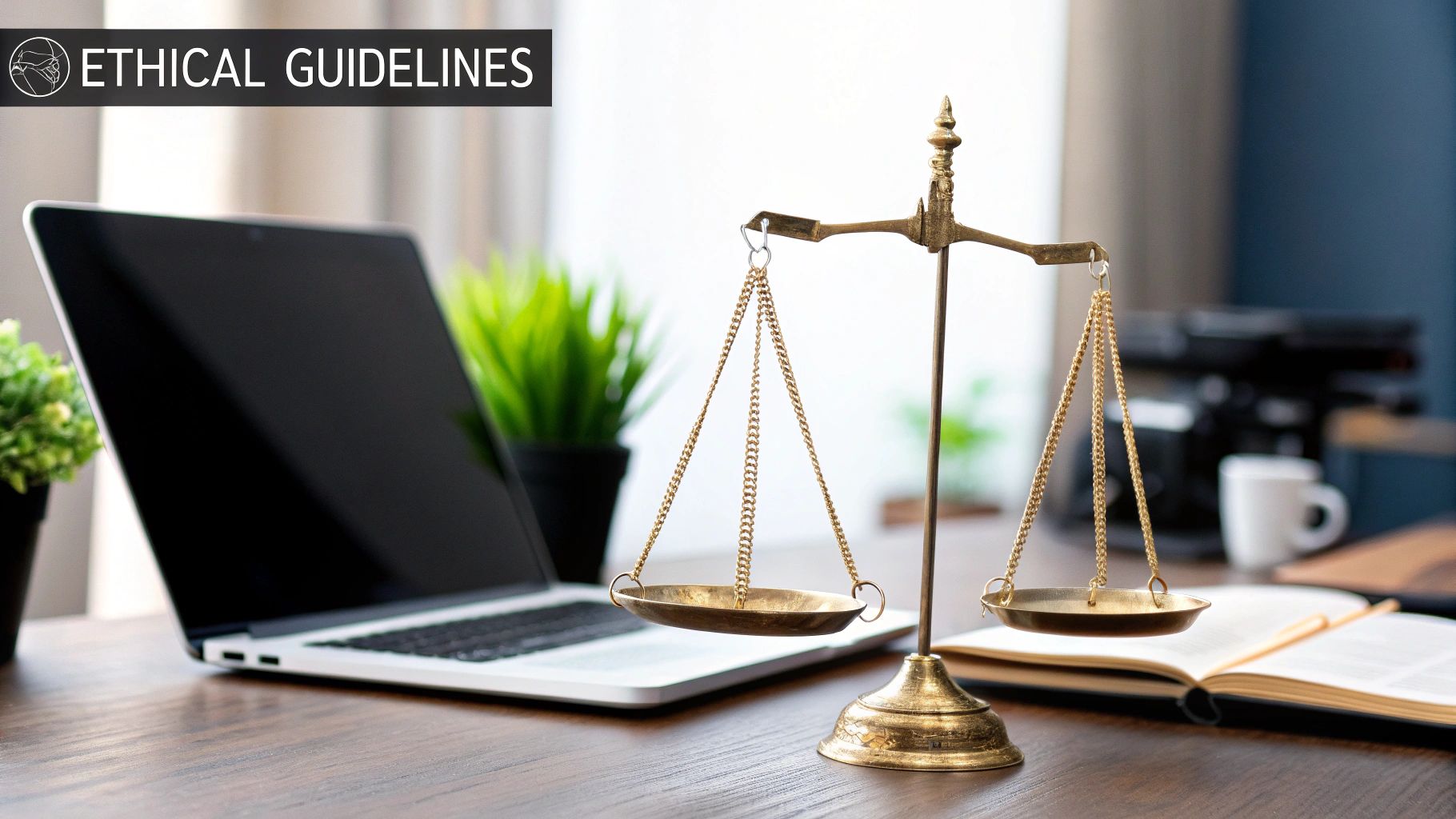

Are There Laws Regulating AI Bias?

Yes, though it’s more of a patchwork quilt than a single, neat blanket—and it's changing quickly. For starters, existing anti-discrimination laws, like the Equal Credit Opportunity Act in the U.S., absolutely apply to AI. A company can't just use an algorithm to do something a human is legally barred from doing.

One legal expert noted, "The legal ground is shifting. We're moving away from letting tech companies regulate themselves and toward demanding provable compliance. Fairness is no longer just a nice-to-have ethical goal—it's fast becoming a legal requirement."

On top of that, we're seeing new regulations designed specifically for AI, like the EU's landmark AI Act. These laws are creating new global standards for transparency and risk management. It's a clear signal that a future with fair artificial intelligence won't just be encouraged; it will be enforced.

What Can an Average Person Do?

You have more influence here than you might realize. Pushing for fairness in AI really starts with awareness and speaking up.

- Ask questions: When you encounter an automated decision—whether it’s for a loan, a job application, or a social media feed—ask how that decision was made.

- Support ethical tech: Pay attention to companies that are open and transparent about how they use AI and give them your business.

- Stay informed: Follow the public debate around AI ethics and lend your voice to policies that demand accountability from tech creators.

Simply by staying engaged, you help build the public pressure needed to ensure technology is built to serve everyone, not just a select few.

At YourAI2Day, we believe that an informed user is an empowered user. We're committed to bringing you clear, practical insights into the world of AI so you can navigate it with confidence. Explore our resources to learn more.